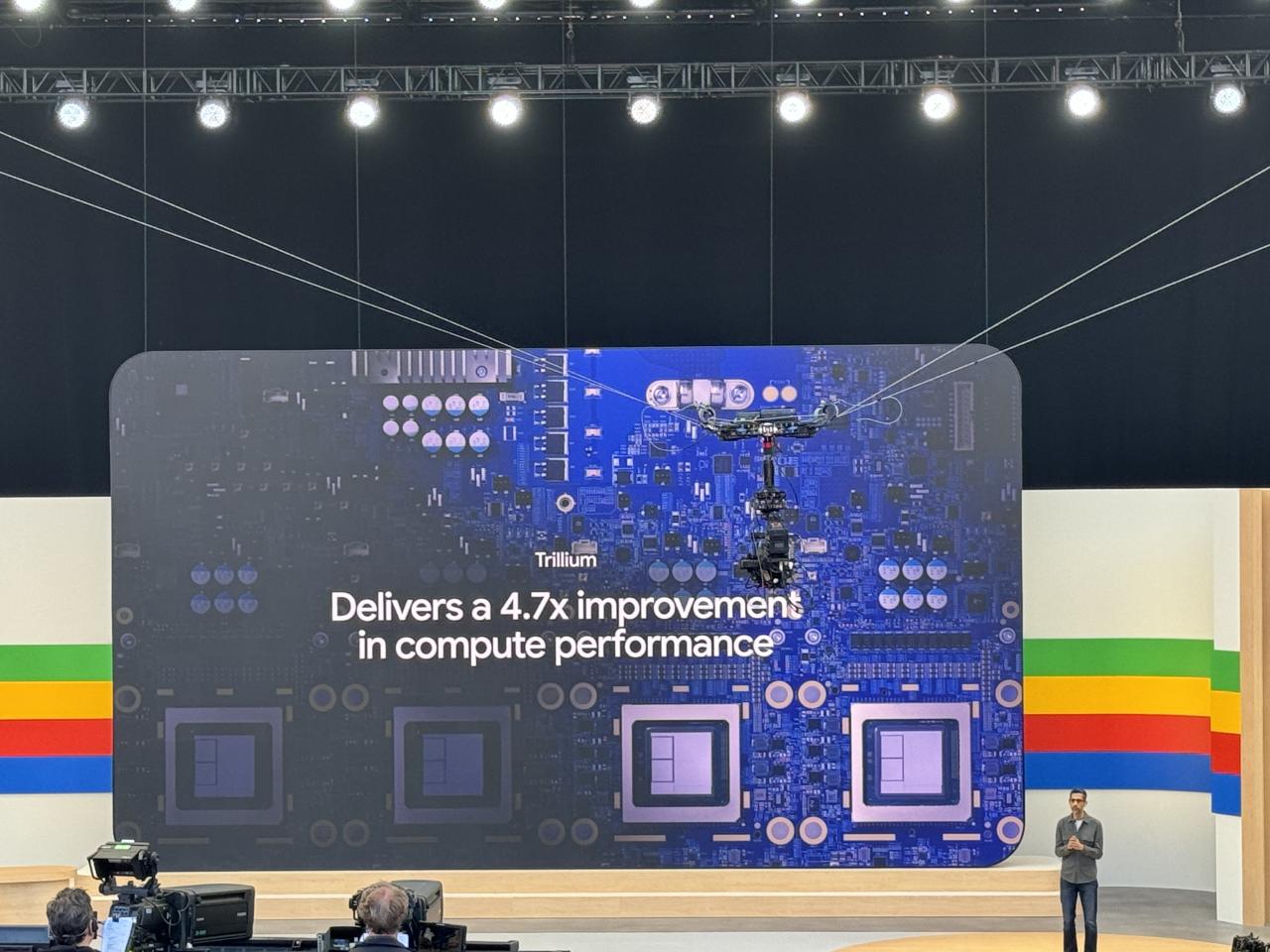

Google’s next-gen TPUs promise a 4.7x performance boost. That’s not a typo, folks. Google’s latest Tensor Processing Units are promising a massive leap forward in AI processing power, and it’s got everyone in the tech world buzzing. Think about it: a 4.7x speed increase could mean shorter training runs and bigger models in fields like natural language processing, computer vision, and even drug discovery. This isn’t just a faster chip; it’s a game-changer for the future of AI.

Google has been steadily pushing the boundaries of AI hardware with its TPUs, and this latest generation continues that push. The new TPUs are designed to handle the ever-increasing demands of complex AI models. But how does Google achieve such a dramatic performance boost? The answer lies in a combination of architectural improvements, enhanced memory, and a clever use of parallelism.

Google’s TPU Evolution

Google’s Tensor Processing Units (TPUs) have revolutionized the landscape of machine learning, offering specialized hardware designed to accelerate the training and inference of deep learning models. The TPU’s journey has been marked by continuous innovation, each generation building upon the successes of its predecessors.

TPU Generations and Their Advancements

The development of TPUs has been driven by Google’s ambition to push the boundaries of artificial intelligence. The TPU’s evolution is a testament to the company’s dedication to creating hardware tailored for the unique demands of deep learning.

- TPU v1 (2016): The first-generation TPU was designed to accelerate matrix multiplication, a fundamental operation in deep learning, and was aimed at inference rather than training. It was deployed in Google’s data centers, powering services like Google Translate and Google Photos.

- TPU v2 (2017): Building upon the success of TPU v1, the second generation introduced significant performance improvements. TPU v2 featured a custom interconnect network, enabling faster communication between processing units. This allowed for larger and more complex models to be trained efficiently.

- TPU v3 (2018): TPU v3 represented a major leap forward, with a significant increase in processing power and memory capacity. It incorporated advancements in chip design and packaging, leading to a dramatic performance boost. TPU v3 was used in Google’s flagship products, including Search, Assistant, and Cloud AI.

- Next-Gen TPUs (2024): The latest generation, which Google calls Trillium, promises a 4.7x increase in peak compute performance per chip over the previous-generation TPU v5e. This leap in performance is attributed to several technological innovations, including advancements in chip design, memory architecture, and interconnect technology.
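To see why matrix multiplication sits at the heart of all this, here is a minimal pure-Python sketch of a single fully connected neural-network layer. It is only an illustration: the helper names (`matmul`, `dense_layer`) and the toy numbers are assumptions made up for this example, and real TPU code would go through a framework rather than nested lists.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)] for row in a]


def dense_layer(inputs, weights, bias):
    """One fully connected layer: relu(inputs @ weights + bias)."""
    pre_activation = matmul(inputs, weights)
    return [[max(0.0, value + b) for value, b in zip(row, bias)]
            for row in pre_activation]


# Hypothetical toy values: a batch of 2 examples, 3 input features, 2 output units.
inputs = [[1.0, 2.0, 3.0],
          [0.5, -1.0, 2.0]]
weights = [[0.1, -0.2],
           [0.3, 0.4],
           [-0.5, 0.6]]
bias = [0.05, -0.1]

outputs = dense_layer(inputs, weights, bias)
print(outputs)
```

Almost all of the arithmetic happens inside `matmul`, and a deep model stacks many such layers. That is the operation an accelerator of this kind is built to run in bulk.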

Performance Comparison and Technological Innovations

The next-gen TPUs are poised to deliver unprecedented performance, enabling researchers and developers to tackle even more complex and demanding AI tasks.

- Performance Gains: The 4.7x performance boost in the next-gen TPUs is a testament to the relentless pursuit of innovation by Google’s engineers. This significant improvement allows for faster model training, enabling researchers to iterate and experiment more rapidly.

- Technological Advancements: The performance leap is driven by a combination of technological innovations, including:

  - Enhanced Chip Design: The next-gen TPUs feature a new chip design that optimizes performance for deep learning workloads. This includes advancements in the processing units, memory access, and data flow.

  - Advanced Memory Architecture: The new generation of TPUs incorporates a more efficient memory architecture, allowing for faster data access and reduced latency. This is crucial for training large-scale models with massive datasets.

  - Improved Interconnect Technology: The next-gen TPUs leverage a high-speed interconnect network, enabling faster communication between processing units. This ensures that data can be shared efficiently across the entire system, leading to improved training speed.
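A headline number like 4.7x describes the part of the job the chip speeds up. How much of that shows up in end-to-end training time depends on how much of the run is actually spent in that part. Amdahl’s law gives a rough estimate. The sketch below is a back-of-the-envelope illustration: the function name and the fractions are assumptions, not measurements of any real workload.

```python
def overall_speedup(accelerated_fraction, component_speedup):
    """Amdahl's law: end-to-end speedup when only part of the work gets faster.

    accelerated_fraction: share of the original run time that benefits (0 to 1).
    component_speedup: how much faster that share becomes (e.g. 4.7).
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if component_speedup <= 0:
        raise ValueError("component_speedup must be positive")
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / component_speedup)


# Hypothetical shares of training time spent in accelerated compute.
for fraction in (0.5, 0.8, 0.95, 1.0):
    speedup = overall_speedup(fraction, 4.7)
    print(f"{fraction:.0%} of time accelerated -> {speedup:.2f}x overall")
```

Only a run that spends all of its time in accelerated compute sees the full 4.7x. That is why faster memory and interconnects matter alongside the raw chip speed: they shrink the part of the run that the compute boost cannot touch.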

Impact on Machine Learning and AI

The next generation of TPUs promises a 4.7x performance boost, which could significantly accelerate research and development across machine learning and AI. This extra processing power makes it practical to train larger, more complex models and to iterate on them faster, opening the door to breakthroughs in a range of applications.

Natural Language Processing

The enhanced performance of next-gen TPUs will revolutionize natural language processing (NLP) by enabling the development of more sophisticated language models. These models will be able to understand and generate human language with greater accuracy and nuance.

- Improved Language Translation: Next-gen TPUs will enable the development of more accurate and fluent machine translation systems. This will facilitate communication and understanding across language barriers, breaking down cultural and geographical divides.

- Advanced Text Summarization: Next-gen TPUs will power AI models that can automatically summarize large volumes of text, providing concise and informative summaries. This will be particularly useful in fields like research, news reporting, and legal documentation.

- Enhanced Chatbots: Next-gen TPUs will allow for the creation of more intelligent and engaging chatbots that can understand and respond to complex queries and conversations. This will improve customer service, provide personalized assistance, and enhance user experiences.

Implications for Cloud Computing

Google’s next-generation TPUs promise a significant leap forward in AI and machine learning capabilities. This advancement has profound implications for cloud computing, particularly for Google Cloud Platform (GCP).

The increased processing power and efficiency of these TPUs will allow GCP to offer its customers even more advanced AI and machine learning services, enabling them to tackle complex problems with greater speed and accuracy.

Impact on Cloud Computing Infrastructure

The arrival of next-generation TPUs will significantly impact cloud computing infrastructure. The increased demand for these powerful processors will drive the development of new hardware and software solutions, pushing the boundaries of what’s possible in cloud computing.

This will likely lead to a shift in cloud infrastructure, with a greater focus on specialized hardware like TPUs for AI and machine learning workloads.

Here’s a breakdown of the potential impact:

* Enhanced AI and ML Services: GCP will be able to offer more sophisticated AI and ML services, including natural language processing, computer vision, and predictive analytics, all powered by the enhanced capabilities of next-generation TPUs.

* Scalability and Efficiency: The increased processing power of TPUs will enable GCP to scale its AI and ML services more efficiently, allowing customers to handle larger datasets and more complex models without sacrificing performance.

* Reduced Costs: The efficiency of next-generation TPUs could potentially lead to lower costs for AI and ML workloads on GCP, making these technologies more accessible to a wider range of businesses and organizations.
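Whether a faster chip actually lowers the bill depends on how its hourly price compares with its speedup. Here is a quick sketch of that arithmetic. Every number in it is hypothetical (these are not GCP prices), and it assumes run time shrinks in direct proportion to the speedup, which real workloads rarely do perfectly.

```python
def cost_per_run(baseline_hours, price_per_chip_hour, chips, speedup=1.0):
    """Cost of one training run, assuming run time scales as 1 / speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (baseline_hours / speedup) * price_per_chip_hour * chips


# Hypothetical figures: a 100-hour job on 8 chips.
old_cost = cost_per_run(100.0, price_per_chip_hour=1.00, chips=8)
# A made-up newer chip: 3x faster on this job, but twice the hourly price.
new_cost = cost_per_run(100.0, price_per_chip_hour=2.00, chips=8, speedup=3.0)

print(f"old generation: ${old_cost:.2f}")
print(f"new generation: ${new_cost:.2f}")
```

Under these assumptions the newer chip comes out cheaper per run as long as its price premium stays below its real-world speedup. When the two are equal, the cost is a wash.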

Competition Among Cloud Providers

The introduction of next-generation TPUs will undoubtedly intensify competition among cloud providers. Other providers like Amazon Web Services (AWS) and Microsoft Azure will need to respond with their own advancements in AI and ML hardware and software to stay competitive.

This competition will benefit cloud computing users as it will drive innovation and lead to a wider range of choices for AI and ML services.

Benefits and Challenges for Developers and Researchers

Next-generation TPUs on GCP will offer developers and researchers significant benefits, but also present certain challenges:

Benefits

* Faster Training and Inference: TPUs will enable developers to train AI models faster and perform inference tasks more efficiently, reducing development time and accelerating research efforts.

* Access to Advanced AI Capabilities: Developers will have access to a wider range of advanced AI capabilities, including specialized algorithms and libraries optimized for TPUs, allowing them to build more sophisticated applications.

* Cost-Effective Solution: TPUs on GCP can provide a cost-effective solution for AI and ML workloads, especially for large-scale projects, enabling researchers and developers to achieve their goals with more efficient resource utilization.

Challenges

* Learning Curve: Developers and researchers will need to familiarize themselves with the specifics of programming for TPUs and understand the differences in how they operate compared to traditional CPUs and GPUs.

* Limited Availability: Initially, access to next-generation TPUs may be limited, potentially creating a bottleneck for developers and researchers eager to utilize their capabilities.

* Software Ecosystem: The ecosystem of software tools and libraries specifically designed for TPUs is still evolving, and developers may need to adapt their existing workflows and tools to work with TPUs effectively.

The Future of AI Hardware

The recent advancements in Google’s next-generation TPUs, promising a 4.7x performance boost, offer a glimpse into the exciting future of AI hardware. This leap forward not only signifies the relentless pursuit of enhanced computational power but also paves the way for groundbreaking innovations in AI development and deployment.

Potential for Further Performance Improvements

The pursuit of ever-increasing performance in AI hardware is driven by the insatiable appetite of AI models for more computational resources. As AI models grow in complexity and size, the demand for powerful hardware to train and run them efficiently becomes paramount. The next-generation TPUs represent a significant step in this direction, but the journey towards even more powerful AI hardware is far from over.

The following are some potential avenues for further performance improvements:

- Advancements in Chip Design: Ongoing research and development in semiconductor technology will continue to push the boundaries of chip design, leading to smaller, faster, and more energy-efficient processors. This could involve innovations in transistor size, architecture, and materials, allowing for even denser integration of computational units within a single chip.

- Specialized Hardware Architectures: AI workloads often exhibit unique characteristics, such as high parallelism and matrix operations. Dedicated hardware architectures, such as specialized AI accelerators, can be optimized to efficiently handle these workloads, leading to significant performance gains. This could involve developing custom processors specifically designed for AI tasks, or optimizing existing hardware for AI-specific operations.

- Innovative Memory Technologies: The speed of data access plays a crucial role in AI performance. Research in memory technologies, such as non-volatile memory and high-bandwidth memory, could lead to significant improvements in data transfer rates and overall system performance. This would allow AI models to access and process data much faster, enabling faster training and inference.
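The memory point can be made concrete with the roofline model, a common rule of thumb: a chip’s achievable throughput is capped either by its peak compute or by how fast memory can feed it, whichever is lower. The sketch below uses made-up hardware numbers (they are not the specs of any TPU), and the function name is an assumption for this example.

```python
def attainable_tflops(peak_tflops, bandwidth_tb_per_s, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    TB/s multiplied by FLOPs/byte gives TFLOP/s, so the units line up.
    """
    memory_bound_limit = bandwidth_tb_per_s * flops_per_byte
    return min(peak_tflops, memory_bound_limit)


# Hypothetical accelerator: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK_TFLOPS = 100.0
BANDWIDTH_TB_PER_S = 1.0

# Arithmetic intensity = FLOPs performed per byte moved from memory.
for intensity in (10.0, 50.0, 100.0, 400.0):
    base = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_PER_S, intensity)
    doubled = attainable_tflops(PEAK_TFLOPS, 2 * BANDWIDTH_TB_PER_S, intensity)
    print(f"{intensity:6.0f} FLOPs/byte: {base:6.1f} -> {doubled:6.1f} TFLOP/s with 2x bandwidth")
```

Workloads that do little math per byte are limited by memory, so extra bandwidth helps them directly. Workloads that do a lot of math per byte are already at the compute ceiling and see no gain from faster memory alone.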

Emergence of New AI Hardware Architectures

The relentless pursuit of performance in AI hardware is not limited to simply improving existing technologies. The field is also witnessing the emergence of entirely new hardware architectures specifically designed for AI workloads. These architectures often leverage principles of parallel processing, distributed computing, and specialized hardware components to unlock new levels of computational power.

Here are some examples of emerging AI hardware architectures:

- Neuromorphic Computing: Inspired by the structure and function of the human brain, neuromorphic computing aims to develop hardware systems that mimic the way neurons and synapses interact. These systems can potentially offer significant advantages in terms of energy efficiency and learning capabilities, particularly for tasks involving complex pattern recognition and decision-making.

- Quantum Computing: Quantum computers harness the principles of quantum mechanics to perform calculations that are intractable for classical computers. While still in its early stages of development, quantum computing holds immense potential for revolutionizing AI, particularly in areas such as drug discovery, materials science, and optimization problems.

- Hybrid Architectures: Combining the strengths of different hardware architectures, such as CPUs, GPUs, and specialized AI accelerators, can create powerful hybrid systems that offer optimal performance for a wide range of AI workloads. These systems can leverage the best of each architecture to handle specific tasks efficiently, leading to improved overall performance and resource utilization.

Ethical and Societal Implications of Powerful AI Hardware

The rapid advancements in AI hardware have profound ethical and societal implications. As AI systems become more powerful, they can potentially impact various aspects of human life, raising concerns about bias, fairness, privacy, and job displacement. It is crucial to address these issues proactively and ensure that the development and deployment of AI technology align with ethical principles and societal values.

The following are some key ethical and societal implications of powerful AI hardware:

- Bias and Fairness: AI systems are trained on vast datasets, and these datasets can reflect existing societal biases. Powerful AI hardware can amplify these biases, leading to unfair or discriminatory outcomes. It is crucial to develop methods for identifying and mitigating bias in AI systems and ensure that AI algorithms are fair and equitable.

- Privacy and Security: AI systems often collect and process large amounts of personal data, raising concerns about privacy and security. Powerful AI hardware can enhance the capabilities of AI systems to analyze and manipulate data, making it even more important to implement robust data privacy and security measures.

- Job Displacement: As AI systems become more sophisticated, they can automate tasks previously performed by humans, leading to job displacement. It is important to consider the potential impact of AI on the workforce and develop strategies for retraining and reskilling workers to adapt to the changing job market.

The potential of Google’s Next-Gen TPUs extends far beyond just faster processing speeds. It’s a testament to the incredible progress being made in AI hardware, paving the way for even more powerful and efficient AI systems. We’re talking about breakthroughs in areas like personalized medicine, self-driving cars, and even understanding the mysteries of the universe. It’s a future where AI isn’t just a buzzword, it’s a force for good, changing the world in ways we can only begin to imagine. So, buckle up, folks, because the future of AI is accelerating faster than ever before.

Google’s next-gen TPUs are promising a massive 4.7x performance boost, which is awesome news for anyone who relies on AI for their daily grind. This kind of power could be a game-changer for everything from streaming devices like Chromecast, which just got support for CBS content, to more complex applications like self-driving cars. We can’t wait to see what developers come up with, and how these powerful new TPUs will change the world of tech.

Standi Techno News
