Too many models: it’s a problem that’s becoming increasingly prevalent in the world of technology. With the rapid advancement of artificial intelligence and machine learning, new models keep appearing for everything from image recognition to natural language processing. This proliferation is exciting, but it also presents challenges. The sheer volume of available models makes it harder to choose the right one for a given task, and it adds redundancy and complexity to development and deployment.

The consequences of having too many models can be significant. For example, businesses may struggle to keep up with the latest models, leading to inefficiencies and missed opportunities. Researchers may find it difficult to compare and contrast different models, hindering progress in the field. And consumers may be overwhelmed by the sheer number of choices, making it difficult to find the right solution for their needs.

The Problem of Overabundance

The rapid development and deployment of machine learning models has driven innovation across many fields. It has also created a new challenge: overabundance. With countless models vying for attention, it becomes increasingly difficult to identify the most suitable option for a given task.

Consequences of Model Overabundance

Having too many models in a specific field can lead to several consequences, potentially hindering progress and innovation. One major concern is the difficulty in choosing the right model for a specific task. With a vast array of models available, it can be challenging to determine which model is most appropriate for a particular use case, especially when considering factors such as accuracy, efficiency, and computational resources. This decision-making process can be time-consuming and resource-intensive, potentially delaying the implementation of AI solutions.

Furthermore, model overabundance can contribute to the development of “model fatigue,” where users become overwhelmed by the sheer number of options and lose interest in exploring new models. This can stifle innovation and limit the adoption of AI technologies.

Factors Contributing to Model Overabundance

Several factors contribute to the proliferation of models in various fields. One significant factor is the ease of model development and deployment. Advancements in machine learning frameworks and cloud computing platforms have made it easier than ever for researchers and developers to create and share their models. This accessibility has led to an explosion of model creation, with researchers constantly seeking to improve upon existing models or develop new ones for specific tasks.

Another contributing factor is the competitive nature of the AI landscape. Companies and research institutions are constantly vying for recognition and market share, leading to a race to develop and deploy new models. This competitive pressure can drive the development of models that may not be fully vetted or validated, potentially leading to unreliable or suboptimal results.

Industries Affected by Model Overabundance

Model overabundance is a concern in various industries, particularly those heavily reliant on AI technologies. For example, in the field of natural language processing (NLP), the abundance of language models has created challenges for developers seeking to choose the right model for their specific needs.

Similarly, in the healthcare industry, the proliferation of medical imaging models has led to concerns about model accuracy and reliability. The sheer number of models available can make it difficult to assess their performance and determine which model is best suited for a particular diagnostic task.

Challenges in Choosing the Right Model

Choosing the right model for a given task is a critical step in any AI project. However, the abundance of models makes this decision-making process more challenging. Several factors must be considered when selecting a model, including:

- Accuracy: The model’s ability to provide accurate predictions or results for the specific task.

- Efficiency: The model’s computational requirements and processing speed.

- Data Requirements: The amount and type of data required to train and deploy the model.

- Transparency: The model’s explainability and interpretability, particularly in sensitive domains like healthcare.

- Cost: The cost associated with developing, deploying, and maintaining the model.

Navigating this complex landscape and making informed decisions requires a deep understanding of the available models and their strengths and limitations.

Impact on Efficiency and Innovation

The proliferation of AI models presents a double-edged sword for the advancement of technology. While a diverse model landscape offers numerous benefits, the sheer volume of models can also create challenges in terms of efficiency and innovation. This section delves into the complexities of model overabundance, exploring its impact on development, deployment, and the overall pace of technological progress.

The Impact on Development and Deployment Efficiency

The abundance of AI models can significantly impact the efficiency of development and deployment. While the availability of numerous models provides a wider selection for specific tasks, it also introduces complexities in choosing the right model and integrating it into existing systems. The process of evaluating, comparing, and selecting the most suitable model from a vast pool can be time-consuming and resource-intensive. This can lead to delays in project timelines and increase the overall cost of development.

The Need for Standardization and Collaboration

The current overabundance of AI models presents a significant challenge for users trying to navigate the landscape and find the most suitable tool for their needs. To address this, standardization and collaboration are crucial to create a more efficient and accessible AI ecosystem.

A Framework for Standardizing Model Evaluation and Comparison

Standardizing model evaluation and comparison is essential for providing users with a clear understanding of the capabilities and limitations of different models. This can be achieved by establishing a common set of metrics and benchmarks for evaluating model performance across various tasks and datasets.

- Defining a Common Set of Metrics: A standardized set of metrics should be adopted for each type of task. For classification, that typically means accuracy, precision, recall, and F1-score; other tasks, such as machine translation, need their own task-appropriate metrics. This allows for a consistent and comparable assessment of models that address the same task.

- Establishing Benchmarks: Standardized benchmarks should be created for various tasks, including natural language processing, image recognition, and machine translation. These benchmarks should be publicly available and used for evaluating the performance of different models under the same conditions.

- Developing a Framework for Reporting Evaluation Results: A framework for reporting evaluation results should be established to ensure consistency and transparency. This framework should include details about the dataset used, the evaluation metrics employed, and the model’s performance on different tasks.
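As a rough illustration of the three points above, the sketch below computes the classification metrics named in the list and packages them with the dataset and task into one report record. The `EvaluationReport` fields, the model name, and the benchmark name are hypothetical placeholders, not part of any existing standard.

```python
from dataclasses import dataclass, asdict


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


@dataclass
class EvaluationReport:
    """Hypothetical report format: model, dataset, task, and metrics."""
    model_name: str
    dataset: str
    task: str
    metrics: dict


def build_report(model_name, dataset, task, y_true, y_pred):
    return EvaluationReport(model_name, dataset, task,
                            classification_metrics(y_true, y_pred))


# Made-up labels and predictions, for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
report = build_report("example-classifier", "example-benchmark-v1",
                      "binary classification", y_true, y_pred)
print(asdict(report))
```

Because every report carries the dataset and task alongside the numbers, two models can only be compared when those fields match, which is the point of evaluating under the same conditions.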

A System for Sharing Best Practices and Knowledge Related to Model Selection

Sharing best practices and knowledge related to model selection is crucial for helping users make informed decisions about which models to use. This can be achieved by creating a centralized platform where users can access information about different models, their strengths and weaknesses, and real-world applications.

- Creating a Centralized Knowledge Base: A centralized knowledge base can be created to provide comprehensive information about different models, including their capabilities, limitations, and recommended use cases. This knowledge base can be populated by contributions from model developers, researchers, and users.

- Developing a Model Selection Guide: A model selection guide can be developed to help users navigate the vast landscape of AI models and identify the most suitable option for their specific needs. This guide should provide a framework for evaluating models based on their performance, cost, and other factors.

- Facilitating Knowledge Sharing Through Forums and Communities: Online forums and communities can be established to foster discussions and knowledge sharing among model developers and users. These platforms can serve as a valuable resource for exchanging insights, best practices, and real-world experiences related to model selection.

A Platform for Collaboration Among Model Developers and Users

Collaboration among model developers and users is crucial for accelerating innovation and addressing the challenges of model overabundance. This can be achieved by creating a platform that facilitates communication, knowledge sharing, and joint efforts in developing and deploying new models.

- Creating a Platform for Open Collaboration: A platform can be established to facilitate open collaboration among model developers, researchers, and users. This platform can provide tools for sharing code, data, and documentation, as well as for collaborating on new model development projects.

- Encouraging Open Source Model Development: Encouraging open source model development can foster collaboration and innovation. Open source models allow for greater transparency, community involvement, and the development of more robust and reliable models.

- Facilitating Knowledge Transfer Between Academia and Industry: Collaboration between academia and industry can bridge the gap between research and practical applications. This can involve joint research projects, internships, and knowledge transfer initiatives.

Addressing the Challenges of Model Overabundance

Standardization and collaboration can address the challenges of model overabundance by creating a more efficient and accessible AI ecosystem.

- Improving Model Discoverability: Standardization and collaboration can improve model discoverability by creating a centralized platform where users can easily search for and compare different models based on their specific needs. This reduces the time and effort required to identify suitable models, making the AI ecosystem more accessible.

- Facilitating Model Selection: Standardization and collaboration can facilitate model selection by providing users with a clear understanding of the capabilities and limitations of different models. This allows for more informed decisions about which models to use, leading to more effective and efficient AI deployments.

- Promoting Innovation: Collaboration among model developers and users can foster innovation by encouraging the development of new models and applications. This can lead to the creation of more powerful and versatile AI tools, addressing a wider range of challenges and opportunities.

Strategies for Model Selection and Management

The abundance of models presents a new challenge: how to choose the right one for the job and manage this growing collection effectively. This section explores key criteria for model evaluation, best practices for managing a diverse portfolio, and a step-by-step guide to choosing the right model.

Identifying Key Criteria for Model Evaluation

Evaluating models involves considering various factors based on the specific needs of the task.

- Accuracy and Performance: For classification tasks, models should be evaluated on accuracy, precision, recall, and F1-score. These metrics show how well the model performs on a given task and which kinds of errors it makes. For example, a model for medical diagnosis needs high recall in particular, because a missed condition is costly, while a model for recommending products can tolerate more errors.

- Efficiency and Speed: The time it takes for a model to process data and generate results is crucial, especially in real-time applications. Consider the model’s computational requirements, memory usage, and inference time.

- Explainability and Interpretability: Some models are easier to understand than others. Explainability is essential for building trust in the model’s decisions and for debugging issues. For example, in financial risk assessment, understanding the model’s reasoning is crucial for transparency and accountability.

- Data Requirements: Different models have varying data requirements. Some models need vast amounts of data, while others can work with smaller datasets. It’s essential to choose a model that aligns with the available data resources.

- Cost and Resources: Consider the cost of training, deploying, and maintaining the model. This includes factors like hardware, software, and human resources.
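One simple way to combine the criteria above is a weighted score. The sketch below assumes each criterion has already been normalized to a 0 to 1 scale where higher is better; the candidate names, scores, and weights are invented for illustration and would need to come from your own measurements and priorities.

```python
# Weights express how much each criterion matters for this task (sum to 1).
weights = {
    "accuracy": 0.4,
    "speed": 0.2,
    "explainability": 0.2,
    "data_fit": 0.1,
    "affordability": 0.1,
}

# Hypothetical candidates with made-up, pre-normalized scores.
candidates = {
    "large_neural_network": {
        "accuracy": 0.95, "speed": 0.30, "explainability": 0.20,
        "data_fit": 0.50, "affordability": 0.30,
    },
    "gradient_boosting": {
        "accuracy": 0.90, "speed": 0.70, "explainability": 0.60,
        "data_fit": 0.80, "affordability": 0.70,
    },
    "logistic_regression": {
        "accuracy": 0.82, "speed": 0.95, "explainability": 0.95,
        "data_fit": 0.90, "affordability": 0.95,
    },
}


def weighted_score(scores, weights):
    """Sum of criterion scores, each multiplied by its weight."""
    return sum(weights[name] * scores[name] for name in weights)


# Rank candidates from best to worst overall score.
ranking = sorted(
    ((name, weighted_score(scores, weights))
     for name, scores in candidates.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

With these particular weights the most accurate candidate ranks last, which shows how the other criteria can outweigh raw accuracy. Raising the accuracy weight would change the ordering.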

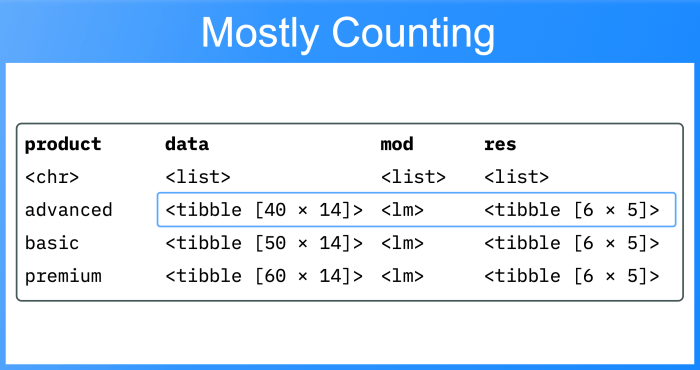

Best Practices for Managing a Diverse Model Portfolio

Managing a diverse model portfolio requires a structured approach to ensure efficient utilization and avoid redundancy.

- Centralized Model Repository: Establish a central repository to store, track, and manage all models. This repository should include metadata about each model, such as its purpose, training data, performance metrics, and deployment details.

- Model Versioning and Tracking: Implement a system for versioning and tracking model updates. This ensures that you can easily identify and revert to previous versions if needed.

- Automated Model Evaluation and Selection: Leverage automation to streamline model evaluation and selection processes. This can involve using tools that automatically assess model performance, identify potential biases, and recommend suitable models for specific tasks.

- Model Monitoring and Retraining: Continuously monitor model performance and retrain models as needed to maintain accuracy and prevent degradation over time. This involves setting up monitoring systems that track key metrics and trigger retraining processes when performance drops below acceptable thresholds.
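The practices above can be sketched as a small in-memory registry. This is a minimal illustration, not a production design: the class names, the metadata fields, and the 0.80 threshold are all assumptions, and a real system would persist records and store the model artifacts themselves.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    training_data: str
    metrics: dict


@dataclass
class ModelRecord:
    name: str
    purpose: str
    versions: list = field(default_factory=list)


class ModelRegistry:
    """Hypothetical central repository with simple version tracking."""

    def __init__(self):
        self._records = {}

    def register(self, name, purpose, training_data, metrics):
        """Add a new version; version numbers start at 1 and increase."""
        record = self._records.setdefault(name, ModelRecord(name, purpose))
        version = ModelVersion(len(record.versions) + 1,
                               training_data, dict(metrics))
        record.versions.append(version)
        return version

    def latest(self, name):
        return self._records[name].versions[-1]

    def get(self, name, version):
        """Fetch an earlier version, for example to roll back."""
        return self._records[name].versions[version - 1]


def needs_retraining(live_metric, threshold):
    """Flag a model whose monitored metric fell below the threshold."""
    return live_metric < threshold


registry = ModelRegistry()
registry.register("churn-model", "predict customer churn",
                  "customers-2023", {"f1": 0.81})
registry.register("churn-model", "predict customer churn",
                  "customers-2024", {"f1": 0.85})

print(registry.latest("churn-model"))
print(needs_retraining(live_metric=0.78, threshold=0.80))
```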

Choosing the Right Model for a Specific Task

Selecting the right model involves a systematic process of evaluating different options based on the task requirements.

- Define the Problem and Objectives: Clearly define the problem you are trying to solve and the desired outcomes. This will guide your model selection process. For example, if you are trying to predict customer churn, you need a model that can accurately identify customers at risk of leaving.

- Analyze Available Data: Understand the characteristics of your data, including its volume, quality, and format. This will help you narrow down the models that are suitable for your data. For instance, if you have a small dataset, you might consider using a model that is less data-hungry.

- Explore Potential Models: Research and explore different models that are relevant to your problem domain. Consider the strengths and weaknesses of each model and how they align with your requirements.

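- Evaluate Model Performance: Train the shortlisted models and evaluate them on held-out data using metrics that match your objectives. Compare the results and choose the model that performs best on those metrics while still meeting your efficiency, explainability, and cost constraints.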

- Deploy and Monitor: Once you have selected a model, deploy it into production and monitor its performance over time. Retrain the model as needed to maintain its accuracy and address any emerging issues.
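The evaluation step in this process might look like the following sketch, using the customer churn example. To keep it self-contained, the candidates are hypothetical hand-written rules standing in for trained models, and the held-out records are invented; in practice each candidate would be a model fitted on separate training data.

```python
# Held-out records: (months_inactive, support_tickets, churned).
# Invented data for illustration only.
held_out = [
    (4, 0, 1), (1, 3, 1), (0, 0, 0), (2, 1, 0), (5, 2, 1),
    (1, 0, 0), (3, 0, 1), (0, 2, 1), (3, 0, 0),
]

# Hypothetical candidates: each maps features to a 0/1 churn prediction.
candidates = {
    "inactive_only": lambda months, tickets: int(months >= 3),
    "tickets_only": lambda months, tickets: int(tickets >= 2),
    "inactive_or_tickets":
        lambda months, tickets: int(months >= 3 or tickets >= 2),
}


def accuracy(predict, records):
    """Fraction of held-out records the candidate labels correctly."""
    correct = sum(1 for months, tickets, churned in records
                  if predict(months, tickets) == churned)
    return correct / len(records)


# Score every shortlisted candidate on the same held-out data.
scores = {name: accuracy(predict, held_out)
          for name, predict in candidates.items()}
best_name = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: {score:.2f}")
print("selected:", best_name)
```

Accuracy is used here only for brevity. For churn, where missing an at-risk customer may cost more than a false alarm, recall or F1 could be the better selection metric.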

Optimizing Model Use for Efficiency and Effectiveness

Maximizing the efficiency and effectiveness of model use involves optimizing various aspects of the model lifecycle.

- Model Optimization Techniques: Apply techniques like hyperparameter tuning, feature engineering, and model ensembling to improve model performance. These techniques can enhance accuracy and improve generalization, though tuning and ensembling usually add training and inference cost.

- Efficient Model Deployment: Optimize model deployment for fast inference times and minimal resource consumption. This might involve using specialized hardware, optimizing model architecture, or leveraging cloud-based platforms.

- Model Explainability and Transparency: Make model decisions understandable and transparent to stakeholders. This involves using techniques like feature importance analysis, decision tree visualization, and counterfactual explanations.

- Continuous Model Improvement: Establish a process for continuous model improvement. This involves regularly monitoring model performance, identifying areas for improvement, and retraining models as needed.

The Future of Model Development

The current model overabundance presents both opportunities and challenges for the future of technology development. While the sheer number of models can be overwhelming, it also reflects the rapid advancement of AI and the increasing demand for specialized solutions. This abundance necessitates innovative approaches to model selection, management, and development.

The Role of Artificial Intelligence in Addressing Model Overabundance

Artificial intelligence itself can play a crucial role in addressing the challenges posed by model overabundance. AI-powered tools can be developed to automate tasks like model selection, evaluation, and deployment, making the process more efficient and scalable. For example, AI-driven platforms can analyze model performance metrics, identify potential biases, and recommend suitable models for specific tasks. This automated approach can streamline the model selection process and reduce the manual effort required for managing a vast model landscape.

The Potential for Emerging Technologies to Simplify Model Selection and Management

Emerging technologies like federated learning and model compression can ease parts of model deployment and management. Federated learning allows models to be trained on decentralized datasets, reducing the need for centralized data storage and enabling collaboration across different organizations. Model compression techniques, such as quantization, pruning, and distillation, reduce the size of models, often with only a small loss of accuracy, making them more efficient to store, deploy, and manage. These technologies can contribute to a more sustainable and efficient model ecosystem.
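To make model compression concrete, the sketch below shows one common technique, symmetric 8-bit quantization, applied to a short list of made-up weights. It is a simplified illustration rather than how any particular framework implements it. Each weight is stored as a small integer plus one shared scale, and the printed error shows the rounding loss this introduces, which is why accuracy should be re-checked after compressing a model.

```python
def quantize(weights, bits=8):
    """Map float weights to signed integers sharing one scale factor."""
    levels = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / levels
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration.
weights = [0.82, -0.41, 0.05, -1.27, 0.63, 0.0]

quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("integers:", quantized)
print("scale:", scale)
print("largest rounding error:", max_error)
```

Storing 8-bit integers instead of 32-bit floats cuts weight storage to roughly a quarter, at the price of a rounding error of at most half the scale per weight.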

The Impact of Model Overabundance on the Future of Technology Development

Model overabundance will likely lead to a more specialized and modular approach to technology development. Instead of relying on monolithic solutions, developers will increasingly focus on building modular systems composed of specialized models. This modularity will enable greater flexibility, adaptability, and scalability. For instance, in the field of natural language processing, we might see the development of specialized models for different languages, dialects, or domains, allowing for more accurate and context-aware interactions.

Ethical Considerations Associated with the Proliferation of Models

The proliferation of models raises several ethical considerations. One key concern is the potential for bias in models trained on limited or biased datasets. Another challenge is the need for transparency and explainability in model decision-making, especially in sensitive applications like healthcare or finance. It is crucial to develop ethical guidelines and frameworks to ensure responsible development and deployment of models, addressing concerns about bias, fairness, and accountability.

The challenges of model overabundance are real, but there are solutions. By focusing on standardization, collaboration, and best practices for model selection and management, we can navigate this complex landscape and unlock the full potential of these powerful tools. The future of technology development depends on our ability to address this issue head-on, ensuring that the proliferation of models leads to innovation and progress, not confusion and inefficiency.

Standi Techno News