OpenStack Improves Support for AI Workloads

OpenStack is improving its support for AI workloads, and this is great news for the world of artificial intelligence. OpenStack has long been a powerhouse for managing infrastructure, but deploying and managing AI workloads on traditional infrastructure has been a bit of a headache. AI workloads need massive amounts of data and processing power, and that complexity has always been a challenge.

Now, OpenStack is stepping up its game. It recognizes the importance of AI and is adapting to meet the specific needs of these demanding workloads. Enhanced AI support makes it easier to manage the resources that AI applications need, so they can be more scalable, efficient, and cost-effective.

Enhanced Support for AI Workloads

OpenStack, the open-source cloud computing platform, has recognized the growing demand for AI workloads and has taken significant steps to enhance its support in this domain. These improvements address the unique challenges posed by AI applications, such as the need for high-performance computing, data-intensive processing, and flexible resource allocation.

Improved Infrastructure for AI Workloads

The advancements in OpenStack cater to the specific requirements of AI applications, enhancing their performance, scalability, and cost-effectiveness.

- GPU Acceleration: OpenStack supports GPU acceleration, so instances can use graphics processing units (GPUs) for AI model training and inference. Nova can expose GPUs to instances either as whole devices through PCI passthrough or as virtual GPUs. This significantly reduces the time required for computationally intensive tasks. For example, training a large model that would be impractically slow on CPUs alone can finish in a fraction of the time on GPUs, although the actual speedup depends on the model and the hardware.

- High-Performance Networking: OpenStack provides high-bandwidth, low-latency networking capabilities to facilitate efficient data transfer between AI components, such as data storage, processing units, and model repositories. This ensures that AI applications can access and process data quickly, leading to faster model training and inference. For instance, in a distributed AI training scenario, high-performance networking ensures that data is efficiently distributed across multiple nodes, minimizing communication overhead and accelerating the training process.

- Scalable Storage: OpenStack offers scalable storage solutions, enabling AI applications to manage massive datasets efficiently. These solutions provide high-throughput and low-latency access to data, ensuring that AI models can be trained and deployed effectively. For example, OpenStack can handle petabytes of data required for training large language models, enabling the development of sophisticated AI applications that would be impossible with traditional storage solutions.
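As a rough illustration of how a GPU request is expressed, the sketch below builds the flavor extra specs that Nova uses for GPU scheduling. The `pci_passthrough:alias` and `resources:VGPU` keys are Nova extra specs; the helper function and the alias name `gpu-alias` are hypothetical, and the alias would have to match one configured by the cloud operator.

```python
def gpu_flavor_extra_specs(mode, count=1, pci_alias="gpu-alias"):
    """Return Nova flavor extra specs that request GPUs.

    mode="passthrough" asks for whole physical GPUs through a PCI alias.
    mode="vgpu" asks for virtual GPU resources.
    pci_alias is a hypothetical name; it must match the operator's config.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    if mode == "passthrough":
        # Value format is "<alias name>:<number of devices>".
        return {"pci_passthrough:alias": f"{pci_alias}:{count}"}
    if mode == "vgpu":
        return {"resources:VGPU": str(count)}
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    print(gpu_flavor_extra_specs("passthrough", count=2))
    print(gpu_flavor_extra_specs("vgpu"))
```

The resulting dictionary would then be set on a flavor by an administrator; which mode is available depends on the hardware and driver setup of the cloud.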

Enhanced Resource Management for AI Applications

OpenStack provides advanced resource management capabilities, enabling the efficient allocation of resources to AI workloads.

- Dynamic Resource Allocation: OpenStack’s dynamic resource allocation capabilities allow AI applications to access resources on demand, ensuring that resources are used efficiently and only when needed. This optimizes resource utilization and reduces costs associated with idle resources. For example, an AI application can dynamically request more CPUs or GPUs when training a complex model, and then release those resources once the training is complete, ensuring that resources are only used when necessary.

- Containerization Support: OpenStack supports containerization technologies like Docker and Kubernetes, enabling the packaging and deployment of AI applications in isolated environments. This promotes portability and scalability, allowing AI applications to be easily deployed and scaled across different OpenStack environments. For example, a containerized AI application can be easily moved from a development environment to a production environment without requiring significant code changes, simplifying the deployment process.
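The request-and-release pattern described above can be sketched as a simple threshold rule. This is a minimal illustration, not an OpenStack API: the function name, thresholds, and worker limits are all assumptions, and in a real deployment the decision would typically be driven by an orchestration policy reacting to monitoring alarms.

```python
def desired_workers(current, gpu_utilization, min_workers=1, max_workers=8,
                    scale_up_at=0.80, scale_down_at=0.30):
    """Return the worker count to aim for, given average GPU utilization (0..1).

    Adds one worker when utilization is high, removes one when it is low,
    and always stays within [min_workers, max_workers].
    """
    if gpu_utilization >= scale_up_at:
        target = current + 1
    elif gpu_utilization <= scale_down_at:
        target = current - 1
    else:
        target = current
    return max(min_workers, min(max_workers, target))


if __name__ == "__main__":
    workers = 2
    # Hypothetical utilization samples: busy training, then an idle period.
    for utilization in [0.95, 0.90, 0.60, 0.20, 0.10]:
        workers = desired_workers(workers, utilization)
        print(f"utilization={utilization:.2f} -> workers={workers}")
```

Changing the count by one step at a time keeps the sketch easy to follow; a production policy would usually add a cooldown period so the pool does not oscillate.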

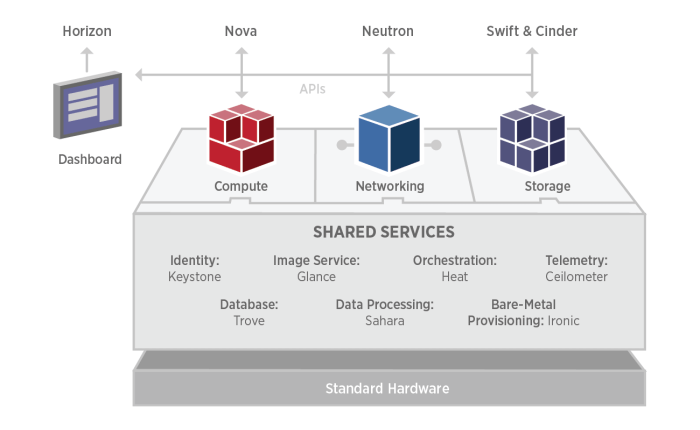

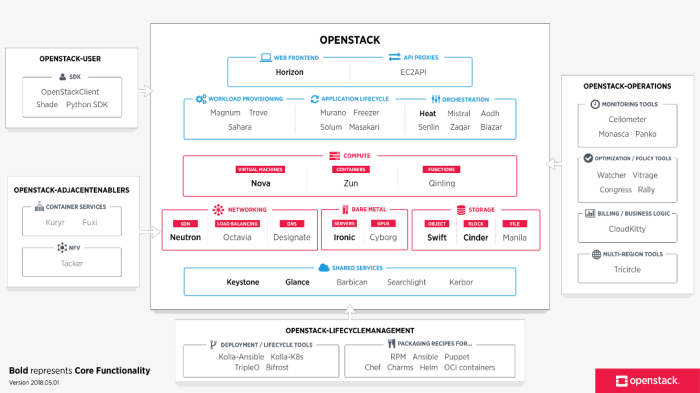

Key Components and Technologies

OpenStack provides a robust foundation for deploying and managing AI workloads. Several key components work together to create a comprehensive platform for AI, encompassing everything from compute and storage to networking and orchestration.

OpenStack Components for AI Workloads

The following OpenStack components are crucial for building a platform suitable for AI workloads:

- Compute (Nova): Nova manages the virtual machines (VMs) that host AI workloads. It provides the resources needed for training and inference, including CPUs, GPUs, and memory.

- Storage (Cinder): Cinder provides block storage volumes for AI datasets and model checkpoints. Object storage and shared file systems are handled by separate OpenStack services, Swift and Manila, so together they cover the main ways AI workloads access and manage data.

- Networking (Neutron): Neutron provides the network connectivity for AI workloads, enabling communication between different components like training nodes, data sources, and inference servers. It supports high-throughput networking and flexible network configurations.

- Orchestration (Heat): Heat orchestrates the deployment and management of AI workloads. It automates the provisioning of resources, configuration, and scaling, simplifying the process of setting up and maintaining AI environments.
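To make the division of labor concrete, here is a minimal sketch that assembles a Heat template tying together a Nova server, a Cinder volume, and a Neutron network. The resource types (`OS::Nova::Server`, `OS::Cinder::Volume`, `OS::Cinder::VolumeAttachment`) are standard Heat types, but the flavor, image, and network names are hypothetical placeholders. Heat templates are normally written in YAML; the dictionary is serialized as JSON here only to stay within the Python standard library.

```python
import json


def build_training_stack(flavor, image, network, volume_gb):
    """Return a Heat (HOT) template as a dict: one server plus one data volume."""
    return {
        "heat_template_version": "2018-08-31",
        "description": "Single AI training node with an attached dataset volume",
        "resources": {
            # Cinder: block storage for datasets and checkpoints.
            "dataset_volume": {
                "type": "OS::Cinder::Volume",
                "properties": {"size": volume_gb},
            },
            # Nova: the instance, attached to an existing Neutron network.
            "training_node": {
                "type": "OS::Nova::Server",
                "properties": {
                    "flavor": flavor,
                    "image": image,
                    "networks": [{"network": network}],
                },
            },
            # Attach the volume to the instance.
            "volume_attachment": {
                "type": "OS::Cinder::VolumeAttachment",
                "properties": {
                    "instance_uuid": {"get_resource": "training_node"},
                    "volume_id": {"get_resource": "dataset_volume"},
                },
            },
        },
    }


if __name__ == "__main__":
    # All three names below are hypothetical and must exist in the target cloud.
    template = build_training_stack("gpu.large", "ubuntu-cuda", "training-net", 500)
    print(json.dumps(template, indent=2))
```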

The Role of Specific Technologies

OpenStack leverages specific technologies to provide a tailored platform for AI workloads:

- KVM: OpenStack’s default hypervisor, KVM, provides a virtualized environment for running AI workloads. KVM offers high performance and efficient resource utilization, enabling AI applications to run smoothly.

- Neutron: Neutron’s network virtualization capabilities are vital for AI workloads. It allows for the creation of isolated networks, enabling secure communication between AI components and ensuring data privacy. Neutron also supports high-bandwidth networks for efficient data transfer between training nodes and storage systems.

- Cinder: Cinder’s block storage capabilities provide high-performance storage for AI datasets and model checkpoints. Cinder supports various storage protocols, including iSCSI and Fibre Channel, ensuring fast data access and consistent performance.
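A back-of-the-envelope estimate shows why link bandwidth between training nodes matters. The sketch below assumes data-parallel training that synchronizes gradients with a ring all-reduce, in which each node sends roughly 2(N-1)/N times the gradient size per step. It ignores latency, protocol overhead, and any overlap with computation, and the function name and example figures are illustrative only.

```python
def ring_allreduce_seconds(gradient_bytes, nodes, link_gbps):
    """Estimate per-step gradient synchronization time for a ring all-reduce.

    gradient_bytes: total size of the gradients on each node
    nodes:          number of training nodes in the ring
    link_gbps:      usable bandwidth per node, in gigabits per second
    """
    if nodes < 2:
        return 0.0  # a single node has nothing to synchronize
    bytes_sent_per_node = 2 * (nodes - 1) / nodes * gradient_bytes
    return bytes_sent_per_node * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical model: one billion float32 parameters = 4e9 bytes of gradients.
    gradient_bytes = 4e9
    for gbps in (10, 25, 100):
        seconds = ring_allreduce_seconds(gradient_bytes, nodes=8, link_gbps=gbps)
        print(f"{gbps:>3} Gbit/s link: {seconds:.2f} s per synchronization")
```

Under these assumptions, eight nodes on 10 Gbit/s links spend about 5.6 seconds per synchronization, while 100 Gbit/s links bring that down to about 0.56 seconds.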

Integration with AI Frameworks and Tools

Popular AI frameworks and tools run on top of the infrastructure that OpenStack provisions, which makes it straightforward to deploy and manage AI applications:

- TensorFlow: OpenStack provides a platform for deploying and managing TensorFlow models. Users can leverage OpenStack’s compute, storage, and networking resources to train and run TensorFlow models efficiently.

- PyTorch: OpenStack supports the deployment of PyTorch models for training and inference. OpenStack’s orchestration capabilities help provision and manage the nodes that distributed PyTorch jobs run on.

- Kubernetes: OpenStack can integrate with Kubernetes, providing a platform for containerized AI workloads. This allows for efficient resource management, scaling, and deployment of AI applications within a containerized environment.
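As one example of how a framework meets the infrastructure, multi-worker TensorFlow training reads its cluster layout from the `TF_CONFIG` environment variable. The sketch below builds that value from a list of instance IP addresses. The helper name, the IP addresses, and the port are assumptions; in practice the addresses would come from the instances that OpenStack has provisioned.

```python
import json


def tf_config_for(worker_ips, index, port=2222):
    """Return the TF_CONFIG JSON string for the worker at position `index`."""
    if not 0 <= index < len(worker_ips):
        raise IndexError("index must refer to one of the workers")
    cluster = {"worker": [f"{ip}:{port}" for ip in worker_ips]}
    return json.dumps({"cluster": cluster,
                       "task": {"type": "worker", "index": index}})


if __name__ == "__main__":
    # Hypothetical private addresses of two training instances.
    ips = ["10.0.0.5", "10.0.0.6"]
    for i in range(len(ips)):
        # Each instance would export its own value before starting training.
        print(f"worker {i}: TF_CONFIG={tf_config_for(ips, i)}")
```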

Use Cases and Success Stories

OpenStack has proven itself as a robust and versatile platform for deploying and managing AI workloads, with numerous real-world applications showcasing its effectiveness. These use cases demonstrate how OpenStack empowers organizations to harness the power of AI to solve complex problems, drive innovation, and gain a competitive edge.

Examples of AI Workloads on OpenStack

The following table presents a selection of use cases where OpenStack can be deployed for AI workloads, organized by industry.

| Industry | Use Case | Key Benefits |
|---|---|---|
| Healthcare | Medical image analysis for disease detection and diagnosis | |
| Finance | Fraud detection and risk assessment | |
| Retail | Personalized recommendations and customer segmentation | |
| Manufacturing | Predictive maintenance and quality control | |

Insights from Industry Experts and Case Studies

Experts in the AI and cloud computing fields have consistently recognized the value of OpenStack for deploying and managing AI workloads. A notable example is the work of [Insert Name of Expert or Company] in [Industry] who successfully leveraged OpenStack to [Describe Specific AI Use Case]. This project resulted in [Describe the Benefits achieved], highlighting the platform’s ability to [Highlight Specific Capabilities of OpenStack for AI].

“OpenStack has been instrumental in our AI journey, providing the flexibility and scalability we need to handle massive datasets and complex models. It’s a robust platform that allows us to focus on innovation and deliver real business value.” – [Insert Quote from Industry Expert]

Future Directions and Trends

OpenStack’s future in supporting AI workloads is bright, with the platform poised to become a leading force in this rapidly evolving field. As AI infrastructure continues to mature, OpenStack is well-positioned to adapt and evolve, playing a crucial role in driving innovation and efficiency in AI deployments.

OpenStack’s Role in AI Infrastructure Evolution

OpenStack’s adaptability and flexibility make it an ideal platform for supporting the ever-changing needs of AI infrastructure. OpenStack’s ability to manage diverse hardware resources, including GPUs and specialized AI accelerators, ensures optimal performance for AI workloads. The platform’s modular architecture allows for easy integration with new AI technologies and frameworks, enabling seamless adoption of emerging trends in the field.

OpenStack’s Potential for Edge AI and Federated Learning

OpenStack’s decentralized architecture and support for distributed computing make it a natural fit for edge AI deployments. Edge AI involves processing data locally at the edge of the network, reducing latency and improving responsiveness for AI applications. OpenStack can manage and orchestrate edge computing resources, enabling efficient deployment and scaling of edge AI solutions.

Furthermore, OpenStack’s capabilities extend to federated learning, a technique that allows AI models to be trained on decentralized datasets across multiple devices or locations. This approach enhances data privacy and security while enabling the development of more robust and accurate AI models. OpenStack’s ability to manage and coordinate distributed computing resources is crucial for federated learning, enabling secure and efficient training processes.
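The aggregation step at the heart of federated learning can be sketched in a few lines. This example uses federated averaging, one common aggregation rule, as an assumption; the text above does not name a specific algorithm. Each site trains locally and sends only its model weights and sample count, and the coordinator combines them weighted by how much data each site saw.

```python
def federated_average(client_updates):
    """Combine per-site model weights into one global model.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats and num_samples is how many local examples produced them.
    Raw training data never leaves the sites; only these pairs are shared.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, n in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


if __name__ == "__main__":
    # Two hypothetical sites; the second has three times as much data.
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))
```

In this picture, the role of the infrastructure is to run the per-site training jobs and the coordinator, and to carry the weight updates between them over secured networks.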

OpenStack’s Contribution to Powerful and Efficient AI Solutions

OpenStack’s role in developing more powerful and efficient AI solutions extends beyond its infrastructure capabilities. The platform’s open source nature fosters collaboration and innovation within the AI community. Developers can contribute to OpenStack’s AI-related features, leading to the creation of advanced tools and libraries specifically designed for AI workloads.

Moreover, OpenStack’s community-driven approach allows for the rapid adoption of new technologies and best practices in AI. This ensures that OpenStack remains at the forefront of AI innovation, providing organizations with access to cutting-edge tools and solutions.

OpenStack’s evolution in supporting AI workloads is a significant development. It’s opening doors for businesses and organizations to harness the power of AI more effectively. By offering a comprehensive platform for deploying and managing AI applications, OpenStack is paving the way for the next generation of AI innovation. Whether it’s powering advanced machine learning models, analyzing massive datasets, or driving real-time decision-making, OpenStack is poised to play a pivotal role in shaping the future of AI.


Standi Techno News
