DoorDash’s new AI-powered SafeChat tool automatically detects verbal abuse, putting it at the center of the push for safer working conditions for gig economy workers. The technology aims to combat the growing problem of verbal abuse faced by delivery drivers and to create a more respectful and professional environment for everyone involved.

SafeChat uses speech recognition and natural language processing to identify abusive language in real time, analyzing the tone and content of conversations between drivers and customers. The tool is designed to distinguish legitimate communication from abusive language, helping protect drivers from harassment and disrespectful behavior.

DoorDash’s SafeChat: AI-Powered Protection for Delivery Drivers

DoorDash, the popular food delivery platform, has launched SafeChat, a groundbreaking AI-powered tool designed to protect delivery drivers from verbal abuse. This innovative technology represents a significant step forward in addressing the issue of harassment in the gig economy, where drivers are often vulnerable to verbal abuse from customers.

The rise of gig work has created a new wave of challenges for workers, including increased exposure to verbal abuse. Delivery drivers, in particular, are often on the front lines, interacting directly with customers in a variety of situations. This can make them susceptible to verbal harassment, which can have a significant impact on their well-being and job satisfaction.

The Prevalence of Verbal Abuse in the Gig Economy

The prevalence of verbal abuse faced by delivery drivers is a serious concern. Studies have shown that a significant percentage of drivers have experienced verbal abuse during their work. For example, a survey conducted by the National Domestic Workers Alliance found that 40% of gig workers reported experiencing verbal abuse from customers.

This issue is exacerbated by the fact that many delivery drivers are independent contractors, meaning they lack the same protections and support systems as traditional employees. This can make it difficult for them to report or address instances of verbal abuse.

The Role of AI in Safeguarding Drivers

DoorDash’s SafeChat uses AI to automatically detect and flag instances of verbal abuse in real time. The tool uses natural language processing (NLP) to analyze the tone and content of conversations between drivers and customers. When it detects potentially abusive language, it alerts the driver and provides support options, such as the ability to report the incident or end the delivery.
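The detect-and-respond flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `ABUSIVE_TERMS` set, the `screen_message` function, and the option names are hypothetical, and a simple word-list match stands in for SafeChat's actual NLP model, whose internals are not described here.

```python
from dataclasses import dataclass, field

# Hypothetical word list standing in for a trained abuse classifier.
ABUSIVE_TERMS = {"idiot", "moron", "useless"}


@dataclass
class ScreenResult:
    flagged: bool
    # Support options offered to the driver when a message is flagged.
    options: list[str] = field(default_factory=list)


def screen_message(text: str) -> ScreenResult:
    """Flag a customer message and, if flagged, list the driver's support options."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    if words & ABUSIVE_TERMS:
        # Hypothetical option names mirroring the article: report the incident or end the delivery.
        return ScreenResult(flagged=True, options=["report_incident", "end_delivery"])
    return ScreenResult(flagged=False)


print(screen_message("You useless idiot, where is my food?"))
print(screen_message("Running late? No worries, thanks!"))
```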

SafeChat’s AI-powered approach offers several advantages:

- Real-time detection: SafeChat can identify abusive language as it occurs, providing immediate support to drivers.

- Consistent analysis: AI algorithms apply the same criteria to every conversation, which can make assessments more consistent than case-by-case human judgment, although models can still inherit biases from their training data.

- Scalability: The AI-powered system can be deployed across a large number of drivers, ensuring consistent protection.

SafeChat is a promising development in the fight against verbal abuse in the gig economy. By leveraging AI technology, DoorDash is taking a proactive approach to protecting its drivers and creating a safer working environment.

How SafeChat Works

SafeChat uses AI to protect DoorDash drivers from verbal abuse. The system analyzes conversations in real time, identifying and flagging instances of inappropriate language.

Speech Recognition and Natural Language Processing

SafeChat utilizes sophisticated speech recognition algorithms to transcribe audio from conversations between drivers and customers. This transcribed text is then analyzed by natural language processing (NLP) models, which are trained to recognize patterns and nuances in language. These NLP models are designed to understand the context and intent behind words, allowing them to differentiate between legitimate communication and abusive language.
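The two-stage design described above (transcribe the audio, then analyze the text) can be expressed as a small Python pipeline. This is a sketch, not SafeChat's implementation: `analyze_audio`, `fake_transcribe`, and `keyword_classifier` are hypothetical names, and the toy stages exist only so the example runs. Keeping each stage behind a plain function type means a real speech-to-text engine or NLP model could be swapped in without changing the pipeline itself.

```python
from typing import Callable

# Hypothetical stage signatures: audio bytes in, transcript out; transcript in, verdict out.
Transcriber = Callable[[bytes], str]
Classifier = Callable[[str], bool]


def analyze_audio(audio: bytes, transcribe: Transcriber, is_abusive: Classifier) -> tuple[str, bool]:
    """Run speech recognition, then NLP classification, on one audio clip."""
    transcript = transcribe(audio)              # stage 1: speech-to-text
    return transcript, is_abusive(transcript)   # stage 2: analyze the transcript


# Toy stand-ins so the sketch runs: the "audio" is just UTF-8 text,
# and the classifier is a single keyword check.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def keyword_classifier(text: str) -> bool:
    return "idiot" in text.lower()


print(analyze_audio(b"Where are you, you idiot?", fake_transcribe, keyword_classifier))
```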

Identifying Verbal Abuse

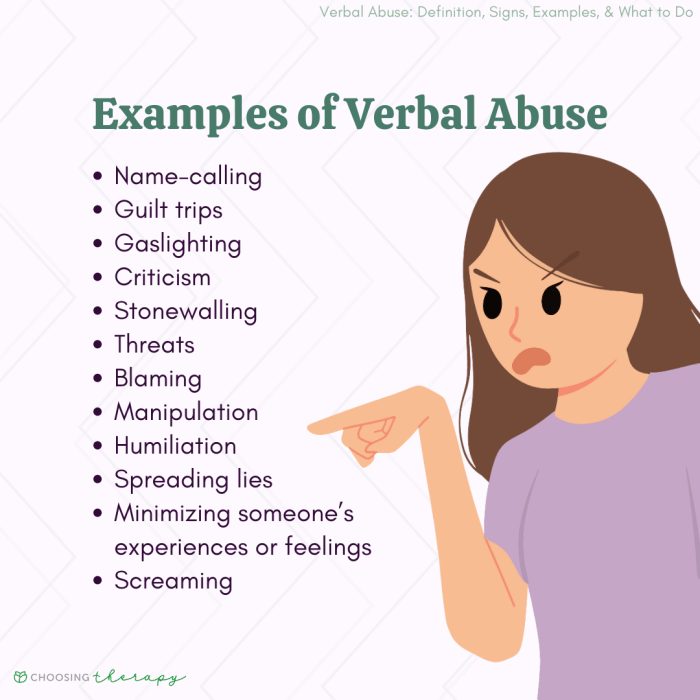

The NLP models within SafeChat are trained on a vast dataset of conversations, including examples of both polite and abusive language. This extensive training allows the AI to identify specific words, phrases, and even tone of voice that indicate verbal abuse.

For example, SafeChat can recognize the use of profanity, insults, threats, and other forms of offensive language.
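Recognizing categories such as insults, threats, and profanity can be illustrated with simple pattern matching. The sketch below is a deliberately basic stand-in for the trained models described above; the `CATEGORY_PATTERNS` table, its phrases, and the `categorize` function are hypothetical examples. Word boundaries (`\b`) keep a term like "hell" from matching inside "hello".

```python
import re

# Illustrative patterns only; a real system would rely on a trained model,
# not a hand-written list.
CATEGORY_PATTERNS = {
    "insult": re.compile(r"\b(idiot|moron|stupid|useless)\b", re.IGNORECASE),
    "threat": re.compile(r"\b(watch your back|you'll regret this)\b", re.IGNORECASE),
    "profanity": re.compile(r"\b(damn|hell)\b", re.IGNORECASE),
}


def categorize(text: str) -> set[str]:
    """Return every abuse category whose pattern appears in the message."""
    return {name for name, pattern in CATEGORY_PATTERNS.items() if pattern.search(text)}


print(sorted(categorize("You moron, watch your back.")))
print(sorted(categorize("My order is running late.")))
```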

Furthermore, the system takes into account the context of the conversation. This means that SafeChat can distinguish between a customer expressing frustration about a delayed order and a customer using abusive language.
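One simple way to approximate the distinction between frustration and abuse is to check whether offensive language is directed at a person rather than at the order. The heuristic below is an assumption for illustration, not SafeChat's documented logic; `classify_context` and its word lists are hypothetical.

```python
import re

# Hypothetical word lists for illustration only.
INSULTS = re.compile(r"\b(idiot|moron|stupid|useless)\b", re.IGNORECASE)
SECOND_PERSON = re.compile(r"\b(you|you're|your)\b", re.IGNORECASE)
FRUSTRATION = re.compile(r"\b(late|cold|wrong|missing|slow)\b", re.IGNORECASE)


def classify_context(text: str) -> str:
    """Label a message as 'abusive', 'frustrated', or 'neutral'."""
    # Insults aimed at a person ("you ...") count as abuse.
    if INSULTS.search(text) and SECOND_PERSON.search(text):
        return "abusive"
    # Complaints about the order itself are treated as legitimate frustration.
    if FRUSTRATION.search(text):
        return "frustrated"
    return "neutral"


print(classify_context("My order is an hour late and the fries are cold"))
print(classify_context("You are a useless idiot"))
```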

Differentiating Legitimate Communication from Abusive Language

SafeChat uses a multi-layered approach to ensure accuracy in identifying verbal abuse. This includes:

- Analyzing the frequency and intensity of offensive language

- Considering the context of the conversation

- Evaluating the overall tone and sentiment of the interaction
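The three layers listed above could be combined into a single score, as in the Python sketch below. Everything here is hypothetical: the `SEVERITY` weights, the 0.5/0.3/0.2 blend, and the assumption that a separate model supplies a sentiment value between -1.0 and 1.0. Summing per-occurrence severities captures both frequency (repeated terms add up) and intensity (harsher terms weigh more).

```python
from dataclasses import dataclass

# Hypothetical per-term severity weights (intensity); values are illustrative only.
SEVERITY = {"stupid": 0.4, "useless": 0.5, "idiot": 0.7}


def offensive_score(text: str) -> float:
    """Frequency x intensity: sum the severity of every occurrence, capped at 1.0."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return min(1.0, sum(SEVERITY.get(w, 0.0) for w in words))


@dataclass
class Signals:
    offensive: float          # output of offensive_score()
    directed_at_person: bool  # context: is the language aimed at the driver?
    sentiment: float          # assumed upstream value: -1.0 (hostile) to 1.0 (friendly)


def abuse_score(s: Signals) -> float:
    """Blend the three layers into one score in [0, 1] using hypothetical weights."""
    context = 1.0 if s.directed_at_person else 0.0
    negativity = max(0.0, -s.sentiment)  # only negative tone adds to the score
    return 0.5 * s.offensive + 0.3 * context + 0.2 * negativity


abusive = Signals(offensive_score("You useless idiot!"), True, -0.8)
complaint = Signals(offensive_score("The order is late again."), False, -0.6)
print(round(abuse_score(abusive), 2), round(abuse_score(complaint), 2))
```

A threshold on this score (for example, flagging anything above 0.5) would separate these two messages; in practice, both the weights and the threshold would need to be learned and validated on labeled data.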

By combining frequency and intensity, context, and overall tone, SafeChat aims to differentiate legitimate communication from abusive language.

Benefits of SafeChat

SafeChat is more than just a tool; it’s a lifeline for DoorDash drivers, empowering them to feel safer and more secure on the road. By leveraging AI technology to identify and address verbal abuse, SafeChat fosters a more respectful and professional work environment, ultimately contributing to the well-being of drivers.

Enhanced Driver Safety and Well-being

SafeChat acts as a shield against verbal abuse. By automatically detecting and flagging instances of abuse, it empowers drivers to take immediate action, whether that means reporting the incident or simply disengaging from the interaction. This proactive approach can reduce the risk of situations escalating and of potential harm to drivers.

Promoting a Respectful and Professional Work Environment

SafeChat helps cultivate a culture of respect and professionalism within the DoorDash community. By establishing clear boundaries and consequences for abusive behavior, SafeChat discourages such conduct and encourages customers and drivers to treat each other with courtesy and dignity. This creates a more positive and supportive work environment and fosters mutual respect between drivers and the people they serve.

Reducing Stress and Anxiety

The constant threat of verbal abuse can be a significant source of stress and anxiety for delivery drivers. SafeChat helps alleviate this burden by providing drivers with a sense of security and control. Knowing that their safety is prioritized and that abusive behavior will not be tolerated can significantly reduce stress levels and promote a more positive and fulfilling work experience.

Challenges and Future Developments

SafeChat, while a groundbreaking initiative, faces inherent challenges and opportunities for future advancement. Addressing these aspects will be crucial for its effectiveness and long-term success.

Challenges in Detecting Subtle Forms of Verbal Abuse

Identifying and addressing subtle forms of verbal abuse presents a significant challenge for SafeChat. While AI can effectively detect explicit threats and insults, nuanced forms of verbal abuse, such as sarcasm, passive-aggressive comments, and manipulation, are more difficult to discern.

- Contextual Understanding: AI models need to be trained on a vast dataset of diverse communication styles and contexts to understand subtle nuances and recognize potentially abusive language within specific situations.

- Cultural Sensitivity: Verbal abuse can vary across cultures, making it challenging to develop a universally applicable system. SafeChat needs to be sensitive to different cultural norms and expressions.

- Intention vs. Impact: Intent is difficult to infer from text alone, and a comment can be hurtful even if it wasn’t meant to be. SafeChat must therefore consider the impact of language, not just the apparent intention behind it.

Ethical Implications of AI-Powered Communication Monitoring

The use of AI in monitoring and regulating communication raises ethical concerns. Striking a balance between safety and privacy is essential.

- Privacy Concerns: Monitoring communication raises concerns about privacy violations. Ensuring data security and transparency is paramount.

- Bias in AI: AI models can inherit biases from the data they are trained on. This can lead to unfair or discriminatory outcomes. Addressing bias in AI development is crucial.

- Transparency and Accountability: Clear guidelines and mechanisms for transparency and accountability are needed to ensure responsible use of AI in communication monitoring.

Future Advancements for SafeChat

SafeChat has the potential to evolve and improve with ongoing research and development.

- Enhanced AI Capabilities: Developing more sophisticated AI models with improved natural language processing and contextual understanding will enhance SafeChat’s ability to detect subtle forms of verbal abuse.

- User Feedback and Training: Collecting user feedback and continuously training AI models with real-world data will improve accuracy and address emerging challenges.

- Human-AI Collaboration: Integrating human oversight and review into the system can provide a more nuanced and ethical approach to detecting and addressing verbal abuse.
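One common pattern for combining human oversight with feedback-driven retraining is confidence-based routing: the model acts alone only when it is confident, sends borderline cases to a person, and stores the reviewer's decision as a new training label. The sketch below assumes the model outputs a probability between 0 and 1; the thresholds, `route`, and `record_review` are hypothetical.

```python
# Hypothetical thresholds on a model's estimated probability that a message is abusive.
AUTO_FLAG_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5

# Reviewer decisions collected as (message, is_abusive) pairs for later retraining.
training_examples: list[tuple[str, bool]] = []


def route(score: float) -> str:
    """Decide what happens to a message given the model's abuse probability."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_FLAG_THRESHOLD:
        return "auto_flag"      # confident enough to alert the driver immediately
    if score >= REVIEW_THRESHOLD:
        return "human_review"   # borderline: a person makes the call
    return "allow"


def record_review(message: str, reviewer_says_abusive: bool) -> None:
    """Store a human decision so it can be used as a labeled training example."""
    training_examples.append((message, reviewer_says_abusive))


print(route(0.95), route(0.6), route(0.1))
record_review("Nice job, genius.", True)  # sarcasm the model was unsure about
print(training_examples)
```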

Impact on the Gig Economy

SafeChat, with its AI-powered abuse detection, represents a significant development in the gig economy, particularly for delivery drivers. It has the potential to reshape the landscape of worker safety and rights in this rapidly growing sector.

The Potential for Widespread Adoption

The success of SafeChat could inspire other gig economy platforms to adopt similar AI-powered solutions. The growing demand for safety and security in the gig economy, coupled with the increasing availability of advanced AI technologies, makes this a likely scenario. Platforms like Uber, Lyft, and TaskRabbit might implement similar features to protect their drivers and service providers. This could lead to a more standardized approach to worker safety across the gig economy, potentially improving conditions for all gig workers.

Impact on Worker Rights and Workplace Safety

SafeChat has the potential to significantly impact worker rights and workplace safety in the gig economy. By automatically detecting and reporting verbal abuse, the tool can help drivers feel safer and more confident on the job. This could reduce stress and anxiety, potentially improving overall job satisfaction and retention rates. However, SafeChat’s effectiveness depends on how accurately it identifies abuse. False positives could lead to unfair consequences for whoever is wrongly flagged, while false negatives would leave drivers unprotected, highlighting the need for careful development and implementation of such technologies.
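The trade-off between false positives and false negatives is commonly measured with precision (the share of flagged messages that were truly abusive) and recall (the share of abusive messages that were caught). The helper below is a generic sketch of those standard definitions, not a metric DoorDash has published; the sample labels are made up for illustration.

```python
def precision_recall(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Compute precision and recall for binary abuse flags."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    true_pos = sum(p and a for p, a in zip(predicted, actual))
    false_pos = sum(p and not a for p, a in zip(predicted, actual))  # unfair flags
    false_neg = sum(a and not p for p, a in zip(predicted, actual))  # missed abuse
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Made-up example: three of five messages are flagged, two of them correctly,
# and one abusive message is missed.
predicted = [True, True, True, False, False]
actual = [True, True, False, True, False]
print(precision_recall(predicted, actual))
```

Low precision means people are being flagged unfairly; low recall means abuse is slipping through.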

The Future of Workplace Safety

The development of AI-powered tools like SafeChat signifies a shift towards a more proactive approach to workplace safety in the gig economy. These tools can play a vital role in creating a safer and more equitable environment for gig workers. However, it is crucial to ensure that these technologies are implemented responsibly and ethically, addressing potential biases and ensuring worker rights are not compromised.

SafeChat represents a significant step towards a safer and more respectful gig economy, empowering drivers with a tool that protects them from verbal abuse. As the technology evolves, we can expect to see further advancements in AI-powered tools designed to enhance workplace safety and create a more equitable environment for all gig workers.

DoorDash’s new AI-powered SafeChat tool automatically detects and flags verbal abuse, a step in the right direction for creating a safer work environment for delivery drivers. By using technology to protect its workers, DoorDash is setting a new standard for the gig economy and working toward a more respectful and secure experience for everyone involved.

Standi Techno News
