Meta’s decision to restrict teen Instagram and Facebook accounts from seeing content about self-harm and eating disorders is a bold move and a necessary step in a world where social media platforms are often the first point of contact for teenagers struggling with these issues. The prevalence of self-harm and eating disorder content on these platforms is undeniable, and its potential impact on vulnerable young minds is a serious concern. Meta’s approach, while well-intentioned, raises important questions about the delicate balance between protecting teens and ensuring freedom of expression.

Sophisticated algorithms and content moderation tools are essential for identifying and filtering harmful content. However, the effectiveness of these measures remains a subject of debate, with concerns about over-censorship and about the impact on mental health resources and support groups. This raises the question: can technology truly solve a problem as complex as mental health, or is it just a band-aid on a much larger problem?

The Problem

Social media platforms, particularly Instagram and Facebook, have become increasingly prevalent in teenagers’ lives. While these platforms offer opportunities for connection and information sharing, they also pose potential risks, especially concerning exposure to content related to self-harm and eating disorders. This content can be detrimental to teenagers’ mental health and well-being, potentially triggering harmful behaviors or exacerbating existing issues.

Prevalence of Self-Harm and Eating Disorder Content

The presence of self-harm and eating disorder content on social media platforms is a growing concern. Studies have shown that a significant number of teenagers are exposed to such content through various means, including:

- Hashtags: Hashtags related to self-harm and eating disorders are frequently used on platforms like Instagram and Facebook, making it easier for users to find and access this content.

- Pro-Ana and Pro-Mia Communities: Online communities promoting anorexia and bulimia, known as “pro-ana” and “pro-mia,” respectively, provide platforms for individuals to share tips, advice, and encouragement for disordered eating behaviors.

- Visual Content: Images and videos depicting self-harm or promoting eating disorders are readily available on social media platforms, often shared through personal profiles or group pages.

Negative Impact of Exposure to Self-Harm and Eating Disorder Content

Exposure to self-harm and eating disorder content on social media can have severe consequences for teenagers, including:

- Triggering Harmful Behaviors: Viewing content related to self-harm or eating disorders can trigger harmful behaviors in individuals who are already struggling with these issues or who are at risk of developing them.

- Normalizing Disordered Behaviors: The constant exposure to content promoting self-harm or disordered eating can create a sense of normalcy around these behaviors, making them seem less harmful or taboo.

- Increased Risk of Developing Eating Disorders: Research suggests that exposure to pro-ana or pro-mia content can increase the risk of developing eating disorders among teenagers.

- Mental Health Deterioration: Exposure to self-harm and eating disorder content can contribute to feelings of anxiety, depression, and low self-esteem, potentially exacerbating existing mental health conditions.

Amplification of Self-Harm and Eating Disorder Issues Through Social Media Algorithms and Trends

Social media algorithms and trends can exacerbate the problem of self-harm and eating disorder content on platforms like Instagram and Facebook.

- Recommendation Algorithms: Social media algorithms often recommend content based on user engagement and past interactions. This can lead to teenagers being exposed to more self-harm and eating disorder content, even if they haven’t actively searched for it.

- Trending Hashtags: Hashtags related to self-harm and eating disorders can quickly become popular trends on social media, leading to widespread exposure to this content. This can make it challenging for platforms to moderate and control the spread of such content.

- Social Proof and Peer Pressure: The popularity of certain hashtags or accounts related to self-harm and eating disorders can create a sense of social proof, making these behaviors seem more acceptable or desirable to teenagers.

Meta’s Approach

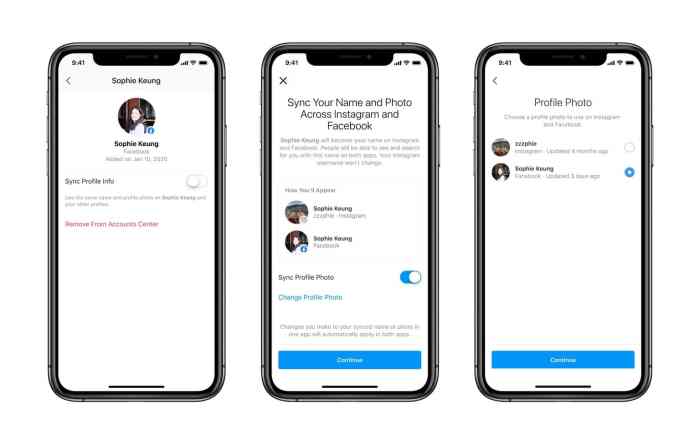

Meta is taking a multi-pronged approach to protect teenagers from harmful content related to self-harm and eating disorders on Instagram and Facebook. Its strategy combines proactive content filtering, user reporting mechanisms, and partnerships with mental health organizations.

Content Filtering and Detection

Meta uses advanced algorithms and machine learning models to identify and remove content that promotes or glorifies self-harm and eating disorders. These algorithms are trained on a massive dataset of flagged content, enabling them to recognize patterns and keywords associated with harmful topics. The technology analyzes text, images, and videos, identifying potentially harmful content based on factors like:

- Keywords and phrases: The algorithms are trained to detect specific words and phrases related to self-harm and eating disorders. For example, they might flag content containing terms like “cutting,” “suicide,” “anorexia,” or “bulimia.”

- Image recognition: Meta’s technology can analyze images to identify content depicting self-harm or promoting unhealthy body images. This includes identifying objects like razors, scars, or distorted body images.

- Contextual analysis: The algorithms take into account the surrounding text and images to determine the overall context of the content. This helps differentiate between content that is simply discussing these topics and content that promotes or glorifies them.
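
The keyword and contextual checks described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the term lists, the `classify_post` function, and its three labels are hypothetical and are not Meta’s actual system, which the article describes as relying on trained machine learning models rather than fixed word lists.

```python
import re

# Hypothetical term list, taken from the example terms in the text above.
SENSITIVE_TERMS = {"cutting", "suicide", "anorexia", "bulimia"}

# Hypothetical context cues suggesting a post is about recovery or support.
SUPPORT_CUES = {"recovery", "helpline", "support", "therapy", "awareness"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def classify_post(text: str) -> str:
    """Return 'allow', 'review', or 'restrict' (illustrative labels only)."""
    words = tokenize(text)
    if not words & SENSITIVE_TERMS:
        return "allow"      # no sensitive keyword found
    if words & SUPPORT_CUES:
        return "review"     # sensitive topic, but the context looks supportive
    return "restrict"       # sensitive keyword with no supportive context
```

In this sketch, `classify_post("Cutting vegetables for dinner")` returns `"restrict"` because the word “cutting” matches and nothing in the sentence signals a supportive context. That is a simple example of how keyword matching can flag harmless posts, and why contextual analysis matters.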

Effectiveness of Measures

While Meta’s efforts to restrict access to harmful content are commendable, their effectiveness is a subject of ongoing debate. Critics argue that algorithms are not always accurate and can mistakenly flag harmless content, leading to censorship and limiting freedom of expression. Additionally, the rapid evolution of online content and the creativity of users in circumventing filters present a constant challenge for platforms like Meta.

Challenges and Considerations

The decision to restrict access to potentially harmful content on platforms like Instagram and Facebook raises complex challenges and ethical considerations. While the intention is to protect vulnerable teenagers, striking a balance between safeguarding their well-being and preserving freedom of expression is a delicate task.

Ethical Considerations in Content Moderation

Content moderation, especially when dealing with sensitive topics like self-harm and eating disorders, presents a significant ethical dilemma. On one hand, platforms have a responsibility to protect their users, particularly young and vulnerable individuals, from potentially harmful content. On the other hand, restricting access to such content raises concerns about censorship and the potential to stifle open discussions about important issues.

The line between protecting users and censoring free speech is a delicate one, and it’s crucial to find a balance that respects both individual rights and the well-being of the community.

- Potential for Over-Censorship: Restricting access to content based on keywords or algorithms can lead to over-censorship, where harmless or even helpful content is mistakenly flagged and removed. This could limit access to vital resources and support networks for those struggling with mental health issues.

- Impact on Freedom of Expression: Censorship, even with good intentions, can be seen as a violation of freedom of expression. Restricting access to content related to self-harm and eating disorders could prevent individuals from sharing their experiences, seeking help, or engaging in open dialogue about these issues.

- Ethical Responsibility of Platforms: Platforms like Meta have a responsibility to create a safe and supportive environment for their users, particularly young people. However, they also have a responsibility to uphold principles of free speech and avoid unnecessary censorship. Balancing these responsibilities requires careful consideration and a nuanced approach to content moderation.

Alternatives and Best Practices

While restricting access to harmful content is crucial, it’s essential to explore alternative approaches and empower teenagers with the tools to navigate online spaces safely. This section outlines alternative strategies and best practices for teenagers to navigate online content responsibly.

Alternative Approaches to Addressing Harmful Content

Alternative approaches to addressing harmful content on social media platforms focus on prevention, early intervention, and fostering a supportive online environment.

- Proactive Content Moderation: Using advanced algorithms and human moderators to identify and remove harmful content before it reaches teenagers. This can involve natural language processing for text and image analysis for photos and videos to detect patterns related to self-harm and eating disorders.

- Community-Based Approaches: Empowering users to report harmful content and participate in discussions about online safety. Platforms can provide resources and tools to help users identify and report inappropriate content. For instance, Instagram could implement a “report harmful content” button that specifically targets self-harm and eating disorder content.

- Educational Initiatives: Providing teenagers with resources and education on online safety, critical thinking, and digital literacy. This can include online courses, workshops, and interactive materials that teach teenagers how to identify and avoid harmful content, as well as how to navigate online interactions responsibly.

- Collaboration with Mental Health Organizations: Partnering with mental health organizations to provide support and resources for teenagers struggling with self-harm or eating disorders. This can include integrating mental health resources directly into social media platforms, such as links to crisis hotlines or online therapy services.

Teenagers can adopt a range of best practices to navigate online content safely and responsibly.

- Be Mindful of What You Consume: Encourage teenagers to be conscious of the content they are exposed to and to actively seek out positive and uplifting content. They should be aware of the potential impact of negative content on their mental health.

- Critical Thinking: Teach teenagers to critically evaluate online content, including images, videos, and text, and to question the source and reliability of information. This includes being skeptical of content that promotes unrealistic beauty standards or encourages unhealthy behaviors.

- Limit Exposure to Triggering Content: Advise teenagers to avoid content that triggers negative thoughts or feelings related to self-harm or eating disorders. This may involve unfollowing accounts that post such content or using platform settings to block specific keywords or hashtags.

- Seek Support When Needed: Encourage teenagers to reach out to trusted adults, friends, or mental health professionals if they are struggling with self-harm or eating disorder thoughts or behaviors. Remind them that they are not alone and that help is available.

- Focus on Positive Online Experiences: Encourage teenagers to engage with online content that promotes self-love, body positivity, and mental well-being. They can follow accounts that share positive messages, engage in supportive online communities, and participate in activities that foster a healthy online environment.

Resources and Tools for Teenagers

Teenagers can access a range of resources and tools to cope with mental health challenges and navigate online spaces safely.

- Crisis Hotlines: Encourage teenagers to use crisis hotlines, such as the 988 Suicide & Crisis Lifeline (call or text 988; formerly the National Suicide Prevention Lifeline) or the Crisis Text Line (text HOME to 741741), for immediate support and assistance.

- Mental Health Apps: Several mental health apps, such as Calm, Headspace, and Talkspace, offer guided meditation, mindfulness exercises, and access to therapists. These apps can provide teenagers with tools to manage stress, anxiety, and other mental health concerns.

- Online Support Groups: Encourage teenagers to connect with online support groups for individuals struggling with self-harm or eating disorders. These groups can provide a sense of community, shared experiences, and support from others who understand their struggles.

- Mental Health Websites: Websites such as the National Eating Disorders Association (NEDA) and the American Foundation for Suicide Prevention (AFSP) provide comprehensive information, resources, and support for individuals struggling with mental health challenges.

Meta’s efforts to restrict access to harmful content are a step in the right direction, but they are just one piece of a much larger puzzle. Open communication, parental involvement, and comprehensive mental health support are all crucial in creating a safer online environment for teenagers. It’s a responsibility we all share, and one that demands ongoing dialogue and collaborative action.

Standi Techno News
