Largest Text-to-Speech AI Model Yet Shows Emergent Abilities

Imagine machines that speak with the nuance and emotion of a human voice, adapting their delivery to convey a wide range of feelings. This is no longer science fiction: a new generation of large text-to-speech (TTS) AI models is moving quickly in that direction. These models do more than mimic the sound of human speech; they are beginning to capture how people use pacing, emphasis, and tone to communicate.

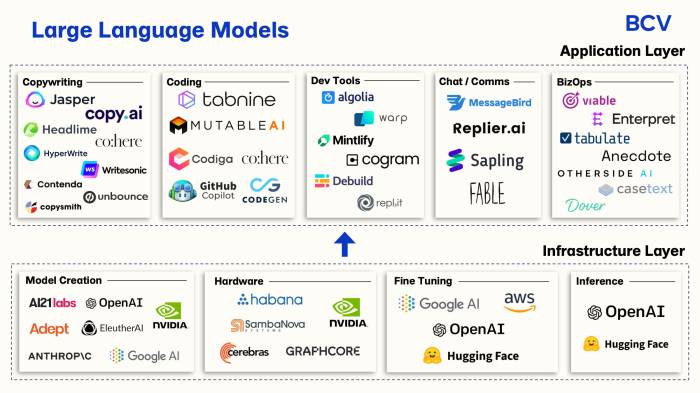

The arrival of these large models is reshaping text-to-speech and opening up new possibilities across many industries. From virtual assistants that sound more human to educational tools that engage students in new ways, their potential impact is considerable. As these models evolve, they are not just making machines sound more human; they are changing how we interact with technology, and perhaps how we understand ourselves.

The Rise of Large Text-to-Speech AI Models

The world of text-to-speech (TTS) is undergoing a revolution, fueled by the rapid advancements in artificial intelligence (AI). We’re witnessing the emergence of larger and more sophisticated TTS models, pushing the boundaries of what’s possible in synthetic speech generation. These models are not only delivering more natural-sounding voices but also unlocking new levels of expressiveness and versatility.

Benefits of Large Text-to-Speech Models

The use of large models in text-to-speech offers several advantages:

- Enhanced Naturalness: Larger models are trained on massive datasets, enabling them to capture the nuances and complexities of human speech. This leads to more natural-sounding voices that are closer to human speech in terms of intonation, rhythm, and pronunciation.

- Increased Expressiveness: Large models can convey a wider range of emotions and styles. They can express happiness, sadness, anger, and other emotions in a more convincing manner, making the synthetic speech sound more engaging and impactful.

- Greater Versatility: Larger models can be adapted to different languages, accents, and voice styles. This flexibility allows developers to create custom voices that meet specific needs and preferences.

Prominent Large Text-to-Speech Models

Several prominent large text-to-speech models are making waves in the industry:

- Google’s WaveNet: Developed by DeepMind, WaveNet is a deep autoregressive neural network that generates raw audio waveforms directly, one sample at a time, producing remarkably realistic and expressive voices. It has been used to create voices for Google Assistant and Google Cloud Text-to-Speech.

- Microsoft’s VALL-E: VALL-E treats text-to-speech as a language-modeling problem: a transformer predicts discrete audio-codec tokens from a text prompt. Given only a three-second recording of an unseen speaker, it can imitate that speaker’s voice while preserving the emotion and acoustic environment of the recording.

- Amazon’s Polly: Polly is a cloud text-to-speech service with a wide selection of voices, languages, and features. It’s used in various applications, including e-commerce, education, and entertainment.
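To make WaveNet’s approach more concrete: as described in DeepMind’s 2016 paper, WaveNet treats each audio sample as a choice among 256 values obtained with μ-law companding, and it sees past context through stacks of dilated causal convolutions whose dilation doubles from 1 up to 512. The sketch below illustrates those two ingredients in plain Python. It is a simplified illustration, not Google’s implementation, and the function names are our own.

```python
import math

def mu_law_encode(x: float, mu: int = 255) -> int:
    """Compress a sample in [-1, 1] with mu-law, then quantize it to mu + 1 classes."""
    x = max(-1.0, min(1.0, x))  # clip to the valid amplitude range
    compressed = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    # Map the compressed value from [-1, 1] onto the integer classes 0..mu.
    return int(round((compressed + 1) / 2 * mu))

def mu_law_decode(q: int, mu: int = 255) -> float:
    """Invert mu_law_encode, up to quantization error."""
    compressed = 2 * q / mu - 1
    return math.copysign(math.expm1(abs(compressed) * math.log1p(mu)) / mu, compressed)

def receptive_field(dilations: list[int], kernel_size: int = 2) -> int:
    """Number of past samples a stack of dilated causal convolutions can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

if __name__ == "__main__":
    print(mu_law_encode(0.5), mu_law_decode(mu_law_encode(0.5)))
    print(receptive_field([2 ** i for i in range(10)]))  # dilations 1, 2, ..., 512
```

With dilations 1, 2, 4, ..., 512 and a kernel size of 2, one stack sees 1,024 samples, or 64 ms of audio at a 16 kHz sampling rate; WaveNet stacks several such blocks to look further back.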

Emergent Abilities in Text-to-Speech AI

Imagine a world where AI voices not only speak your words but also capture the nuances of your emotions, adapt to different situations, and even sound like your favorite celebrity. This isn’t science fiction; it’s the reality of the latest advancements in text-to-speech (TTS) AI. As these models grow larger and more complex, they are exhibiting “emergent abilities”: capabilities that were not explicitly trained for and that appear only once a model passes a certain scale, arising from its training data and architecture rather than from hand-written rules.

Improved Prosody and Natural Speech Rhythm

Large text-to-speech models are getting better at reproducing the natural flow and rhythm of human speech, a quality known as prosody. Rather than following hand-written rules, these models learn from the surrounding text which words to stress and where to pause, and they adjust pacing, intonation, and emphasis accordingly. The result is speech that sounds more natural and is easier for listeners to follow and connect with.
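Large models infer prosody from context on their own. For contrast, conventional TTS engines usually need it spelled out with SSML (Speech Synthesis Markup Language), the W3C standard for marking up speaking rate, pitch, emphasis, and pauses. The helper below is a hypothetical sketch that wraps a sentence in SSML and stresses one key phrase; which tags and values a given engine honors varies by vendor and voice.

```python
from typing import Optional
from xml.sax.saxutils import escape

def to_ssml(sentence: str, key_phrase: Optional[str] = None,
            rate: str = "medium", pitch: str = "+0%") -> str:
    """Hypothetical helper: wrap one sentence in SSML prosody, stress a phrase, add a pause."""
    body = escape(sentence)  # escape &, <, > so the text is valid XML
    if key_phrase:
        marked = escape(key_phrase)
        # Wrap only the first occurrence of the key phrase in an emphasis tag.
        body = body.replace(marked, f'<emphasis level="strong">{marked}</emphasis>', 1)
    return (
        f'<speak><prosody rate="{rate}" pitch="{pitch}">{body}</prosody>'
        '<break time="300ms"/></speak>'
    )

if __name__ == "__main__":
    # Prints: <speak><prosody rate="slow" pitch="+0%">Turn <emphasis level="strong">left</emphasis> at the light.</prosody><break time="300ms"/></speak>
    print(to_ssml("Turn left at the light.", key_phrase="left", rate="slow"))
```

Every prosodic decision here is made by hand; the emergent ability described above is a model making comparable choices without such markup.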

Nuanced Emotional Expression

One of the most impressive emergent abilities is emotional expression. By inferring the emotional content of the text, these models can adjust the pitch, tone, and speed of the voice to convey subtle shades of happiness, sadness, anger, or surprise. The result is AI voices that can evoke a wide range of emotions in listeners.
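Earlier rule-based systems approximated emotion by shifting prosody parameters according to an emotion label, which is roughly what large models now learn to do implicitly. The sketch below shows that older, explicit approach. It is purely illustrative: the `Prosody` type, the emotion labels, and every number in the table are hypothetical choices, not values taken from any real model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Prosody:
    pitch_shift_pct: float  # relative change in average pitch, in percent
    rate_mult: float        # speaking-rate multiplier (1.0 = neutral)

# Hypothetical, hand-picked values for illustration only.
EMOTION_PROSODY = {
    "neutral":  Prosody(0.0, 1.0),
    "happy":    Prosody(+15.0, 1.1),
    "sad":      Prosody(-10.0, 0.85),
    "angry":    Prosody(+5.0, 1.15),
    "surprise": Prosody(+25.0, 1.05),
}

def apply_emotion(base_f0_hz: float, base_wpm: float, emotion: str) -> tuple[float, float]:
    """Return (target pitch in Hz, target words per minute); unknown labels fall back to neutral."""
    p = EMOTION_PROSODY.get(emotion, EMOTION_PROSODY["neutral"])
    return base_f0_hz * (1 + p.pitch_shift_pct / 100), base_wpm * p.rate_mult

if __name__ == "__main__":
    print(apply_emotion(200.0, 150.0, "sad"))
```

A fixed table like this treats every sad sentence the same way; the advantage of a large model is that it can vary these adjustments with the meaning of each sentence.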

Adaptive Speech Styles

Another significant development is the ability of TTS models to adapt their speech style to different contexts. For example, an AI voice can switch between a formal, professional tone for a business presentation and a more casual, conversational tone for a friendly chat. This adaptability allows for a wider range of applications, from creating realistic virtual assistants to providing personalized voice experiences for different users.
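One published way to condition a TTS model on speaking style is Global Style Tokens (GST), introduced for Tacotron by Wang et al. in 2018: the model learns a small bank of style embeddings and blends them with attention. This article does not say that the models named here use GST. The sketch below is a simplified, single-head version in plain Python, and the two-dimensional “formal” and “casual” tokens are hypothetical stand-ins for learned vectors.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def style_embedding(query: list[float], tokens: list[list[float]]) -> list[float]:
    """Single-head scaled dot-product attention over a bank of style tokens.

    In GST the tokens are learned during training and the query comes from a
    reference encoder that summarizes an example recording.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * t for q, t in zip(query, tok)) / scale for tok in tokens]
    weights = softmax(scores)
    dim = len(tokens[0])
    # The style embedding is the attention-weighted sum of the token vectors.
    return [sum(w * tok[i] for w, tok in zip(weights, tokens)) for i in range(dim)]

# Hypothetical 2-D tokens standing in for learned "formal" and "casual" styles.
TOKENS = [[1.0, 0.0], [0.0, 1.0]]

if __name__ == "__main__":
    print(style_embedding([10.0, 0.0], TOKENS))  # leans heavily toward "formal"
    print(style_embedding([1.0, 1.0], TOKENS))   # an even blend of both styles
```

The resulting vector conditions the synthesizer, so shifting the attention weights shifts the speaking style. The GST paper also shows steering style at inference time by choosing token weights directly instead of computing them from reference audio.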

Impact of Emergent Abilities on Various Applications

The emergence of advanced text-to-speech (TTS) models with newfound abilities has the potential to revolutionize various applications that rely on synthesized speech. These capabilities, including more natural-sounding voices, improved emotional expression, and even the ability to understand context and adapt speech accordingly, can significantly enhance user experience and open up new possibilities across different domains.

Virtual Assistants

Virtual assistants are becoming increasingly prevalent in our daily lives, providing assistance with tasks like scheduling appointments, setting reminders, and answering questions. Emergent abilities in TTS can significantly enhance the user experience with virtual assistants.

- More natural-sounding voices can make interactions feel more human and engaging, fostering a greater sense of connection with the assistant.

- The ability to express emotion through speech can make virtual assistants sound more empathetic, letting them match their tone to the situation, for example speaking calmly when delivering bad news.

- Contextual awareness can enable virtual assistants to provide more personalized and relevant responses, tailoring their speech to the specific situation and user preferences.

These advancements can lead to more intuitive and engaging interactions, making virtual assistants even more valuable tools in our daily lives.

Educational Tools

TTS technology is widely used in educational tools, such as audiobooks, reading software, and interactive learning platforms. Emergent abilities can further enhance the learning experience in various ways.

- More expressive voices can make learning materials more engaging and enjoyable, especially for younger learners.

- The ability to adjust speech rate and volume can cater to individual learning styles and preferences, allowing students to learn at their own pace.

- Contextual awareness can enable TTS systems to highlight important information or provide additional explanations, making learning more effective and efficient.

By leveraging these capabilities, TTS can create more accessible and engaging learning environments, fostering a love of learning in students of all ages.

Accessibility Software

TTS plays a crucial role in accessibility software, enabling individuals with visual impairments or reading difficulties to access information and engage with technology. Emergent abilities can significantly improve the accessibility and inclusivity of these tools.

- More natural-sounding voices can make reading more enjoyable and less tiring, improving user experience and reducing fatigue.

- The ability to express emotions can make reading more engaging and expressive, enhancing comprehension and enjoyment.

- Contextual awareness can help TTS systems choose more accurate pronunciations, both for complex or unfamiliar words and for words whose pronunciation depends on context, such as “read” in “I will read” versus “I have read”.

By leveraging these capabilities, TTS can create more accessible and inclusive digital environments, empowering individuals with disabilities to fully participate in society.
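Context-dependent pronunciation is easiest to see with heteronyms, words spelled alike but pronounced differently, such as “read” (/riːd/ or /rɛd/) and “lead” (/liːd/ or /lɛd/). The toy disambiguator below looks for hypothetical cue words near each heteronym. Large models learn these decisions from data rather than from a hand-made cue list, so treat this as an illustration of the problem, not of how any particular model solves it.

```python
import re

# Hypothetical cue words, hand-picked for illustration.
# word: (default pronunciation, alternate pronunciation, cues that select the alternate)
HETERONYMS = {
    "read": ("riːd", "rɛd", {"had", "has", "have", "was", "were", "yesterday"}),
    "lead": ("liːd", "lɛd", {"pipe", "pipes", "paint", "poisoning", "metal"}),
}

def pronounce(sentence: str) -> list[tuple[str, str]]:
    """Return (word, IPA pronunciation) pairs for every known heteronym in the sentence."""
    words = re.findall(r"[a-z']+", sentence.lower())
    result = []
    for i, word in enumerate(words):
        if word in HETERONYMS:
            default, alternate, cues = HETERONYMS[word]
            # Look up to three words to the left and right of the heteronym.
            context = set(words[max(0, i - 3):i] + words[i + 1:i + 4])
            result.append((word, alternate if context & cues else default))
    return result

if __name__ == "__main__":
    print(pronounce("She has read the report."))
    print(pronounce("Old lead pipes are dangerous."))
```

Fixed cue lists break easily (“I read it every day” has no cue and is still ambiguous), which is why learning pronunciation from broad context matters for accessibility tools.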

Entertainment and Gaming

TTS is increasingly being used in entertainment and gaming, providing voiceovers for characters, narrating storylines, and enhancing immersive experiences. Emergent abilities can further elevate the entertainment value of these applications.

- More expressive voices can bring characters to life, making them more relatable and engaging for players and viewers.

- The ability to understand context and adapt speech accordingly can create more dynamic and immersive narratives and make dialogue between characters sound more natural and authentic.

By leveraging these capabilities, TTS can create more engaging and immersive entertainment experiences, further blurring the lines between reality and fiction.

Future Directions and Research Opportunities

Emergent abilities in large text-to-speech AI models have opened a new frontier in the field. Realizing their potential will require focused research in several directions.

Further Development of Emergent Abilities

The continued development of emergent abilities in large text-to-speech models presents a significant opportunity for advancement. This involves enhancing existing capabilities and exploring new avenues for these abilities to manifest.

- Improving Emotional Expression: Enhancing the ability of these models to convey a wider range of emotions with nuance and authenticity. Possible routes include training on larger datasets with more diverse emotional expression and developing techniques that better capture and reproduce the subtle nuances of human emotion.

- Refining Prosodic Control: Further refining the models’ ability to control prosodic features like pitch, intonation, and rhythm. This can involve exploring new techniques for learning and applying prosodic rules, as well as developing more sophisticated methods for analyzing and replicating human prosodic patterns.

- Developing Conversational Abilities: Enabling these models to engage in more natural and engaging conversations. This involves incorporating techniques from natural language processing (NLP) to understand context, interpret intent, and generate appropriate responses.

Integration with Other AI Technologies

The integration of large text-to-speech models with other AI technologies can lead to the development of novel applications and functionalities.

- Computer Vision Integration: Combining text-to-speech with computer vision to create applications that can synthesize speech based on visual input. This can enable applications like generating descriptions of images or videos, or creating audio guides for visually impaired individuals.

- Natural Language Processing Integration: Integrating text-to-speech with NLP to create more sophisticated dialogue systems and virtual assistants. This can involve using NLP techniques to understand user intent, generate contextually relevant responses, and personalize the user experience.

- Machine Learning Integration: Applying advances from the wider machine learning field to the training and performance of text-to-speech models. This can involve new algorithms for learning from data, as well as techniques for transferring knowledge between models, for example distilling a large model into a smaller, faster one.
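The computer-vision integration described in this list amounts to chaining two models: an image captioner produces text, and a TTS engine speaks it. The sketch below shows that architecture using Python protocols. `Captioner`, `Synthesizer`, `AudioDescriber`, and both stub classes are hypothetical names, and a real system would plug in actual captioning and speech models.

```python
from dataclasses import dataclass
from typing import Protocol

class Captioner(Protocol):
    def describe(self, image: bytes) -> str: ...

class Synthesizer(Protocol):
    def synthesize(self, text: str) -> bytes: ...

@dataclass
class AudioDescriber:
    """Chain an image captioner into a TTS engine: image in, spoken description out."""
    captioner: Captioner
    tts: Synthesizer

    def run(self, image: bytes) -> bytes:
        caption = self.captioner.describe(image)
        return self.tts.synthesize(caption)

# Hypothetical stub components so the sketch runs without any real model.
class StubCaptioner:
    def describe(self, image: bytes) -> str:
        return f"An image of {len(image)} bytes."

class StubTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # stands in for synthesized audio

if __name__ == "__main__":
    print(AudioDescriber(StubCaptioner(), StubTTS()).run(b"\x00" * 4))
```

Keeping the two stages behind small interfaces lets either model be swapped out, for instance replacing the captioner with an NLP dialogue component to build a voice assistant.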

Exploration of New Applications and Use Cases

Emergent abilities also open up a wide range of new applications and use cases for text-to-speech technology.

- Personalized Learning: Creating personalized learning experiences that adapt to individual learning styles and preferences. This can involve generating speech tailored to specific learning needs, providing feedback and encouragement, and creating engaging and interactive learning environments.

- Interactive Entertainment: Enhancing interactive entertainment through more immersive and engaging audio. This can involve creating realistic and believable characters, generating dynamic and responsive narration and dialogue, and providing interactive audio experiences.

- Accessibility: Improving accessibility for individuals with disabilities by providing alternative communication methods. This can involve creating text-to-speech applications for individuals with visual impairments, pairing text-to-speech with speech recognition (speech-to-text) for individuals with motor impairments, and creating assistive technologies that enhance communication and interaction.

Open Research Questions and Challenges

While emergent abilities in large text-to-speech models present exciting possibilities, several open research questions and challenges remain.

- Ethical Considerations: Addressing ethical concerns related to the use of these models, such as the potential for misuse, the creation of deepfakes, and the impact on human interaction. Developing guidelines and best practices for responsible development and deployment of these models is crucial.

- Bias and Fairness: Mitigating bias and ensuring fairness in the outputs of these models. This involves addressing potential biases in training data, developing techniques for identifying and mitigating bias, and ensuring that the models are fair and equitable in their outputs.

- Transparency and Explainability: Improving the transparency and explainability of these models. This involves understanding how these models work, identifying the factors that contribute to their outputs, and developing methods for explaining their decisions to users.

The future of text-to-speech is full of potential. As these models continue to evolve, we can expect more nuanced and expressive speech from machines: virtual assistants that respond to our moods with an appropriate, empathetic tone, educational tools that tailor their delivery to individual learners, and accessibility tools that help people with disabilities communicate more effectively. The emergence of these large text-to-speech AI models is more than a technological advance; it shows how artificial intelligence can narrow the gap between humans and machines and help create a more inclusive and accessible world for all.

Standi Techno News