Discord Ignored Server Behind Costly Mastodon Spam Attacks

The recent wave of spam attacks on Mastodon, a decentralized social media platform, has highlighted a critical issue: the responsibility of online platforms to combat malicious activity. A Discord server, allegedly responsible for orchestrating these attacks, has drawn attention for its coordinated efforts to disrupt Mastodon’s ecosystem. The attacks, which involved flooding Mastodon with unwanted content, resulted in significant financial and reputational damage for the platform. However, Discord’s response to the incident has raised concerns about its commitment to protecting its users and the broader online community.

The Discord Incident

The incident centers on a Discord server that coordinated costly spam attacks on Mastodon. The server, which was dedicated to a specific online community, was used to launch a barrage of unwanted messages and content onto Mastodon, disrupting the platform’s normal operations and causing significant inconvenience to its users.

The Scale and Nature of the Attacks

The spam attacks were characterized by their sheer volume and coordinated nature. A large number of accounts, likely controlled by members of the Discord server, simultaneously flooded Mastodon with irrelevant and repetitive messages, overwhelming the platform’s infrastructure and making it difficult for legitimate users to engage in meaningful conversations.

The Costs Associated with the Attacks

The spam attacks resulted in substantial costs, both financial and reputational, for Mastodon and its users.

- Financial Costs: The attacks strained Mastodon’s servers, leading to increased costs for infrastructure maintenance and bandwidth. The platform’s resources were diverted from other essential tasks to deal with the spam onslaught.

- Reputational Damage: The attacks tarnished Mastodon’s reputation as a reliable and user-friendly platform. The spam made it difficult for users to find valuable content and contributed to a negative perception of the platform.

Discord’s Response and its Implications

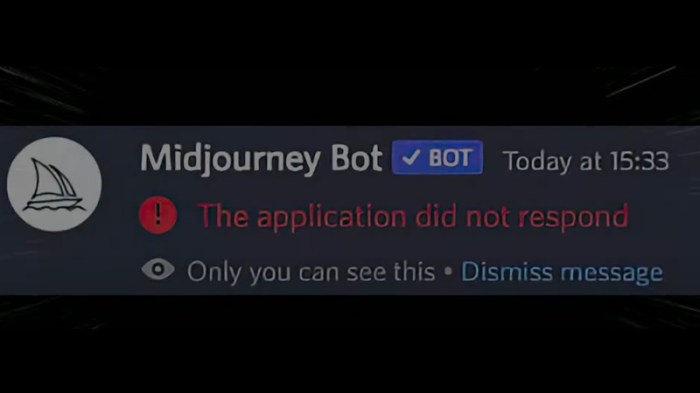

Discord’s official response to the Mastodon spam attacks was a mix of silence and a delayed, somewhat vague statement. The platform initially remained silent, allowing the attacks to continue for days, causing significant disruption and frustration among Mastodon users. This inaction raised concerns about Discord’s commitment to addressing malicious activity on its platform. Later, Discord released a statement acknowledging the issue and stating that they were investigating the matter, but provided no concrete details about the actions taken or the outcome of their investigation.

Discord’s Response: A Lack of Transparency and Action

Discord’s initial silence and subsequent vague statement were met with criticism from both users and cybersecurity experts. Many argued that the company’s lack of transparency and its failure to act swiftly showed it was not taking the issue seriously. The delayed response, coupled with the absence of specific details about the actions taken, further fueled concerns about Discord’s commitment to platform security.

The Impact of Discord’s Inaction on User Trust

The incident has potentially damaged user trust in Discord. Many users felt that Discord’s inaction demonstrated a lack of concern for their security and well-being. This perception could lead to a decline in user engagement and a shift towards alternative platforms that prioritize user safety and security.

Potential Implications for Discord’s Future

Discord’s inaction could have long-term implications for the platform’s future. If the company fails to effectively address security issues and build user trust, it could face increased competition from platforms that prioritize security and user safety. The incident could also lead to regulatory scrutiny and legal challenges, potentially impacting Discord’s growth and profitability.

The Role of Mastodon in the Incident

Mastodon was the target of the spam campaign rather than a participant in it: the attacks were coordinated on Discord and carried out against Mastodon’s servers and users. This section explores Mastodon’s response, the vulnerabilities of decentralized platforms to such attacks, and the impact of the incident on Mastodon’s reputation and user base.

Mastodon’s Response to the Spam Attacks

Mastodon’s response to the spam attacks was characterized by a combination of proactive measures and community-driven efforts. The platform’s administrators took swift action to identify and block malicious accounts involved in the spamming activities. They also collaborated with other Mastodon instances to share information and coordinate responses. Additionally, Mastodon’s community actively participated in reporting spam accounts and promoting awareness about the attacks.

Vulnerabilities of Decentralized Platforms

The incident highlighted potential vulnerabilities inherent in decentralized platforms like Mastodon. While decentralization offers advantages like censorship resistance and user control, it also creates challenges in managing spam and malicious activity. The distributed nature of Mastodon makes it difficult to implement centralized controls and monitor activity across all instances.

Impact on Mastodon’s Reputation and User Base

The spam attack incident had a mixed impact on Mastodon’s reputation and user base. While some users expressed concerns about the platform’s ability to handle such attacks, others viewed it as a testament to the platform’s decentralized nature and its resilience against centralized control. The incident also led to increased awareness of Mastodon, attracting new users who were seeking alternatives to centralized social media platforms.

Ethical and Legal Considerations

The Discord server’s actions and Discord’s response raise significant ethical and legal concerns. This incident underscores the complex relationship between online platforms, their users, and the potential for misuse of their services.

Ethical Implications of the Discord Server’s Actions

The Discord server’s actions were unethical on several levels. The coordinated spam attacks on Mastodon were intended to disrupt the platform and its users, causing inconvenience and potential financial harm. They also violated the terms of service and community guidelines of the Mastodon servers that were targeted. Moreover, they demonstrated a disregard for the principles of online civility and respect for others.

Legal Ramifications for the Discord Server

The Discord server’s actions could have legal ramifications, depending on the specific laws and regulations in the jurisdiction where the server is located. The server’s actions could potentially violate laws related to:

- Cyberbullying: The server’s actions could be considered cyberbullying if they were intended to harass or intimidate Mastodon users.

- Harassment: The server’s actions could be considered harassment if they were intended to cause distress or alarm to Mastodon users.

- Defamation: The server’s actions could be considered defamation if they spread false or misleading information about Mastodon or its users.

- Violation of Terms of Service: Although a breach of terms of service is a contractual matter rather than a law in itself, the server’s actions could be considered a violation of Mastodon’s terms of service, which could result in account suspension or termination.

Legal Ramifications for Discord

Discord’s response to the incident could also have legal ramifications. Discord could be held liable for the actions of its users if it failed to take reasonable steps to prevent or mitigate the server’s actions. This could include:

- Negligence: Discord could be found negligent if it failed to take reasonable steps to prevent the server’s actions, despite being aware of the potential for harm.

- Vicarious Liability: Discord could be held vicariously liable for the server’s actions if it was aware of the server’s activities and failed to take action to stop them.

- Failure to Comply with Laws and Regulations: Discord could be found in violation of laws and regulations if it failed to take appropriate action to prevent or mitigate the server’s actions.

Broader Implications for Online Platforms

The Discord incident highlights the challenges faced by online platforms in balancing free speech with the need to prevent harmful content and activities. This incident raises important questions about the responsibilities of online platforms to:

- Moderate Content: Online platforms have a responsibility to moderate content that is harmful, illegal, or violates their terms of service.

- Prevent Abuse: Online platforms have a responsibility to prevent abuse of their services, including coordinated attacks and spam.

- Protect Users: Online platforms have a responsibility to protect their users from harm, including cyberbullying, harassment, and defamation.

Future Directions and Recommendations

The Discord incident highlighted significant vulnerabilities in both Discord’s platform and Mastodon’s infrastructure, exposing the need for proactive measures to prevent similar incidents in the future. Addressing these issues requires a collaborative effort involving both platforms and the broader online community.

Recommendations for Discord

Discord needs to implement robust mechanisms to detect and mitigate coordinated malicious activity, such as spam attacks. These recommendations aim to enhance Discord’s ability to proactively address such incidents.

- Improved Detection Mechanisms: Discord should invest in advanced AI-powered detection systems that can identify patterns indicative of coordinated spam attacks. This includes analyzing user behavior, message content, and network traffic to identify suspicious activities.

- Enhanced Response Capabilities: Discord needs to streamline its response to reported incidents. This involves faster identification and isolation of compromised accounts, quicker removal of malicious content, and more efficient communication with affected users.

- Collaboration with Other Platforms: Discord should actively collaborate with other platforms, including Mastodon, to share information and best practices for combating coordinated attacks. This can involve joint investigations and the development of shared mitigation strategies.

- Increased Transparency: Discord should provide more transparency about its policies and procedures for addressing malicious activity. This includes clear guidelines for users to report incidents and regular updates on the platform’s efforts to combat spam and abuse.
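
As a rough illustration of the detection idea in the first recommendation above, the following Python sketch flags message texts that several distinct accounts post within a short time window. It is a minimal heuristic under stated assumptions, not a description of any system Discord or Mastodon actually runs: the `Post` record, the `find_coordinated_bursts` function, and the default thresholds (60 seconds, 3 accounts) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
import re


@dataclass(frozen=True)
class Post:
    account: str      # hypothetical account identifier
    text: str         # message body
    timestamp: float  # seconds since epoch


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_coordinated_bursts(posts, window_seconds=60.0, min_accounts=3):
    """Return normalized texts posted by at least `min_accounts` distinct
    accounts within any span of `window_seconds` (illustrative thresholds)."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    flagged = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Shrink the window from the left until it fits the time span.
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append(text)
                break
    return flagged


if __name__ == "__main__":
    sample = [
        Post("a", "Buy NOW", 0),
        Post("b", "buy  now", 10),
        Post("c", " buy now ", 20),
        Post("d", "hello", 5),
    ]
    print(find_coordinated_bursts(sample))
```

Exact-match grouping like this is easy to evade with small text changes, so a real system would likely pair it with fuzzier similarity measures and human review.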

Strategies for Mastodon

Mastodon, as a decentralized platform, faces unique challenges in mitigating spam attacks. These recommendations aim to strengthen Mastodon’s resilience against such incidents.

- Improved Spam Filtering: Mastodon instances should implement more effective spam filters that can identify and block malicious content. This could involve leveraging AI algorithms, user-reported spam, and collaborative filtering techniques.

- Enhanced Account Verification: Mastodon should explore ways to implement account verification processes to reduce the risk of spam originating from fake or compromised accounts. This could involve email verification, social media integration, or other mechanisms.

- Community Moderation Tools: Mastodon should provide robust tools for community moderators to manage spam and abuse on their instances. This includes features for flagging suspicious accounts, removing spam content, and blocking malicious users.

- Increased Awareness and Education: Mastodon users should be educated about the risks of spam attacks and encouraged to report suspicious activity. This can be achieved through user guides, community forums, and awareness campaigns.
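
The signals named in the list above (user reports, account verification, posting volume) could be combined into a simple triage score for moderators. The sketch below is one possible shape for that, assuming hypothetical field names, point values, and thresholds; it does not reflect Mastodon’s actual moderation tooling, and any outcome other than "allow" is meant to be confirmed by a human moderator.

```python
from dataclasses import dataclass


@dataclass
class AccountSignals:
    account_age_days: float   # time since registration
    email_verified: bool      # whether the sign-up email was confirmed
    user_reports: int         # spam reports filed against the account
    posts_last_hour: int      # recent posting volume


def spam_score(s: AccountSignals) -> int:
    """Add up illustrative penalty points; higher means more suspicious."""
    score = 0
    if s.account_age_days < 1:
        score += 2
    if not s.email_verified:
        score += 2
    if s.user_reports >= 3:
        score += 3
    if s.posts_last_hour > 30:
        score += 3
    return score


def triage(s: AccountSignals, review_at: int = 4, limit_at: int = 7) -> str:
    """Map a score to a suggested action: 'allow', 'review', or 'limit'."""
    score = spam_score(s)
    if score >= limit_at:
        return "limit"
    if score >= review_at:
        return "review"
    return "allow"


if __name__ == "__main__":
    suspicious = AccountSignals(0.1, False, 5, 50)
    print(triage(suspicious))
```

Keeping the score additive and the thresholds as parameters lets each instance tune the policy to its own size and tolerance for false positives.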

Steps for Online Platforms

The Discord incident highlights the need for a broader approach to combating coordinated malicious activity across online platforms.

- Industry-Wide Collaboration: Online platforms should establish a collaborative framework for sharing information and best practices related to combating spam and abuse. This can involve industry-specific working groups, joint research initiatives, and information-sharing platforms.

- Standardized Reporting Mechanisms: Platforms should adopt standardized reporting mechanisms for users to flag suspicious activity. This ensures consistency and facilitates cross-platform investigations.

- Proactive Measures: Platforms should invest in proactive measures to identify and mitigate malicious activity before it impacts users. This includes developing robust AI-powered detection systems, implementing account verification processes, and fostering community moderation.

- Legal and Regulatory Framework: Governments and regulatory bodies should consider establishing a legal and regulatory framework that addresses coordinated malicious activity on online platforms. This can include provisions for platform accountability, penalties for malicious actors, and mechanisms for cross-border cooperation.
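
To make the idea of standardized reporting more concrete, here is a small Python sketch of what a shared abuse report might look like when serialized to JSON. No such cross-platform standard is described in this article, so the `AbuseReport` fields and the category names are assumptions chosen purely for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# Hypothetical category vocabulary; a real standard would define its own.
ALLOWED_CATEGORIES = {"spam", "harassment", "coordinated_attack"}


def _utc_now() -> str:
    """Current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AbuseReport:
    reporting_platform: str   # where the report was filed
    target_platform: str      # where the abuse took place
    category: str             # one of ALLOWED_CATEGORIES
    description: str          # free-text summary from the reporter
    evidence_urls: list = field(default_factory=list)
    reported_at: str = field(default_factory=_utc_now)


def to_json(report: AbuseReport) -> str:
    """Validate the category and serialize the report with stable key order."""
    if report.category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {report.category!r}")
    return json.dumps(asdict(report), sort_keys=True)


if __name__ == "__main__":
    report = AbuseReport(
        reporting_platform="mastodon",
        target_platform="discord",
        category="coordinated_attack",
        description="Server appears to coordinate spam waves.",
        evidence_urls=["https://example.com/evidence/1"],
    )
    print(to_json(report))
```

A fixed category list and sorted keys keep reports comparable across platforms, which is the point of standardizing them in the first place.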

The Discord server’s actions and Discord’s inaction have sparked a broader discussion about the role of online platforms in combating malicious activity. This incident serves as a stark reminder of the challenges faced by decentralized platforms in dealing with coordinated attacks. The future of online communication depends on platforms taking a proactive stance against such threats, fostering a more secure and trustworthy online environment for all.

Discord’s inaction against the server that orchestrated costly Mastodon spam attacks raises questions about platform responsibility. While the decentralized nature of Web3 is often touted as a positive, it can also create loopholes for malicious actors. As we look towards Web3 founders’ 2024 expectations, ensuring robust security and accountability across platforms will be crucial for the continued growth and adoption of this technology.

This incident highlights the need for more proactive measures from platforms like Discord to prevent similar attacks in the future.

Standi Techno News
