The EU AI Act, a groundbreaking piece of legislation, is shaking up the world of artificial intelligence. The Act regulates the development and deployment of AI systems and includes dedicated rules for General-Purpose AI (GPAI), the versatile models and systems capable of performing a wide range of tasks. This shift towards stricter regulation reflects a growing global awareness of the risks and ethical questions raised by AI, particularly by powerful, adaptable technologies like GPAI.

The EU AI Act introduces a comprehensive framework for governing GPAI, encompassing everything from risk assessment and mitigation to the responsibilities of developers, deployers, and authorities. It aims to strike a delicate balance, fostering innovation while ensuring responsible and ethical use of AI. The Act’s impact extends far beyond Europe, influencing global discussions on AI regulation and setting a precedent for responsible AI development.

The EU AI Act and its Impact on GPAI Rules

The EU AI Act, a landmark piece of legislation regulating artificial intelligence (AI) systems, has significant implications for general-purpose AI (GPAI). Because GPAI is versatile and can be adapted across many domains, it is hard to regulate by intended use alone. The EU AI Act addresses this with provisions tailored to GPAI, aiming to foster responsible innovation while safeguarding ethical considerations.

Key Provisions of the EU AI Act Related to GPAI

The EU AI Act identifies GPAI systems as a distinct category requiring specific attention. This recognition acknowledges the potential for both immense benefits and risks associated with these systems. Key provisions of the EU AI Act related to GPAI include:

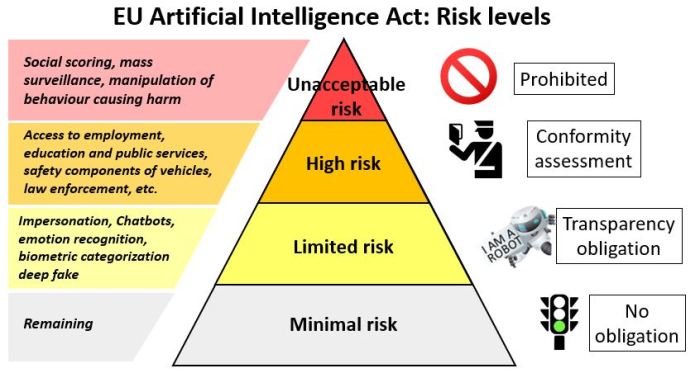

- Risk-Based Approach: The EU AI Act adopts a risk-based approach to regulating AI systems, classifying them into four risk categories: unacceptable risk, high risk, limited risk, and minimal risk. GPAI models are not automatically high-risk. They carry their own set of obligations, with additional duties for models classified as posing systemic risk, and a GPAI system faces the high-risk requirements when it is used for a high-risk purpose.

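- Transparency and Explainability: The EU AI Act requires transparency for high-risk AI systems and for GPAI models. Providers of GPAI models must keep technical documentation, share information with downstream providers who build on the model, and publish a summary of the content used for training. These duties aim to help users and regulators understand how the systems work, fostering trust and accountability.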

- Human Oversight: The EU AI Act mandates human oversight for high-risk AI systems, which includes GPAI systems deployed for high-risk purposes. This provision ensures that human judgment and control remain central to the deployment and operation of these systems, mitigating potential risks and biases.

- Data Governance: The EU AI Act addresses data governance, emphasizing the quality of training data for high-risk systems and requiring GPAI model providers to put in place a policy for complying with EU copyright law. These provisions sit alongside existing EU data protection law and aim to safeguard individual rights and promote responsible data practices.

Key Concepts and Definitions

The EU AI Act introduces a framework for regulating AI systems, focusing on their potential risks and ensuring ethical and responsible development and deployment. Understanding the key concepts and definitions outlined in the Act is crucial for navigating the evolving landscape of AI governance, particularly for GPAI systems.

General-Purpose AI vs. Specialized AI Systems

The EU AI Act defines general-purpose AI separately from AI systems built for a specific purpose.

- General-purpose AI systems are designed to perform a wide range of tasks across various domains. These systems are highly adaptable and are typically trained on large, diverse datasets, which lets them perform many different functions. Examples include large language models (LLMs), which can be used for tasks such as text generation, translation, and question answering.

- Specialized AI systems, on the other hand, are designed for specific tasks or domains. These systems are typically trained on specialized datasets and are optimized for specific applications. Examples include image recognition systems used in medical diagnosis or fraud detection systems used in financial institutions.

High-Risk AI Systems

The EU AI Act identifies “high-risk AI systems” as those that pose a significant risk to the safety, health, or fundamental rights of individuals. These systems are subject to stricter regulatory requirements, including conformity assessments and mandatory risk mitigation measures.

The Act defines high-risk AI systems as those used in areas such as:

- Biometric identification and categorization of individuals (e.g., facial recognition systems)

- Management and control of critical infrastructure (e.g., traffic management systems)

- Education and training (e.g., AI-powered assessment tools)

- Employment and recruitment (e.g., AI-based hiring platforms)

- Access to essential private and public services (e.g., credit scoring algorithms)

- Law enforcement and justice (e.g., predictive policing systems)

- Migration, asylum, and border control (e.g., AI-powered border surveillance systems)

- Healthcare (e.g., medical diagnosis and treatment systems regulated as medical devices)
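Because a general-purpose system can be put to many uses, one practical first step is to check each intended use against the areas listed above. The snippet below is a minimal sketch of that check, not a legal classification. The short area keys and the function name `needs_high_risk_review` are hypothetical labels chosen for illustration; they are not identifiers from the Act.

```python
# Hypothetical short keys for the use areas listed above (not official identifiers).
HIGH_RISK_AREAS = {
    "biometrics",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "healthcare",
}


def needs_high_risk_review(use_areas):
    """Return the sorted subset of a system's use areas that appear on the list."""
    return sorted(set(use_areas) & HIGH_RISK_AREAS)


# Example: a chatbot product used for CV screening and for marketing copy.
flagged = needs_high_risk_review(["employment", "marketing"])
print(flagged)
```

A non-empty result only signals that the use case deserves a closer legal look; the actual classification depends on the Act's detailed criteria and exemptions.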

Risk Assessment and Mitigation Measures

The EU AI Act emphasizes the importance of risk assessment and mitigation measures for high-risk AI systems. This involves:

- Identifying potential risks: Assessing the potential negative impacts of the AI system on individuals, society, and the environment.

- Evaluating the likelihood and severity of risks: Estimating how probable each risk is and how serious its impact would be.

- Developing and implementing mitigation measures: Taking steps to reduce or eliminate the identified risks.

- Monitoring and reviewing the effectiveness of mitigation measures: Regularly evaluating the implemented measures and adjusting them as needed.
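The steps above can be sketched as a simple risk register. This is an illustrative sketch only: the 1 to 5 scales, the likelihood times severity score, the threshold of 10, and the example risks are all assumptions made for the example. The Act does not prescribe this scoring method.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (negligible) to 5 (critical)
    mitigations: list = field(default_factory=list)

    def score(self) -> int:
        # Simple likelihood x severity product, a common risk-matrix convention.
        return self.likelihood * self.severity


def prioritize(risks, threshold=10):
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score() >= threshold]
    return sorted(flagged, key=lambda r: r.score(), reverse=True)


# Hypothetical register entries for illustration.
risks = [
    Risk("biased outputs in hiring use", likelihood=3, severity=4),
    Risk("minor formatting errors", likelihood=4, severity=1),
    Risk("leak of personal data", likelihood=2, severity=5),
]

for risk in prioritize(risks):
    print(risk.name, risk.score())
```

Re-running `prioritize` after recording mitigations and updating the likelihood or severity values is one way to cover the monitoring and review step.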

Governance and Oversight of GPAI Systems

The EU AI Act recognizes the unique challenges posed by GPAI systems, particularly in terms of transparency, accountability, and potential risks. The Act establishes a governance framework designed to ensure responsible development and deployment of GPAI, balancing innovation with ethical considerations and human oversight.

Responsibilities of Developers, Deployers, and Authorities

The EU AI Act assigns specific responsibilities to different stakeholders involved in GPAI development and deployment. These responsibilities are crucial for managing the risks associated with GPAI systems, ensuring their ethical use, and maintaining public trust.

- Developers (called providers in the Act) are tasked with ensuring that their GPAI systems meet the requirements outlined in the Act. This includes incorporating appropriate safeguards to mitigate risks, documenting the system’s design and functionality, and conducting thorough risk assessments. They must also provide clear and accessible information to users about the system’s capabilities and limitations.

- Deployers are responsible for implementing appropriate risk management measures and ensuring that GPAI systems are used in accordance with the Act’s provisions. They must monitor the system’s performance, address any emerging risks, and ensure that the system’s use aligns with ethical principles and human rights.

- Authorities play a crucial role in overseeing the development and deployment of GPAI systems. They are responsible for enforcing the Act’s provisions, conducting audits, and investigating potential violations. They also play a role in promoting responsible innovation, fostering collaboration among stakeholders, and providing guidance on best practices for GPAI development and use.

Role of Ethical Considerations and Human Oversight

Ethical considerations and human oversight are fundamental to the responsible development and use of GPAI systems. The EU AI Act emphasizes the importance of integrating ethical principles into the design, development, and deployment of these systems.

- Ethical Principles: The Act encourages the adoption of ethical principles such as fairness, transparency, accountability, and non-discrimination. These principles should guide the design and development of GPAI systems, ensuring that they are used in a way that respects human rights and promotes societal well-being.

- Human Oversight: The Act recognizes the importance of human oversight in the development and use of GPAI systems. This involves ensuring that human experts are involved in all stages of the system’s lifecycle, from design and development to deployment and monitoring. Human oversight is crucial for ensuring that GPAI systems are used responsibly and ethically, and that they do not lead to unintended consequences or harm.

Impact on Innovation and Competition

The EU AI Act’s influence on innovation and competition in the GPAI sector is a complex issue with both potential challenges and opportunities. The Act’s aim to foster responsible AI development while ensuring a level playing field for businesses presents a delicate balancing act.

Impact on Innovation

The EU AI Act’s impact on innovation in GPAI is multifaceted. On the one hand, the Act’s emphasis on transparency, accountability, and risk assessment can create a more predictable and trustworthy environment for GPAI development, potentially attracting investment and encouraging innovation. On the other hand, the Act’s stringent requirements, particularly for high-risk GPAI systems, could deter some businesses from entering the market, especially startups with limited resources.

Global Implications and Future Trends

The EU AI Act, with its comprehensive approach to regulating AI systems, has the potential to significantly influence global AI governance and regulation. Its impact extends beyond the European Union, setting a precedent for other countries and regions considering their own AI regulatory frameworks. The Act’s emphasis on transparency, accountability, and human oversight has sparked discussions and prompted other nations to evaluate their own AI policies.

Impact on Global AI Governance

The EU AI Act’s influence on global AI governance is multifaceted. The Act’s risk-based approach, categorizing AI systems based on their potential harm, has resonated with many nations seeking to strike a balance between promoting innovation and mitigating risks. The Act’s provisions regarding transparency, data governance, and human oversight are likely to be adopted or adapted by other countries as they develop their own AI regulations.

- The Act’s requirement for AI systems to be developed and deployed in a transparent and accountable manner could influence the development of global standards for AI transparency and explainability.

- The Act’s focus on human oversight and the establishment of independent bodies to monitor AI systems could inspire similar initiatives in other countries, promoting ethical and responsible AI development and deployment.

Adoption of Similar Regulations by Other Countries

Several countries and regions are actively developing their own AI rules, in some cases drawing on ideas from the EU AI Act.

- China, with its ambitious AI development plans, has introduced regulations addressing AI ethics, data security, and algorithmic transparency.

- The United States, while lacking a comprehensive AI law, has adopted sector-specific regulations and issued guidance on AI ethics and responsible AI development.

- Canada, known for its strong data protection laws, launched a national AI strategy in 2017 and has released guidelines on AI ethics.

Emerging Trends and Challenges in GPAI

The field of GPAI is constantly evolving, presenting new challenges and opportunities.

- The increasing use of generative AI models, such as large language models (LLMs) and image generation models, raises concerns about potential biases, misinformation, and the ethical implications of AI-generated content.

- The rise of cross-border AI applications, particularly in areas like healthcare and finance, necessitates international cooperation to address issues of data privacy, security, and regulatory harmonization.

- The increasing reliance on AI for critical infrastructure, such as transportation and energy, demands robust safety and security measures to prevent potential failures or malicious attacks.

The EU AI Act marks a pivotal moment in the development of AI. It signifies a move towards a more proactive approach to managing the potential risks associated with advanced AI systems. By setting clear guidelines for responsible development and deployment, the Act aims to pave the way for a future where AI innovation is not only groundbreaking but also ethically sound and beneficial to society. As the world grapples with the implications of AI, the EU AI Act’s impact will continue to be felt, shaping the landscape of AI regulation and fostering a more responsible and ethical approach to this transformative technology.

The EU AI Act’s General-Purpose AI (GPAI) rules are evolving, aiming to strike a balance between innovation and ethical AI development. This is a complex task, but one that is becoming increasingly important as AI technologies grow more powerful and more integrated into our lives. The rapid pace of technological advancement underlines the need for regulations that ensure responsible and ethical development of AI.

Standi Techno News
