Google Assistant Lens Integration

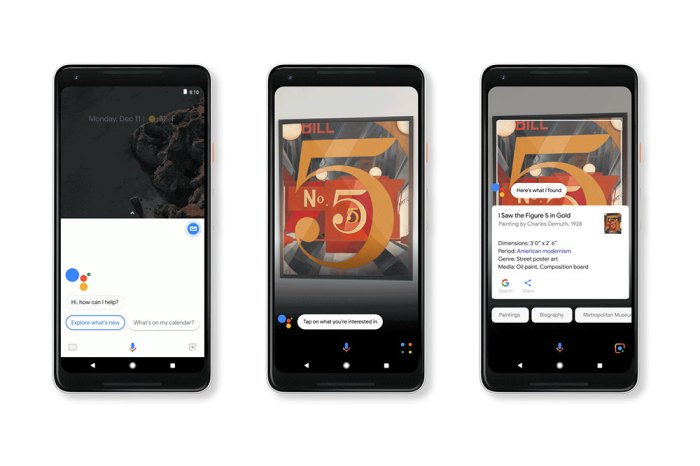

Google Assistant’s updated Lens support gives users a more intuitive and powerful way to interact with the physical world. The integration combines the visual recognition of Google Lens with the voice-driven convenience of Google Assistant, making everyday tasks easier and more efficient.

New Features and Functionalities

The integration of Google Assistant with Lens brings a host of new features and functionalities that enhance the user experience.

- Direct Actions: Users can now act on objects identified by Lens directly through Google Assistant. For example, you can ask Assistant to set a timer based on the duration displayed on a microwave, or to translate text captured by Lens in real time.

- Contextual Information: Lens can now provide contextual information about the objects it identifies, drawing on Google’s vast knowledge base. For instance, pointing your phone at a landmark can bring up historical facts, directions, and nearby attractions.

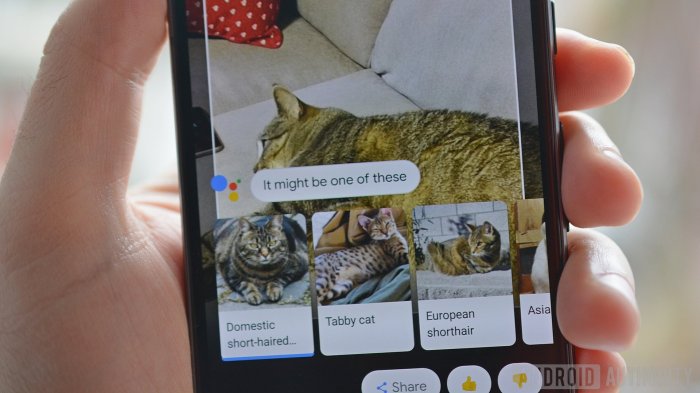

- Enhanced Object Recognition: The updated Lens integration offers improved object recognition capabilities, allowing users to identify a wider range of items with greater accuracy. This can be helpful for identifying plants, products, or even artwork.

- Multi-Language Support: Lens now supports multiple languages, making it easier to translate text, menus, or signage in real time. This feature is particularly useful for travelers or anyone who encounters text in a foreign language.
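To make the microwave timer example from the Direct Actions item concrete, here is a minimal Python sketch of one step such a feature would need: turning the text read off a display into a duration in seconds. The function name `parse_display_duration` and the accepted formats are assumptions made for illustration. They are not part of any Google Assistant or Lens API.

```python
import re
from typing import Optional


def parse_display_duration(text: str) -> Optional[int]:
    """Return a duration in seconds for display text such as '2:30' or '1:05:00'.

    Hypothetical helper: it assumes the recognized text contains a time in
    M:SS or H:MM:SS form and returns None when no such pattern is found.
    """
    match = re.search(r"\b(?:(\d{1,2}):)?(\d{1,2}):(\d{2})\b", text)
    if match is None:
        return None
    hours, minutes, seconds = match.groups()
    # The hours group is optional, so treat a missing value as zero.
    return int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds)


if __name__ == "__main__":
    print(parse_display_duration("2:30"))
    print(parse_display_duration("Popcorn"))
```

A real assistant would then hand the resulting number of seconds to its timer feature. That step is left out here because it depends on platform APIs this article does not describe.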

Examples of Enhanced User Experience

The integration of Lens into Google Assistant provides a multitude of real-world applications, making everyday tasks more efficient and enjoyable.

- Shopping: You can point your phone at a product in a store using Lens and get instant information about its price, reviews, and availability at other retailers. This eliminates the need to manually search for the product online.

- Travel: While traveling, Lens can be used to translate signs and menus, and even to convert currency. It can also provide information about nearby attractions, restaurants, and transportation options.

- Education: Students can use Lens to identify plants, animals, or historical artifacts. They can also use it to translate foreign language texts or get definitions of unfamiliar words.

- Home Improvement: You can point your phone at a damaged appliance using Lens and get information about its model, repair options, and even replacement parts.

Integration with Google Lens

The integration of Google Assistant and Google Lens marks a significant step towards a more intuitive and powerful mobile experience. This integration empowers users to interact with the physical world through the lens of their smartphones, leveraging the combined capabilities of voice commands and visual recognition.

Technical Aspects of the Integration

The integration of Google Assistant and Lens relies on a sophisticated interplay of technologies, including computer vision, natural language processing, and cloud computing. Here’s a breakdown of how it works:

- Data Capture and Processing: When a user activates Google Lens through Google Assistant, the camera captures an image of the real-world object or scene. This image is then transmitted to Google’s servers, where it undergoes a series of complex processing steps.

- Visual Recognition: Google Lens utilizes advanced machine learning algorithms to analyze the captured image and identify the objects, text, or scenes present. This process involves comparing the image to a vast database of visual information, enabling accurate identification.

- Natural Language Processing: Once Google Lens has identified the objects or text in the image, the information is passed to Google Assistant’s natural language processing engine. This engine relates the recognized content to the user’s spoken request, so that users can act on what the camera sees through voice commands.

- Contextualized Responses: Based on the processed information, Google Assistant generates relevant responses, providing users with information, actions, or suggestions related to the identified object or scene. This includes tasks such as translating text, identifying products, providing directions, or searching for relevant information online.
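The four steps above can be sketched as a small Python pipeline. This is an illustrative model under stated assumptions, not Google’s implementation: the `Detection` type, the `fake_recognizer` stub, and the keyword-based `detect_intent` function are all hypothetical stand-ins for the server-side vision and language systems the article describes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    kind: str          # "text", "object", or "landmark"
    value: str         # recognized text or label
    confidence: float  # score between 0 and 1


def fake_recognizer(image: bytes) -> List[Detection]:
    """Hypothetical stand-in for the visual recognition step."""
    return [Detection(kind="text", value="Sortie", confidence=0.93)]


def detect_intent(command: str) -> str:
    """Rough keyword matching standing in for natural language processing."""
    lowered = command.lower()
    if "translate" in lowered:
        return "translate"
    if "what is" in lowered or "identify" in lowered:
        return "identify"
    return "search"


def respond(
    image: bytes,
    command: str,
    recognizer: Callable[[bytes], List[Detection]],
) -> str:
    """Combine what the camera saw with what the user asked for."""
    detections = recognizer(image)
    if not detections:
        return "I couldn't recognize anything in that image."
    # Use the detection the recognizer is most confident about.
    best = max(detections, key=lambda d: d.confidence)
    intent = detect_intent(command)
    if intent == "translate" and best.kind == "text":
        return f"Translate the text '{best.value}'"
    if intent == "identify":
        return f"This looks like: {best.value}"
    return f"Search the web for '{best.value}'"


if __name__ == "__main__":
    print(respond(b"", "Translate this sign", fake_recognizer))
```

Passing the recognizer in as a parameter keeps the sketch testable without a camera or network access. In a real system that call would be the round trip to the servers described in the first step.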

Benefits and Challenges

The integration of Google Assistant and Lens offers a range of potential benefits, including:

- Enhanced Convenience: By combining voice commands and visual recognition, the integration eliminates the need for users to manually type or search for information. This streamlines the user experience and makes it easier to access information and complete tasks.

- Improved Accessibility: The integration can be particularly beneficial for users with disabilities who may find it challenging to use traditional input methods. By leveraging voice commands and visual recognition, the integration provides a more accessible way to interact with their devices.

- Expanded Functionality: The integration expands the capabilities of both Google Assistant and Lens, allowing users to perform a wider range of tasks and access a more comprehensive set of information. For instance, users can now ask Google Assistant to identify a product they see in a store using Lens, or to translate a sign in a foreign language.

However, there are also some challenges associated with this integration:

- Privacy Concerns: As the integration involves the capture and processing of visual data, it raises concerns about user privacy. Google has implemented measures to protect user privacy, but it remains a critical issue that needs to be addressed.

- Accuracy and Reliability: While Google Lens has made significant progress in visual recognition, it is not infallible. There may be instances where the system fails to accurately identify objects or text, leading to inaccurate or misleading information.

- Limited Functionality: The integration is still in its early stages, and there are limitations to the types of tasks and information that can be accessed. As the technology continues to develop, these limitations are expected to be addressed.
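One common way to soften the accuracy problem described above is to attach a confidence score to each candidate result and hedge the answer when the score is low. The Python sketch below shows the idea. The `choose_answer` function and the 0.8 threshold are assumptions chosen for illustration, and nothing in the source says Google Lens works this way.

```python
from typing import List, Tuple


def choose_answer(candidates: List[Tuple[str, float]], threshold: float = 0.8) -> str:
    """Pick the best-scoring label and hedge when confidence is below the threshold.

    `candidates` is a list of (label, confidence) pairs. Both the input shape
    and the default threshold are hypothetical.
    """
    if not candidates:
        return "No match found."
    label, score = max(candidates, key=lambda c: c[1])
    if score >= threshold:
        return f"This is {label}."
    return f"This might be {label}, but I'm not sure."


if __name__ == "__main__":
    print(choose_answer([("a monstera plant", 0.95), ("a philodendron", 0.40)]))
    print(choose_answer([("a philodendron", 0.55)]))
```

Hedging a low-confidence result does not make recognition more accurate, but it lowers the chance that a user treats a wrong identification as fact.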

Impact on User Interactions

The integration of Google Lens with Google Assistant has significantly altered how users interact with the virtual assistant, making it more intuitive and efficient. This integration allows users to leverage the power of visual recognition to perform tasks and access information with greater ease.

Enhanced Information Retrieval

The integration has streamlined the process of information retrieval. Before the integration, users had to manually describe what they were looking at to Google Assistant. Now, users can simply point their camera at an object or text and Google Assistant can automatically identify it and provide relevant information. This is particularly helpful for identifying products, landmarks, plants, and text in different languages.

- Users can now point their camera at a product they see in a store and Google Assistant will provide information about the product, including price comparisons, reviews, and availability.

- When traveling, users can point their camera at a landmark and Google Assistant will provide information about its history, significance, and nearby attractions.

- Language learners can point their camera at a sign or menu and Google Assistant can translate it into their preferred language.

Future Implications and Possibilities

The integration of Google Assistant with Google Lens opens a world of possibilities, transforming the way we interact with information and the world around us. This fusion has the potential to revolutionize how we access information, complete tasks, and even understand our surroundings.

Enhanced Information Access

The combination of Google Assistant’s voice-driven interface and Google Lens’s visual recognition capabilities creates a powerful tool for accessing information. Users can simply point their phone camera at an object, a scene, or a piece of text, and Google Assistant will provide relevant information. Imagine pointing your phone at a historical landmark and having Google Assistant provide a detailed description, historical context, and a list of nearby attractions. This integration could change how we explore our surroundings and learn about the world around us.

The updated Lens support within Google Assistant marks a significant leap forward in how we interact with technology. It’s a powerful combination of visual and voice recognition that empowers us to explore our world with greater ease and understanding. This integration is just the beginning, with future possibilities promising even more innovative ways to utilize these powerful tools.


Standi Techno News
