Google hopes to fix Gemini’s historical image diversity issue within weeks, a move that could influence how AI image generation is built and evaluated. Gemini, Google’s latest AI model, has been criticized over this issue, which has raised concerns about bias in its outputs. A lack of diverse representation in the training data has left Gemini struggling to accurately depict the nuances of different cultures and backgrounds. This has sparked a debate about the ethics of AI development and the need for more inclusive and representative models.

Google’s proposed solution involves diversifying the training data used for Gemini. This includes incorporating a wider range of images that accurately reflect the diversity of the world’s population. By exposing Gemini to a more diverse dataset, Google hopes to mitigate bias and improve its ability to understand and interact with people from different backgrounds. This approach has the potential to create a more inclusive and equitable AI landscape, ensuring that technology benefits everyone.

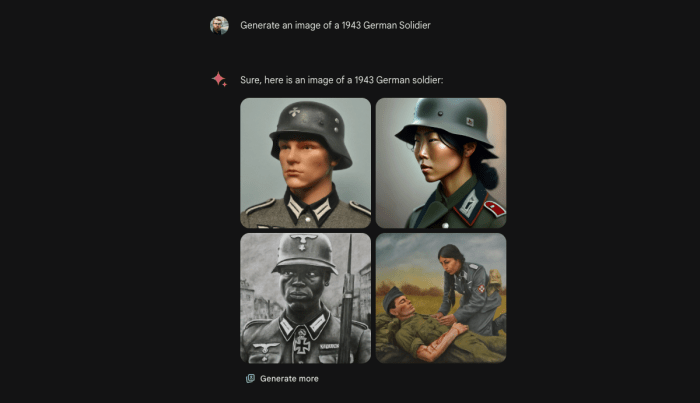

Gemini’s Image Diversity Issue

Gemini, the AI model developed by Google, has drawn criticism over the diversity of the images it produces. The issue stems from a historical lack of representation of diverse communities in the training data used to develop Gemini. This underrepresentation can bias the model’s outputs and limits its ability to accurately represent the world’s diverse population.

Consequences of Underrepresentation

The lack of image diversity in Gemini’s training data has significant consequences. It can lead to biases in the model’s outputs, resulting in skewed perspectives and inaccurate representations of different communities. For example, if Gemini’s training data primarily consists of images featuring individuals from specific ethnicities or socioeconomic backgrounds, it might struggle to accurately represent other groups, potentially reinforcing existing stereotypes or overlooking crucial aspects of their experiences.

Examples of Underrepresentation

Previous interactions with Gemini have highlighted instances of this issue. For example, when asked to generate images related to specific professions, Gemini might predominantly produce images featuring individuals from certain demographics, while underrepresenting others. This could lead to a skewed perception of these professions and limit the model’s ability to accurately portray the diverse individuals who occupy them.
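The kind of skew described above can be checked with a simple audit. The following is a minimal sketch, assuming a set of generated images has already been annotated with a group label and that a reference distribution is available to compare against. The group names, the reference shares, and the `tolerance` threshold are all hypothetical and are not taken from Google or from Gemini.

```python
from collections import Counter


def representation_shares(labels):
    """Return each group's share of the samples as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, reference, tolerance=0.05):
    """List groups whose observed share is below the reference share
    by more than `tolerance` (a hypothetical threshold)."""
    shares = representation_shares(labels)
    return sorted(
        group
        for group, expected in reference.items()
        if expected - shares.get(group, 0.0) > tolerance
    )


# Hypothetical annotations for 10 images generated from one profession prompt.
generated = ["group_a"] * 8 + ["group_b"] * 2
# Hypothetical reference distribution for that profession.
reference = {"group_a": 0.5, "group_b": 0.3, "group_c": 0.2}

print(underrepresented(generated, reference))
```

In this toy example, `group_b` and `group_c` are flagged because their observed shares (0.2 and 0.0) fall short of the reference shares. Choosing the reference distribution is itself a judgment call, and it is the hard part of any real audit.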

Google’s Proposed Solution

Google has announced a plan to address the image diversity issue in Gemini, aiming to improve how the model represents a wider range of individuals, cultures, and backgrounds. The plan combines changes to the training data with techniques for mitigating bias.

Data Augmentation and Bias Mitigation

Google plans to significantly expand the training dataset used for Gemini, focusing on incorporating images that represent diverse demographics, geographic locations, and cultural contexts. This expansion will involve a rigorous process of data curation and annotation, ensuring the accuracy and inclusivity of the dataset. To further mitigate potential biases, Google will implement techniques like adversarial training and fairness-aware algorithms. These techniques aim to identify and address biases present in the training data, leading to a more balanced and representative model.
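The article does not say how Google would implement these techniques. As an illustration of one simple fairness-aware approach (sample reweighting, not adversarial training), the sketch below assigns each training example a weight inversely proportional to the size of its group, so that every group contributes the same total weight to a training loss. The group labels are hypothetical, and this is not presented as Google’s method.

```python
from collections import Counter


def inverse_frequency_weights(groups):
    """Weight each sample so that every group carries equal total weight.

    weight = N / (K * n_g), where N is the number of samples, K the number
    of distinct groups, and n_g the number of samples in the sample's group.
    """
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical group labels for a small, imbalanced training set.
groups = ["group_a"] * 6 + ["group_b"] * 3 + ["group_c"] * 1
weights = inverse_frequency_weights(groups)

# Total weight per group after reweighting.
totals = Counter()
for group, weight in zip(groups, weights):
    totals[group] += weight
print(dict(totals))
```

Each group ends up with the same total weight (one third of the 10 samples here), and the weights still sum to the dataset size. Reweighting only rebalances data that is already present. It cannot make up for groups that are missing from the dataset entirely, which is why the expansion and curation of the dataset described above would still be needed.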

Potential Benefits of the Fix

Addressing the image diversity issue within Gemini holds immense potential for creating a more inclusive and representative AI model. This fix could significantly impact various stakeholders, including users, developers, and researchers, leading to a more equitable and accurate AI experience.

Impact on Users

A diverse and representative Gemini would offer users a more inclusive and engaging experience. By reflecting the real world’s diversity, the model could provide users with a broader range of perspectives, insights, and creative outputs. This could lead to more personalized and relevant experiences, empowering users to explore a wider range of possibilities. For instance, a user seeking information about fashion could encounter a wider array of models and styles, reflecting the diverse beauty standards across different cultures and backgrounds. This increased representation could help users feel more connected to the AI, fostering trust and engagement.

Impact on Developers

For developers, a more diverse Gemini would be a more robust and versatile tool for building AI-powered applications. A model trained on a wider range of data and perspectives could help developers serve a diverse user base more accurately, leading to more inclusive applications in domains such as healthcare, education, and entertainment. For example, developers building AI-powered chatbots on top of Gemini could create conversational experiences that recognize and respect the nuances of different cultures and identities.

Impact on Researchers

Researchers could also benefit from a more diverse Gemini, since a model trained on more representative data is a better instrument for studying human behavior and the complexities of human society. This could support work in fields such as the social sciences, psychology, and anthropology, where researchers could use the model to analyze patterns and trends across cultures and demographics. In turn, this could inform more effective and ethical AI solutions for addressing social issues.

Addressing Gemini’s image diversity issue is a crucial step towards a more equitable and representative AI landscape. By diversifying the training data, Google aims to improve Gemini’s understanding of the world and its ability to interact with people from diverse backgrounds. This move could address concerns about bias in AI and pave the way for more inclusive technology. While challenges remain, Google’s commitment to addressing the issue is a step in the right direction.


Standi Techno News
