Guardrails AI Builds Hub for GenAI Model Mitigations

Imagine a world where AI, especially generative AI (GenAI) models, is not just powerful but also responsible. That is the vision behind the hub Guardrails AI is building to address the risks of uncontrolled GenAI models: a centralized platform for managing and mitigating those risks, intended to help developers and organizations build responsible AI solutions.

The hub acts as a safety net for GenAI models, with features such as model monitoring, bias detection, and risk assessment. It takes a proactive approach to ensuring that AI technology is used ethically and effectively, guarding against bias, misuse, and unintended consequences.

The Rise of Generative AI Models (GenAI)

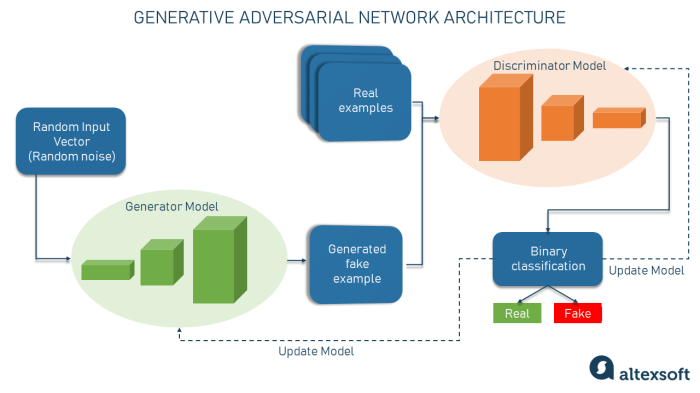

Generative AI models, often referred to as GenAI, are revolutionizing various industries by enabling machines to create new content, such as text, images, audio, and video, that resembles human-generated content. The rapid advancements in artificial intelligence (AI) and the availability of vast amounts of data have fueled the emergence of GenAI models, leading to their widespread adoption across diverse domains.

GenAI models leverage sophisticated algorithms and deep learning techniques to learn patterns and relationships from existing data, enabling them to generate new, original content. These models are trained on massive datasets and can be customized for specific tasks, making them versatile tools for various applications.

Examples of Successful GenAI Implementations

The increasing adoption of GenAI models can be attributed to their successful implementation across various industries, showcasing their transformative potential.

- Content Creation: GenAI models are widely used in content creation, including writing articles, generating social media posts, and composing music. For instance, OpenAI’s GPT-3 can generate human-quality text, while Google’s Magenta project explores the use of AI for music generation.

- Image Generation: GenAI models are employed to create realistic and artistic images. DALL-E 2, developed by OpenAI, and Stable Diffusion, an open-weights model released by Stability AI, both generate images from text prompts.

- Drug Discovery: GenAI models are being used to accelerate drug discovery by generating candidate molecules with desired properties. A related AI milestone is DeepMind’s AlphaFold, which predicts protein structures with high accuracy; it is a structure-prediction model rather than a generative one, but its predictions aid drug development.

- Personalized Marketing: GenAI models are used to personalize marketing campaigns by generating tailored content based on customer preferences and behavior. Companies like Netflix and Amazon have long used AI, mainly recommender systems rather than generative models, to personalize recommendations.

Challenges Associated with Deploying GenAI Models

Despite their promising potential, deploying GenAI models in real-world scenarios presents several challenges.

- Data Bias: GenAI models are trained on large datasets, which may contain biases, leading to the generation of biased or discriminatory content. It is crucial to address data bias and ensure fairness in model outputs.

- Ethical Concerns: The use of GenAI models raises ethical concerns, such as the potential for misuse in creating deepfakes or generating misleading information. Establishing clear guidelines and regulations is essential to mitigate these risks.

- Model Explainability: Understanding how GenAI models make decisions is crucial for ensuring transparency and accountability. However, the complex nature of these models makes it challenging to interpret their outputs.

- Computational Resources: Training and deploying GenAI models require significant computational resources, which can be a barrier for some organizations.

The Need for AI Guardrails

The rise of generative AI models (GenAI) presents incredible opportunities, but it also brings forth a new set of challenges. These powerful models, capable of generating realistic and creative content, can be misused, leading to potential harm. This underscores the urgent need for AI guardrails to ensure responsible development and deployment.

Potential Risks of Uncontrolled GenAI Models

The potential risks associated with uncontrolled GenAI models are multifaceted and require careful consideration. These models can be susceptible to biases, leading to discriminatory outcomes. They can be misused for malicious purposes, generating harmful content or manipulating information. Moreover, the lack of transparency and explainability in their decision-making processes can erode trust and hinder accountability.

Examples of GenAI Model Biases, Ethical Concerns, and Potential Misuse

- Bias in Training Data: GenAI models learn from the data they are trained on. If this data reflects existing societal biases, the models can perpetuate and amplify these biases in their outputs. For example, a language model trained on a dataset with predominantly male authors might generate text that reinforces gender stereotypes.

- Ethical Concerns in Content Generation: GenAI models can be used to create realistic deepfakes, which can be used to spread misinformation or damage reputations. They can also be used to generate harmful content, such as hate speech or propaganda.

- Potential Misuse for Malicious Purposes: GenAI models can be used to create sophisticated phishing attacks or generate fake news articles. They can also be used to automate spam campaigns or generate malicious code.
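The training-data bias example above, a corpus dominated by male authors, is something a team can check before training with a simple representation audit. The sketch below is a minimal illustration that assumes each document carries a clean metadata field (the name `author_gender` and the 2:1 threshold are hypothetical); real corpora rarely have labels this tidy.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def representation_report(records: Iterable[Mapping[str, str]], field: str) -> Dict[str, float]:
    """Return each group's share of the dataset for one metadata field."""
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items()}


def is_imbalanced(shares: Dict[str, float], max_ratio: float = 2.0) -> bool:
    """Flag the dataset if the largest group outnumbers the smallest by more than max_ratio.

    Only groups that actually appear are compared; a group that is missing
    entirely needs a separate check against an expected list of groups.
    """
    if len(shares) < 2:
        return False
    return max(shares.values()) / min(shares.values()) > max_ratio


# Hypothetical corpus: 8 documents by male authors, 2 by female authors.
corpus = [{"author_gender": "male"}] * 8 + [{"author_gender": "female"}] * 2
shares = representation_report(corpus, "author_gender")
print(shares)                 # {'male': 0.8, 'female': 0.2}
print(is_imbalanced(shares))  # True
```

A ratio threshold is only a first-pass signal; it says nothing about how each group is portrayed in the text, which is why output-level bias detection is still needed after training.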

The Critical Role of Guardrails in Mitigating Risks

AI guardrails are essential for mitigating the risks associated with uncontrolled GenAI models. They act as safeguards, ensuring that these models are developed and deployed responsibly. Guardrails can take various forms, including:

- Data Governance and Bias Mitigation: Implementing robust data governance practices to ensure that training data is diverse and representative, with known biases measured and reduced. This includes data auditing, bias detection, and mitigation techniques.

- Ethical Guidelines and Responsible AI Principles: Establishing clear ethical guidelines and principles for the development and deployment of GenAI models. These principles should address issues such as fairness, transparency, accountability, and human oversight.

- Model Validation and Testing: Rigorous model validation and testing to identify and mitigate potential risks, including biases, vulnerabilities, and unintended consequences. This includes adversarial testing and red teaming exercises.

- Transparency and Explainability: Promoting transparency and explainability in the decision-making processes of GenAI models. This includes providing insights into how the models arrive at their outputs and allowing for human oversight and intervention.

- Human Oversight and Control: Implementing mechanisms for human oversight and control, ensuring that GenAI models are used responsibly and ethically. This includes human-in-the-loop systems and mechanisms for de-escalation and intervention in case of potential misuse.
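To make the guardrail forms above more concrete, here is a minimal sketch of an output check: a generated response passes through a chain of validators, and if any check fails the text is withheld and flagged for human review. The validator names, the email-address check, and the length limit are hypothetical illustrations written in plain Python; this is not the Guardrails AI library's API.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Verdict:
    passed: bool
    reason: str = ""


Validator = Callable[[str], Verdict]


def no_email_addresses(text: str) -> Verdict:
    # Hypothetical privacy check: reject outputs that leak email addresses.
    if re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", text):
        return Verdict(False, "contains an email address")
    return Verdict(True)


def max_length(limit: int) -> Validator:
    # Factory that builds a length check for a given character limit.
    def check(text: str) -> Verdict:
        if len(text) > limit:
            return Verdict(False, f"longer than {limit} characters")
        return Verdict(True)
    return check


@dataclass
class GuardedOutput:
    text: Optional[str]
    failures: List[str] = field(default_factory=list)
    needs_human_review: bool = False


def guard(text: str, validators: List[Validator]) -> GuardedOutput:
    """Run every validator; withhold the text and flag it for human review on any failure."""
    verdicts = [check(text) for check in validators]
    failures = [v.reason for v in verdicts if not v.passed]
    if failures:
        return GuardedOutput(text=None, failures=failures, needs_human_review=True)
    return GuardedOutput(text=text)


checks = [no_email_addresses, max_length(200)]
ok = guard("Here is a short, safe answer.", checks)
blocked = guard("Contact jane.doe@example.com for details.", checks)
print(ok.text)                                    # Here is a short, safe answer.
print(blocked.needs_human_review, blocked.failures)  # True ['contains an email address']
```

Running every validator instead of stopping at the first failure gives the human reviewer the full list of reasons, at the cost of a little extra work per response.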

Key Components of the Hub

This section delves into the critical components that make up the GenAI model mitigation hub, providing a comprehensive overview of their functions and the benefits they offer.

Components of the GenAI Model Mitigation Hub

The GenAI model mitigation hub is a multi-faceted tool designed to ensure the responsible and ethical development and deployment of GenAI models. It comprises several key components, each playing a crucial role in mitigating potential risks and promoting trust in AI systems.

| Component | Function | Benefits |
|---|---|---|
| Model Monitoring | Continuously tracks the performance of GenAI models, identifying anomalies, biases, and potential risks in real-time. | Early detection of issues, enabling timely intervention and prevention of negative impacts. |
| Bias Detection | Analyzes GenAI model outputs to identify and quantify biases, ensuring fairness and inclusivity in decision-making. | Reduces discriminatory outcomes, promoting equitable and unbiased AI systems. |
| Risk Assessment | Evaluates the potential risks associated with GenAI models, considering factors such as data privacy, security, and societal impact. | Provides a comprehensive understanding of potential risks, enabling proactive mitigation strategies. |
| Explainability | Provides insights into the decision-making process of GenAI models, making their outputs more transparent and understandable. | Enhances trust and accountability by enabling users to comprehend the reasoning behind model predictions. |
| Data Governance | Ensures the ethical and responsible use of data in GenAI model development and deployment, adhering to privacy regulations and ethical guidelines. | Protects sensitive information, promotes data privacy, and fosters public trust in AI systems. |
| Human Oversight | Integrates human expertise and judgment into the GenAI model development and deployment process, ensuring responsible and ethical decision-making. | Provides a critical layer of control and accountability, preventing potential misuse of AI technology. |
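The Bias Detection row describes quantifying bias in model outputs. One common, simple metric is the demographic parity difference: the gap between the highest and lowest rate at which outputs for each group receive a label of interest (for example, rated negative by a reviewer). The sketch below assumes outputs have already been labeled and grouped; the metric choice and group names are illustrative, not necessarily what the hub computes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rates(labeled: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of outputs carrying the label of interest, per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, is_positive in labeled:
        totals[group] += 1
        positives[group] += int(is_positive)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(labeled: Iterable[Tuple[str, bool]]) -> float:
    """Largest minus smallest per-group rate; 0.0 means every group is labeled at the same rate."""
    rates = positive_rates(labeled)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical audit: 10 completions per group, True if a reviewer rated the completion negative.
samples = (
    [("group_a", True)] * 3 + [("group_a", False)] * 7
    + [("group_b", True)] * 6 + [("group_b", False)] * 4
)
print(positive_rates(samples))                           # {'group_a': 0.3, 'group_b': 0.6}
print(round(demographic_parity_difference(samples), 2))  # 0.3
```

With only ten samples per group a gap like this could easily be noise, so in practice the metric would be paired with a sample-size or significance check before anyone acts on it.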

Workflow of the GenAI Model Mitigation Hub

The GenAI model mitigation hub operates as a continuous cycle, encompassing model training, deployment, and ongoing monitoring. This workflow ensures that GenAI models are developed and deployed responsibly, with built-in safeguards to mitigate potential risks.

The workflow proceeds in five steps:

1. Model Training: The process begins with the training of a GenAI model using carefully curated datasets.

2. Bias Detection and Risk Assessment: Before deployment, the model undergoes rigorous bias detection and risk assessment to identify potential issues.

3. Model Deployment: Once deemed safe and responsible, the model is deployed into a controlled environment for real-world testing and monitoring.

4. Model Monitoring: Continuous monitoring tracks the model’s performance, identifying any emerging biases or risks.

5. Feedback Loop: Any detected issues are fed back into the model training and development process, leading to iterative improvements and refinements.
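Steps 4 and 5 describe continuous monitoring feeding detected issues back into development. A minimal sketch of that idea: a sliding-window monitor tracks what fraction of recent outputs were flagged by upstream checks (for example, bias detection or risk assessment), raises an alert when that rate crosses a threshold, and queues flagged outputs for review before the next training round. The class name, window size, and threshold are hypothetical.

```python
from collections import deque
from typing import Deque, List


class FlagRateMonitor:
    """Sliding-window monitor over a stream of model outputs."""

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        self.threshold = threshold
        # deque(maxlen=...) discards the oldest entry automatically once full.
        self._recent: Deque[bool] = deque(maxlen=window)
        # Flagged outputs are kept here for review before the next training round.
        self.feedback_queue: List[str] = []

    def flag_rate(self) -> float:
        return sum(self._recent) / len(self._recent) if self._recent else 0.0

    def record(self, output: str, flagged: bool) -> bool:
        """Record one output; return True when the windowed flag rate exceeds the threshold."""
        self._recent.append(flagged)
        if flagged:
            self.feedback_queue.append(output)
        # Only alert once the window is full, to avoid noisy alerts from a handful of samples.
        window_full = len(self._recent) == self._recent.maxlen
        return window_full and self.flag_rate() > self.threshold


monitor = FlagRateMonitor(window=10, threshold=0.2)
# Every third output is flagged, so 4 of the first 10 are flagged (rate 0.4).
alerts = [monitor.record(f"output {i}", flagged=(i % 3 == 0)) for i in range(10)]
print(alerts[-1])              # True
print(monitor.feedback_queue)  # ['output 0', 'output 3', 'output 6', 'output 9']
```

Waiting for a full window trades a slower first alert for fewer false alarms; a production monitor would also persist the queue and route alerts to the people responsible for the model.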

Future Directions

The landscape of generative AI is evolving rapidly, with new models and applications emerging constantly. This dynamic environment necessitates a forward-looking approach to AI guardrails, ensuring they remain effective and adaptable. The AI Guardrails Hub is designed to be a living resource, continuously updated to reflect the latest advancements in GenAI model mitigation.

Emerging Trends in GenAI Model Mitigation

The field of GenAI model mitigation is experiencing exciting developments, with researchers and developers exploring innovative approaches to address emerging challenges. Here are some key trends shaping the future of AI guardrails:

- Explainable AI (XAI): Understanding the decision-making processes of GenAI models is crucial for building effective guardrails. XAI techniques are being developed to provide insights into how models arrive at their outputs, enabling better identification and mitigation of biases and unintended consequences.

- Federated Learning: This approach allows AI models to be trained collaboratively without pooling raw data: each participant trains on its own data locally and shares only model updates, enhancing privacy and security. Federated learning is particularly relevant for mitigating risks associated with the use of personal data in GenAI models.

- Adversarial Training: This technique involves exposing GenAI models to adversarial examples—inputs designed to trick them—to improve their robustness and resilience against malicious attacks. Adversarial training helps strengthen guardrails by making models more resistant to manipulation.
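The federated learning trend can be illustrated with federated averaging (FedAvg), a widely used aggregation scheme: each client trains locally and sends only its parameters, and a coordinator averages them, weighting each client by how many training examples it holds. The sketch below represents parameters as plain lists of floats and is an illustration of the general technique, not a description of how the hub implements it; real deployments typically add secure aggregation and run many rounds.

```python
from typing import List, Sequence, Tuple


def federated_average(client_updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """FedAvg-style aggregation: example-weighted mean of client parameter vectors.

    Each entry is (parameters, num_local_examples). Raw training data never
    leaves the clients; only these parameter vectors are shared.
    """
    total_examples = sum(n for _, n in client_updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter shape")
        weight = n / total_examples
        for i, p in enumerate(params):
            averaged[i] += weight * p
    return averaged


# Two hypothetical clients holding 100 and 300 local examples.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```

Weighting by example count keeps a small client from pulling the shared model as hard as a large one, which matches what plain centralized training on the combined data would emphasize.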

Advancements in the AI Guardrails Hub

The AI Guardrails Hub will evolve alongside these trends, incorporating the latest research and best practices in GenAI model mitigation. Key areas of development include:

- Expanded Toolkit: The hub will offer a wider range of tools and resources, including pre-trained models, code libraries, and documentation, to assist developers in implementing effective guardrails.

- Community Engagement: The hub will foster a vibrant community of AI practitioners, researchers, and policymakers to share knowledge, collaborate on solutions, and promote responsible GenAI development.

- Dynamic Content: The hub will be updated regularly with new information, case studies, and best practices, ensuring its relevance and usefulness for navigating the evolving landscape of GenAI.

Future of Responsible GenAI Development

The future of responsible GenAI development hinges on a collaborative effort between researchers, developers, policymakers, and society as a whole. The AI Guardrails Hub plays a vital role in this effort by providing a platform for sharing knowledge, fostering innovation, and promoting ethical considerations. Here’s a vision for the future:

GenAI models are developed and deployed with robust guardrails in place, ensuring their safety, fairness, and transparency. These models are used to address societal challenges, promoting economic growth, improving human well-being, and fostering a more equitable and sustainable future.

The Guardrails AI hub represents a paradigm shift in AI development. By building a robust infrastructure for GenAI model mitigations, we can harness the transformative power of AI while ensuring its responsible and ethical use. This hub is a testament to the growing understanding of the importance of responsible AI, paving the way for a future where AI technology empowers and benefits all.

Guardrails AI is building a hub for GenAI model mitigations, which is pretty cool, right? But let’s be real: even the most advanced tech needs a reality check. Guardrails AI is tackling the tough questions around AI safety and responsibility, and that’s a crucial step toward a future where GenAI models actually benefit humanity.

Standi Techno News
