The Rise of Human-Robot Interaction

The ability of humans to interact seamlessly with robots is rapidly becoming crucial across many fields. From manufacturing and healthcare to space exploration and disaster response, demand for intuitive and efficient human-robot interfaces is steadily increasing. This evolution is driven by the need for robots to perform complex tasks, adapt to changing environments, and collaborate effectively with human operators.

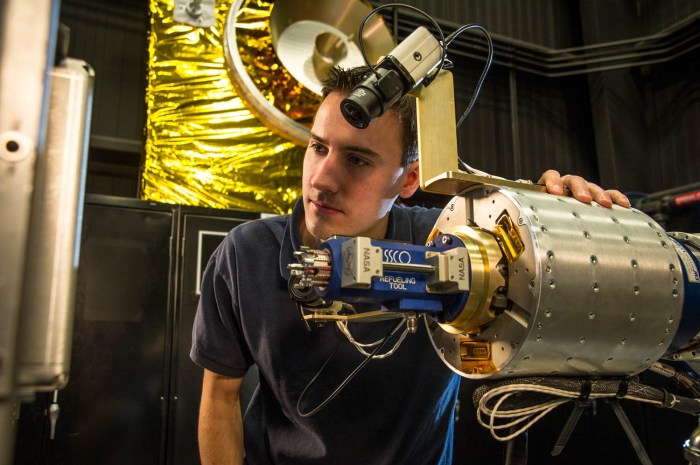

NASA’s groundbreaking work in space exploration has played a pivotal role in advancing robotics and human-machine interfaces. The agency’s missions have required the development of robots capable of operating in extreme and unpredictable environments, prompting advancements in areas like teleoperation, autonomous navigation, and human-robot communication.

Challenges and Opportunities of VR/AR Integration

Integrating virtual reality (VR) and augmented reality (AR) technologies into robotics presents both challenges and exciting opportunities. VR/AR can significantly enhance the user experience by providing immersive and intuitive control interfaces. However, challenges include:

- Latency and Bandwidth: Ensuring real-time communication between the VR/AR system and the robot is crucial for seamless control and feedback. This requires robust network infrastructure and low-latency communication protocols.

- Motion Tracking and Calibration: Accurate tracking of the user’s movements in the VR/AR environment is essential for translating those movements into precise robot actions. This requires advanced motion tracking systems and precise calibration procedures.

- Human Factors and Ergonomics: Designing VR/AR interfaces that are intuitive, comfortable, and safe for extended use is crucial for preventing user fatigue and errors.
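To make the latency concern concrete, the delay between an operator's movement and the robot's response can be estimated by summing the delay of each stage in the pipeline. The sketch below is only an illustration: the stage names and millisecond figures are assumed placeholders, not measurements from any NASA system.

```python
# Illustrative per-stage delays in milliseconds (assumed values, not measurements).
STAGES_MS = {
    "sensor_capture": 33.0,          # roughly one frame at about 30 Hz
    "tracking_and_filtering": 5.0,
    "network_one_way": 20.0,
    "robot_controller": 10.0,
}


def total_latency_ms(stages):
    """Sum the per-stage delays to estimate motion-to-action latency."""
    return sum(stages.values())


def within_budget(stages, budget_ms):
    """Return True when the summed delay fits inside the latency budget."""
    return total_latency_ms(stages) <= budget_ms
```

With numbers like these, a designer can see which stage dominates the total and decide where optimization effort is best spent.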

Despite these challenges, the potential benefits of VR/AR integration in robotics are substantial.

- Enhanced Teleoperation: VR/AR allows for more immersive and intuitive teleoperation, enabling operators to control robots remotely as if they were physically present in the environment.

- Improved Training and Simulation: VR/AR can create realistic simulations of complex robot operations, providing a safe and cost-effective way for operators to train and learn new skills.

- Enhanced Collaboration: VR/AR can facilitate collaboration between human operators and robots by providing shared virtual environments where both can interact and contribute to task completion.

Oculus Rift and Kinect 2

The Oculus Rift and Kinect 2 give operators intuitive, immersive ways to control robots, moving beyond traditional interfaces such as joysticks and keyboards.

Features of Oculus Rift and Kinect 2 for Robotic Control

The Oculus Rift and Kinect 2 offer unique features that make them ideal for controlling robots.

- Oculus Rift:

  - Immersive 3D Visualization: The Oculus Rift provides a fully immersive 3D experience, allowing users to “see” the robot’s environment from the robot’s perspective. This provides a clear understanding of the robot’s surroundings and facilitates better decision-making.

  - Head Tracking and Gestures: The Oculus Rift tracks the user’s head movements, translating them into corresponding robot actions. This allows for intuitive control of the robot’s movement and orientation.

  - Intuitive Interface: The Oculus Rift offers a user-friendly interface, simplifying complex commands and providing a natural and intuitive control experience.

- Kinect 2:

  - Depth Sensing and Skeleton Tracking: The Kinect 2’s depth sensor accurately captures the user’s body movements and translates them into real-time robot control. This allows for precise manipulation of the robot’s limbs and tools.

  - Voice Recognition: The Kinect 2 integrates voice recognition, enabling users to issue verbal commands to the robot, further enhancing the control experience.

  - Object Recognition: The Kinect 2’s object recognition capabilities allow the robot to understand its surroundings and respond accordingly. This enhances the robot’s ability to navigate and interact with objects in its environment.
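As a rough illustration of how head tracking might drive a robot, the sketch below maps head yaw and pitch angles to a pan/tilt command for a robot-mounted camera. The function names and the angle limits are assumptions made for this example and are not part of the Oculus SDK; a real system would read the angles from the headset's tracking API.

```python
def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def head_to_pan_tilt(yaw_deg, pitch_deg, pan_limit=90.0, tilt_limit=45.0):
    """Map head yaw/pitch in degrees to a pan/tilt command.

    The command is clamped so the camera mount is never asked to move
    past its (assumed) mechanical limits.
    """
    pan = clamp(yaw_deg, -pan_limit, pan_limit)
    tilt = clamp(pitch_deg, -tilt_limit, tilt_limit)
    return pan, tilt
```

Clamping is the key design choice here: a person can turn their head further or faster than a camera mount can follow, so the command has to be bounded before it reaches the hardware.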

Comparison of Oculus Rift and Kinect 2 for Robotic Control

Both Oculus Rift and Kinect 2 offer distinct advantages for robotic control, but they also have specific strengths and weaknesses.

- Oculus Rift:

  - Strengths: Provides immersive 3D visualization, head tracking for intuitive control, and a user-friendly interface.

  - Weaknesses: Limited to head movements and gestures, lacks object recognition capabilities, and requires a dedicated headset.

- Kinect 2:

  - Strengths: Offers depth sensing and skeleton tracking for precise control, integrates voice recognition for hands-free commands, and includes object recognition for enhanced situational awareness.

  - Weaknesses: Limited to full-body movements, less immersive than Oculus Rift, and requires a dedicated sensor.

Natural and Immersive Control

The Oculus Rift and Kinect 2 enable more natural and immersive control compared to traditional methods.

- Traditional methods often involve complex interfaces with multiple buttons and controls, requiring significant training and effort to operate. This can lead to errors and hinder the user’s ability to interact effectively with the robot.

- Oculus Rift and Kinect 2 offer a more user-friendly approach. By using natural human movements and gestures, these technologies simplify the control process, which improves the overall user experience and enables more efficient and effective control.
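One way natural arm movement could be turned into a robot command is to compute the angle at the operator's elbow from three tracked joints (shoulder, elbow, wrist) and send that angle to the matching robot joint. The sketch below assumes joint positions arrive as (x, y, z) tuples; `joint_angle_deg` is a hypothetical helper, not a Kinect SDK function.

```python
import math


def joint_angle_deg(a, b, c):
    """Return the angle at point b, in degrees, between segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0.0:
        raise ValueError("joint positions must not coincide")
    # Guard against rounding pushing the cosine slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))
```

For example, a shoulder at (1, 0, 0), an elbow at the origin, and a wrist at (0, 1, 0) describe an arm bent at a right angle.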

Applications of VR/AR in Robotic Control

The integration of virtual reality (VR) and augmented reality (AR) technologies into robotic control systems has opened up new avenues for human-robot interaction, particularly in complex and challenging environments. By leveraging immersive experiences and intuitive interfaces, VR/AR allows operators to interact with robots in a more natural and efficient manner, enabling them to perform tasks that were previously impossible or highly demanding. This section explores the diverse applications of VR/AR in robotic control, showcasing how NASA engineers are harnessing these technologies to advance exploration, disaster response, and manufacturing.

The integration of VR/AR technologies in robotic control offers numerous advantages, particularly in scenarios where direct human intervention is impractical or dangerous. These technologies provide intuitive interfaces, enhanced situational awareness, and remote manipulation capabilities, enabling operators to interact with robots effectively in various environments. To illustrate the diverse applications of VR/AR in robotic control, the following table outlines specific examples of how NASA engineers are utilizing Oculus Rift and Kinect 2 to control robots in space exploration, disaster response, and manufacturing.

| Application | Oculus Rift Use | Kinect 2 Use | Benefits |
|---|---|---|---|
| Space Exploration | Operators can use the Oculus Rift to experience a virtual representation of the Martian landscape, allowing them to navigate and control rovers remotely. This provides a sense of presence and enables them to make informed decisions based on real-time visual feedback. | Kinect 2 can be used to track the operator’s movements and translate them into commands for the rover, enabling intuitive and natural control. It also allows for gesture-based interaction, simplifying complex tasks. | The use of VR/AR in space exploration provides a more immersive and intuitive control experience, enabling operators to make informed decisions based on real-time visual feedback. This enhances situational awareness and reduces the risk of human error. |
| Disaster Response | During disaster response operations, the Oculus Rift can be used to provide operators with a virtual view of the affected area, allowing them to assess the situation and guide rescue robots. This enables them to make informed decisions and deploy robots effectively. | Kinect 2 can be used to track the operator’s movements and translate them into commands for the rescue robots, enabling intuitive and natural control. It also allows for gesture-based interaction, simplifying complex tasks. | VR/AR enhances situational awareness and enables operators to control rescue robots remotely, minimizing the risk to human responders. This technology allows for rapid deployment and effective response to disaster situations. |
| Manufacturing | The Oculus Rift can be used to create virtual representations of complex manufacturing processes, allowing operators to train and simulate tasks before working with real robots. This reduces the risk of errors and improves efficiency. | Kinect 2 can be used to track the operator’s movements and translate them into commands for industrial robots, enabling intuitive and natural control. It also allows for gesture-based interaction, simplifying complex tasks. | VR/AR in manufacturing allows for efficient training, improved safety, and reduced errors. It also enables operators to control industrial robots remotely, minimizing the need for physical presence in hazardous environments. |

Technical Aspects of Integration

Integrating Oculus Rift and Kinect 2 with robotic systems presents unique technical challenges, requiring a careful consideration of hardware and software components, as well as data processing for seamless operation. The following sections delve into the intricacies of this integration.

Hardware Components

The hardware components play a crucial role in establishing a robust and reliable connection between the VR headset, depth sensor, and the robotic system.

- Oculus Rift: The Oculus Rift provides a virtual reality experience, allowing the user to interact with the robot’s environment in a simulated space. It consists of a head-mounted display (HMD) with sensors that track the user’s head movements, enabling a realistic and immersive experience.

- Kinect 2: The Kinect 2 sensor captures depth information and skeletal data, providing real-time information about the user’s body movements and the surrounding environment. It uses an infrared emitter and a time-of-flight camera to generate a depth map, which is crucial for accurate hand gesture recognition and object detection.

- Robot Control System: The robot control system, typically a computer or microcontroller, receives commands from the Oculus Rift and Kinect 2, translating them into actionable instructions for the robot’s actuators. It is responsible for processing the data from the sensors, planning the robot’s movements, and executing them.

- Communication Interface: A reliable communication interface is essential for data exchange between the Oculus Rift, Kinect 2, and the robot control system. It can run over connections such as USB, Ethernet, or Wi-Fi, provided the link delivers data reliably and with low enough latency for control.
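Whatever link carries the data, the commands themselves need an agreed byte layout so the robot control system can decode them. The sketch below uses Python's standard `struct` module to pack and unpack a small command message. The wire format (sequence number, timestamp, pan and tilt angles) is a hypothetical example chosen for illustration, not a format used by any of the devices above.

```python
import struct

# Hypothetical wire format (an assumption for illustration):
# little-endian uint32 sequence number, float64 timestamp in seconds,
# then float32 pan and tilt angles in degrees. 20 bytes in total.
COMMAND_FORMAT = "<Idff"
COMMAND_SIZE = struct.calcsize(COMMAND_FORMAT)


def pack_command(seq, timestamp, pan_deg, tilt_deg):
    """Serialize one command into a fixed-size byte string."""
    return struct.pack(COMMAND_FORMAT, seq, timestamp, pan_deg, tilt_deg)


def unpack_command(payload):
    """Decode a byte string produced by pack_command into a tuple."""
    if len(payload) != COMMAND_SIZE:
        raise ValueError("unexpected payload size")
    return struct.unpack(COMMAND_FORMAT, payload)
```

A sequence number and timestamp let the receiver detect dropped or stale commands, which matters when the link is lossy or slow.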

Software Components

Software components play a crucial role in bridging the gap between the human input from Oculus Rift and Kinect 2 and the robotic control system.

- VR SDK: The VR SDK provides a software interface for developers to access and control the Oculus Rift’s functionalities. It enables the development of applications that leverage the VR headset’s capabilities, including head tracking, rendering, and user interaction.

- Kinect SDK: The Kinect SDK offers a software library for accessing and processing data from the Kinect 2 sensor. It provides tools for capturing depth information, skeletal data, and recognizing gestures, which are essential for intuitive human-robot interaction.

- Robot Control Software: The robot control software interprets the data received from the Oculus Rift and Kinect 2, translating it into commands for the robot’s actuators. It involves algorithms for motion planning, path generation, and real-time control, ensuring smooth and precise robot movements.

- Data Processing and Fusion: Data processing and fusion algorithms are crucial for integrating the information from the Oculus Rift and Kinect 2 into a coherent representation of the user’s intentions. This involves combining data from multiple sensors, filtering noise, and estimating the user’s position and orientation in the robot’s environment.
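Noise filtering, mentioned above, can be as simple as an exponential moving average applied to each tracked value before it is turned into a robot command. The sketch below is one minimal option, not the method used by any particular SDK; the class name and the `alpha` parameter are assumptions for this example.

```python
class ExponentialSmoother:
    """Exponential moving average filter for a single noisy signal."""

    def __init__(self, alpha):
        # alpha near 1 follows the input closely; alpha near 0 smooths heavily.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in the range (0, 1]")
        self.alpha = alpha
        self.value = None

    def update(self, sample):
        """Blend a new sample into the running estimate and return it."""
        if self.value is None:
            self.value = sample  # the first sample initializes the filter
        else:
            self.value = self.alpha * sample + (1.0 - self.alpha) * self.value
        return self.value
```

The trade-off is between jitter and lag: heavier smoothing gives steadier robot motion but adds delay between the operator's movement and the robot's response.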

Sensors and Actuators

Sensors and actuators are the crucial elements that connect the human input with the robot’s physical actions.

- Sensors: Sensors gather information about the robot’s environment and its own state, providing feedback for control algorithms. Examples include:

  - Position Sensors: These sensors, such as encoders or potentiometers, provide information about the robot’s joint positions.

  - Force Sensors: Force sensors measure the forces exerted by the robot on its environment, providing information about contact and interaction.

  - Proximity Sensors: Proximity sensors detect objects in the robot’s vicinity, enabling obstacle avoidance and safe navigation.

- Actuators: Actuators translate control signals into physical movements, enabling the robot to interact with its environment. Examples include:

  - Electric Motors: Electric motors are commonly used to power robot joints, providing precise and controlled movements.

  - Pneumatic Actuators: Pneumatic actuators utilize compressed air to generate force, providing a cost-effective solution for certain applications.

  - Hydraulic Actuators: Hydraulic actuators use hydraulic fluid to generate force, offering high power density and precise control.
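As an example of how a position sensor reading becomes usable information, the sketch below converts raw encoder counts into a joint angle. It assumes an incremental encoder mounted on the motor shaft with a gearbox between motor and joint; the function name and parameters are hypothetical.

```python
def encoder_to_angle_deg(counts, counts_per_rev, gear_ratio=1.0):
    """Convert encoder counts on the motor shaft to a joint angle in degrees.

    counts_per_rev is the number of counts per motor revolution, and
    gear_ratio is the number of motor revolutions per joint revolution.
    """
    if counts_per_rev <= 0 or gear_ratio <= 0:
        raise ValueError("counts_per_rev and gear_ratio must be positive")
    return 360.0 * counts / (counts_per_rev * gear_ratio)
```

With 4096 counts per revolution and no gearing, a reading of 1024 counts corresponds to a quarter turn of the joint.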

Real-Time Control

Real-time control is crucial for achieving seamless and responsive human-robot interaction.

- Data Acquisition and Processing: The Oculus Rift and Kinect 2 continuously provide data about the user’s movements and the environment. This data must be acquired and processed in real-time to ensure timely responses from the robot.

- Control Algorithms: Control algorithms are essential for translating the user’s intentions into precise robot movements. These algorithms must be designed to handle real-time constraints and adapt to changing conditions.

- Feedback Control: Feedback control mechanisms use sensor data to adjust the robot’s movements, ensuring accuracy and stability. This involves continuously monitoring the robot’s state and adjusting control signals based on the feedback received.
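A common way to implement the feedback loop described above is a PID (proportional-integral-derivative) controller. The article does not say which control law is used, so the sketch below is only one standard option; the class name and gains are placeholders.

```python
class PIDController:
    """Minimal PID controller: output = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the control signal for one time step of length dt seconds."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

In use, the setpoint would come from the operator's tracked movement and the measurement from the robot's position sensors, with `update` called once per control cycle.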

Future Directions and Innovations

The integration of VR/AR with robotics represents a significant step towards a future where humans and robots collaborate seamlessly. This technology has the potential to revolutionize various fields, from healthcare and education to entertainment. The continued development of VR/AR technologies, along with advancements in other fields like haptics, artificial intelligence, and machine learning, will further enhance human-robot interaction and unlock new possibilities.

Advancements in VR/AR Technologies

The future of VR/AR technologies holds exciting possibilities for enhancing human-robot interaction. These advancements will enable more intuitive and immersive control of robots, improving their efficiency and effectiveness.

- High-Fidelity Visuals and Haptics: VR/AR systems will offer more realistic and detailed visual representations of the robot’s environment and actions. Advancements in haptics will provide users with tactile feedback, allowing them to “feel” the robot’s movements and interactions, further enhancing the sense of presence and control.

- Improved Tracking and Motion Capture: Advanced motion capture systems will allow users to control robots with greater precision and fluidity. Real-time tracking of user movements and gestures will translate seamlessly into robotic actions, enabling more natural and intuitive control.

- Enhanced Immersive Experiences: VR/AR systems will become more immersive, giving users a stronger sense of being in the robot’s environment. This will enable better situational awareness and facilitate more complex tasks requiring spatial understanding.

Advancements in Haptics, AI, and Machine Learning

The integration of haptics, artificial intelligence, and machine learning with VR/AR technologies will significantly enhance human-robot interaction, enabling more intuitive control, improved safety, and greater autonomy.

- Haptic Feedback: Haptic feedback systems will provide users with tactile sensations, allowing them to “feel” the robot’s actions and interactions. Real-time feedback on the robot’s movements will improve control precision, situational awareness, and safety.

- Artificial Intelligence: AI algorithms will enable robots to learn from user actions and adapt their behavior based on real-time feedback. This will allow robots to perform tasks more efficiently and effectively, while also improving their ability to collaborate with humans.

- Machine Learning: Machine learning will play a crucial role in training robots to perform complex tasks. By analyzing large datasets of human actions and robot performance, machine learning algorithms can optimize robot behavior and enable them to learn new skills autonomously.

Applications Beyond NASA

The applications of VR/AR in robotics extend far beyond NASA’s work. These technologies hold the potential to revolutionize various industries, including healthcare, education, and entertainment.

- Healthcare: VR/AR can be used to train surgeons for complex procedures, allowing them to practice in a simulated environment. This can improve surgical outcomes and reduce risks for patients. VR/AR can also be used to create immersive rehabilitation programs for patients with physical disabilities, helping them regain mobility and independence.

- Education: VR/AR can be used to create interactive and engaging learning experiences for students of all ages. Students can use VR/AR to explore historical sites, learn about different cultures, or dissect virtual organs without the need for physical specimens.

- Entertainment: VR/AR can be used to create immersive and interactive entertainment experiences. Users can explore virtual worlds, interact with characters, and participate in games and simulations that would be impossible in the real world.

The use of Oculus Rift and Kinect 2 for robotic control marks a significant leap forward in human-robot interaction. This technology empowers engineers with intuitive control, fostering a deeper connection between humans and machines. As VR/AR technologies continue to evolve, we can expect even more transformative applications in robotics, revolutionizing industries like healthcare, education, and entertainment. The future of human-robot collaboration is bright, and NASA’s pioneering work with Oculus Rift and Kinect 2 is paving the way for a new era of robotic innovation.

Standi Techno News
