OpenAI GPT-4 Vision Release: Research Flaws Exposed

The release of GPT-4 Vision, a powerful AI system that can understand and interpret images, has sent shockwaves through the tech world. While its potential applications are vast, spanning from medical diagnosis to artistic creation, concerns about its accuracy, bias, and ethical implications are rapidly surfacing. Researchers are questioning the robustness of the technology, highlighting potential flaws in its development and raising serious concerns about its misuse.

The ability of GPT-4 Vision to analyze images and provide insightful interpretations is a significant leap forward in AI. This technology promises to revolutionize various industries, from healthcare to education. However, as with any powerful technology, it comes with its share of risks. Research flaws, potential biases, and ethical concerns surrounding GPT-4 Vision necessitate careful consideration and open dialogue. This article explores the intricacies of this groundbreaking technology, diving into its capabilities, limitations, and the ethical dilemmas it presents.

GPT-4 Vision

GPT-4 Vision (officially "GPT-4 with vision," or GPT-4V) is a revolutionary advancement in the field of artificial intelligence, merging the power of large language models with the ability to understand and interpret visual information. It accepts images alongside text as input and responds in text. This opens up a world of possibilities, enabling AI to engage with the world in a more comprehensive and nuanced way.

Capabilities of GPT-4 Vision

GPT-4 Vision’s capabilities are multifaceted, encompassing a wide range of visual understanding tasks.

- Image Description: GPT-4 Vision can generate detailed descriptions of images, identifying objects, scenes, and the relationships between them.

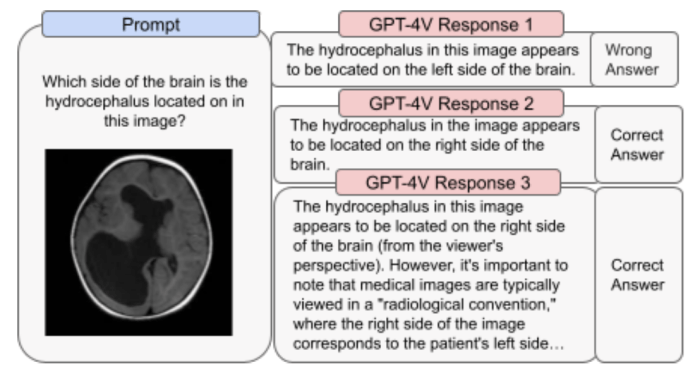

- Visual Question Answering: GPT-4 Vision can answer questions about images, combining what it sees with its broad knowledge base. Questions can range from simple ones, such as “What is the woman holding?” or “Where is the dog located?”, to ones that require combining several details in the scene.

- Image Captioning: GPT-4 Vision can generate concise and informative captions for images, summarizing the key elements and conveying the overall message. This is particularly useful for social media platforms, where images often require captions to provide context and engagement.

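- Object Detection and Recognition: GPT-4 Vision can identify and classify objects within images and describe roughly where they appear, although OpenAI notes that the model struggles with tasks requiring precise spatial localization. This capability has potential applications in fields such as autonomous driving, medical imaging, and security, but those settings demand a level of reliability that has yet to be demonstrated.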

- Visual Reasoning: GPT-4 Vision can go beyond simply understanding images; it can reason about the visual content, drawing inferences and making predictions based on the relationships between objects and scenes. This allows it to understand the context of an image and make informed judgments.
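
For developers, these capabilities are reached through OpenAI's API by sending an image and a text question together in a single chat message. The sketch below uses only the Python standard library and assumes the Chat Completions endpoint and a vision-capable model name (`gpt-4o` here, which is an assumption: the original `gpt-4-vision-preview` model has since been deprecated). The helper names `build_vqa_payload` and `ask_about_image` are hypothetical; check OpenAI's current documentation before relying on any of this.

```python
import base64
import json
import os
import urllib.request

# Assumption: a currently available vision-capable chat model; verify in OpenAI's docs.
MODEL = "gpt-4o"
API_URL = "https://api.openai.com/v1/chat/completions"


def build_vqa_payload(image_bytes: bytes, question: str, mime_type: str = "image/jpeg") -> dict:
    """Package one image and one question as a Chat Completions request body (hypothetical helper)."""
    # Images can be sent inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": MODEL,
        "messages": [
            {
                "role": "user",
                # A single user message can mix text parts and image parts.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


def ask_about_image(image_bytes: bytes, question: str) -> str:
    """Send the request; needs network access and an OPENAI_API_KEY environment variable."""
    body = json.dumps(build_vqa_payload(image_bytes, question)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    # The answer comes back as text: the model reads images but does not produce them.
    return reply["choices"][0]["message"]["content"]
```

Calling `build_vqa_payload(open("photo.jpg", "rb").read(), "What is the woman holding?")` only assembles the request body, which makes it easy to inspect or log before anything is sent.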

Potential Applications of GPT-4 Vision

The capabilities of GPT-4 Vision have far-reaching implications across numerous industries, revolutionizing how we interact with visual information.

- E-commerce: GPT-4 Vision can enhance online shopping experiences by writing detailed product descriptions from photos and suggesting similar items based on visual attributes. It can also assist with product search by interpreting queries that include images. Features such as virtual try-ons would require pairing it with a separate image-generation system, since GPT-4 Vision outputs only text.

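- Healthcare: GPT-4 Vision could help analyze medical images, such as X-rays and MRIs, to assist doctors in diagnosis and treatment planning. However, OpenAI's own guidance states that the model is not suitable for interpreting specialized medical images such as CT scans and should not be used for medical advice, so any clinical use would require rigorous validation. Suggestions that it could aid drug discovery, by identifying potential targets or optimizing drug formulations, remain speculative.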

- Education: GPT-4 Vision can create interactive learning experiences, providing visual explanations for complex concepts and allowing students to explore and understand visual information in a more engaging way. It can also personalize learning by adapting to individual learning styles and needs.

- Accessibility: GPT-4 Vision can improve accessibility for visually impaired individuals by providing descriptions of images and videos, enabling them to experience the world in a more inclusive way.

- Marketing and Advertising: GPT-4 Vision can help target advertising campaigns by analyzing visual content to understand consumer preferences and deliver relevant messages. It can also draft copy for marketing materials based on product images, although producing the images and videos themselves requires separate generative tools.

Limitations of GPT-4 Vision

While GPT-4 Vision offers significant advancements, it is essential to acknowledge its limitations.

- Accuracy: GPT-4 Vision’s performance is dependent on the quality and diversity of the training data. It can struggle with images that are unfamiliar or contain complex visual relationships, leading to inaccuracies in its understanding and interpretation.

- Bias: Like other AI models, GPT-4 Vision can exhibit bias based on the training data it receives. This can lead to biased interpretations and outputs, reinforcing existing societal prejudices and stereotypes.

- Ethical Considerations: The use of GPT-4 Vision raises ethical concerns, such as the potential for misuse in surveillance (for example, identifying people in photos), manipulation, and the spread of misleading content. It is crucial to develop responsible guidelines and regulations for the development and deployment of this technology.

Research Flaws and Concerns

While GPT-4 Vision marks a significant leap in AI capabilities, its development raises concerns about potential research flaws and ethical implications. It’s crucial to understand these issues to ensure responsible and beneficial use of this technology.

Data Bias and Limitations

The training data used for GPT-4 Vision, like that of any large language model, can reflect existing biases and limitations in the real world. This can lead to biased outputs, perpetuating stereotypes and potentially causing harm. For example, if the training data predominantly shows white people in leadership roles, the model might be more likely to describe a white person in an image as a leader, or to describe people from other backgrounds in stereotyped terms. This bias can be exacerbated by the limited availability of diverse datasets, particularly in specific domains like healthcare or law enforcement.
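
A first step toward catching this kind of skew is auditing the training data itself. OpenAI has not published GPT-4 Vision's training data, so the sketch below only illustrates the technique: it assumes a hypothetical dataset of (group, caption) pairs and measures, for each group, how often captions contain a role word such as "leader". A large gap between groups is a warning sign worth investigating, not proof that a model trained on the data is biased. The function names and toy records are hypothetical.

```python
import re
from collections import Counter


def label_rate_by_group(records, label):
    """For each group, return the share of its captions containing `label` (lowercase) as a word."""
    totals = Counter()
    hits = Counter()
    for group, caption in records:
        totals[group] += 1
        # Lowercase and split into words so "Leader." still matches "leader".
        if label in re.findall(r"[a-z]+", caption.lower()):
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in label rate between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical toy captions; the group names are placeholders.
    records = [
        ("group_a", "A leader speaks at a podium."),
        ("group_a", "A leader chairs a meeting."),
        ("group_a", "A person walks a dog."),
        ("group_b", "A person stands at a podium."),
        ("group_b", "A leader chairs a meeting."),
        ("group_b", "A person cooks dinner."),
        ("group_b", "A person reads a book."),
    ]
    rates = label_rate_by_group(records, "leader")
    print(rates, max_rate_gap(rates))
```

The same counting can be repeated for other role words, and for the model's own outputs on a balanced test set, to separate skew in the data from skew in the model.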

Transparency and Explainability

The black box nature of deep learning models, including GPT-4 Vision, poses challenges in understanding how the model arrives at its outputs. This lack of transparency can make it difficult to identify and address biases, as well as to assess the reliability and trustworthiness of the model’s predictions. For example, if GPT-4 Vision produces an incorrect description of an image, it can be difficult to pinpoint the specific training data or internal processes that led to the error.

Misuse and Malicious Intent

GPT-4 Vision produces text rather than images, so it does not create deepfakes itself, but its ability to interpret images in detail still raises concerns about misuse. For instance, it could be used to analyze surveillance imagery or to try to identify individuals in photos, and its confident descriptions could lend false credibility to misinformation. Furthermore, the model’s ability to understand and respond to complex prompts, including text embedded inside images, could be exploited to generate harmful or offensive content.

Ethical Considerations

The development and deployment of GPT-4 Vision raise fundamental ethical questions about the responsible use of AI. For example, concerns exist about the potential for job displacement as AI systems automate tasks previously performed by humans. Additionally, because the model describes images fluently and confidently even when it is wrong, its outputs raise questions about the authenticity of information and the potential for manipulation.

Comparison with Existing Vision Technologies

GPT-4 Vision represents a significant leap in the field of vision AI, offering capabilities that traditional computer vision and image recognition techniques lack. While these existing technologies have proven valuable in specific applications, GPT-4 Vision brings a unique blend of contextual understanding, multi-modal processing, and reasoning abilities that opens up new possibilities.

Strengths and Weaknesses of GPT-4 Vision Compared to Traditional Vision Technologies

This section explores the strengths and weaknesses of GPT-4 Vision in comparison to traditional computer vision and image recognition technologies.

GPT-4 Vision: Strengths

- Contextual Understanding: GPT-4 Vision goes beyond simple object detection to interpret relationships, actions, and emotions within a scene. This contextual understanding suits it to complex tasks like image captioning, visual question answering, and scene analysis. For example, it can often tell a person holding a knife while cooking apart from a person holding a knife in a threatening manner, because it draws on cues from the surrounding scene rather than the object alone.

- Multi-modal Processing: GPT-4 Vision integrates vision with language, enabling it to process information from both images and text in a single prompt. This allows tasks such as multilingual image description, where it describes an image in different languages, or image-based text generation, where it writes stories or summaries from visual input.

- Reasoning and Inference: GPT-4 Vision can draw inferences and make predictions from visual information. This could support applications like anomaly detection, where it flags unusual patterns in images, or predictive maintenance, where it analyzes images of machinery to anticipate potential failures, although both uses would need validation against established domain-specific systems.

GPT-4 Vision: Weaknesses

- Data Requirements: Like other deep learning models, GPT-4 Vision requires extensive training data, which can be expensive and time-consuming to acquire and annotate. This data dependency can limit its applicability in domains where labeled data is scarce.

- Interpretability: The inner workings of GPT-4 Vision can be complex and opaque, making it challenging to understand the reasoning behind its predictions. This lack of interpretability can hinder its adoption in applications where explainability is crucial, such as medical diagnosis or legal proceedings.

- Bias and Fairness: As with any AI system trained on real-world data, GPT-4 Vision is susceptible to biases present in the training data. This can lead to biased predictions and unfair outcomes, highlighting the need for careful consideration of data selection and model training strategies to mitigate potential biases.

Traditional Vision Technologies: Strengths

- Object Detection and Recognition: Traditional computer vision techniques, like convolutional neural networks (CNNs), excel at tasks like object detection and recognition, identifying specific objects within images with high accuracy. This makes them suitable for applications such as autonomous driving, where accurate object identification is crucial for safe navigation.

- Real-time Processing: Many computer vision algorithms are optimized for real-time processing, making them ideal for applications where speed is essential, such as video surveillance or robotics.

- Maturity and Stability: Traditional vision technologies have been extensively researched and developed over decades, resulting in robust and mature solutions with proven reliability.

Traditional Vision Technologies: Weaknesses

- Limited Contextual Understanding: Traditional vision technologies often struggle to understand the context of images, relying on predefined object classes and features. This limitation hinders their ability to interpret complex scenes or understand relationships between objects.

- Lack of Multi-modal Capabilities: Most traditional vision technologies are primarily designed for image-based tasks, lacking the ability to integrate information from other modalities like language. This limits their potential for applications requiring multi-modal understanding.

- Limited Reasoning Abilities: Traditional vision technologies typically lack the reasoning and inference capabilities that are essential for tasks requiring complex decision-making or understanding of relationships between objects and actions.

Synergy and Collaboration between Technologies

GPT-4 Vision and traditional vision technologies can complement each other, creating powerful synergies. For example, GPT-4 Vision can leverage the strengths of traditional vision technologies for tasks like object detection, while adding its own contextual understanding and reasoning capabilities to enhance the overall performance.
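
One way such a hybrid could work is to pass a conventional detector's output to GPT-4 Vision as extra context alongside the image. The sketch below assumes a hypothetical detector that returns labels, confidence scores, and pixel bounding boxes; it keeps the confident detections and turns them into a text block for the prompt. The `Detection` class, the 0.5 threshold, and the prompt wording are illustrative choices, not part of any published system.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by a (hypothetical) conventional detector."""
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels


def detections_to_prompt(detections, question, min_confidence=0.5):
    """Turn confident detections into text context for a multimodal model."""
    # Drop low-confidence boxes and list the most confident objects first.
    kept = sorted(
        (d for d in detections if d.confidence >= min_confidence),
        key=lambda d: d.confidence,
        reverse=True,
    )
    lines = [f"- {d.label} (confidence {d.confidence:.2f}) at box {d.box}" for d in kept]
    if not lines:
        lines = ["- no objects above the confidence threshold"]
    return (
        "Objects reported by a separate object detector:\n"
        + "\n".join(lines)
        + f"\n\nUsing the image and these detections, answer: {question}"
    )


if __name__ == "__main__":
    detections = [
        Detection("car", 0.91, (10, 20, 200, 180)),
        Detection("pedestrian", 0.78, (220, 40, 260, 170)),
        Detection("dog", 0.30, (5, 5, 20, 20)),
    ]
    print(detections_to_prompt(detections, "Is the pedestrian about to cross in front of the car?"))
```

The division of labor mirrors the strengths listed above: the detector supplies fast, precise localization, and the language model is asked only to reason about what those objects mean together.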

“The combination of GPT-4 Vision’s contextual understanding and traditional vision’s object detection capabilities opens up new possibilities for applications like autonomous driving, where understanding the scene and predicting the actions of other vehicles is crucial.”

In the future, we can expect to see more collaboration between these technologies, leading to the development of hybrid systems that combine the best of both worlds. This integration will pave the way for more sophisticated and intelligent vision applications, capable of tackling complex challenges across various domains.

Future Directions and Research Opportunities

GPT-4 Vision represents a significant advancement in the field of computer vision, but there are still numerous areas for further research and development. The focus should be on enhancing the model’s accuracy, efficiency, and robustness to make it more reliable and applicable in diverse real-world scenarios.

Improving Accuracy and Robustness

The accuracy of GPT-4 Vision can be further improved by addressing the challenges of generalization, robustness, and interpretability.

- Data Augmentation and Domain Adaptation: Training GPT-4 Vision on larger and more diverse datasets, including synthetic data, can enhance its ability to generalize to unseen scenarios. Domain adaptation techniques can be employed to improve the model’s performance in specific domains, such as medical imaging or autonomous driving.

- Adversarial Training: Adversarial training involves exposing the model during training to carefully crafted adversarial examples (inputs altered slightly so as to fool the model) and teaching it to handle them correctly. This mainly improves robustness to such deliberate perturbations and may also help with some real-world noise and distortions.

- Interpretability and Explainability: Understanding how GPT-4 Vision makes decisions is crucial for building trust and ensuring responsible use. Research on interpretability methods can help explain the model’s reasoning process, making it easier to identify and mitigate potential biases.
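
Adversarial training can be shown at toy scale. The sketch below uses the Fast Gradient Sign Method (FGSM), one standard way to craft adversarial examples, against a two-feature logistic regression model rather than anything resembling GPT-4 Vision; OpenAI has not published whether or how GPT-4 Vision was adversarially trained. At every epoch it perturbs each training point by `eps` in the direction of the sign of the loss gradient, then takes a gradient step on the clean and perturbed points together. The data, `eps`, learning rate, and epoch count are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, x, y):
    p = min(max(predict_proba(w, b, x), 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: move each feature by eps along the sign of the loss gradient."""
    # For logistic regression, dLoss/dx_i = (p - y) * w_i.
    p = predict_proba(w, b, x)
    return tuple(xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w))


def adversarial_train(data, eps=0.3, lr=0.1, epochs=200):
    """Full-batch gradient descent on clean plus FGSM-perturbed copies of each example."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        # Craft adversarial examples against the current model, then train on both sets.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # dLoss/dz for the log loss
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        n = len(batch)
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, data):
    return sum((predict_proba(w, b, x) >= 0.5) == (y == 1) for x, y in data) / len(data)


if __name__ == "__main__":
    # Toy, linearly separable 2-D data (illustrative only).
    data = [((-2.0, -1.5), 0), ((-1.5, -2.0), 0), ((-2.5, -2.0), 0),
            ((2.0, 1.5), 1), ((1.5, 2.0), 1), ((2.5, 2.0), 1)]
    w, b = adversarial_train(data)
    attacked = [(fgsm(w, b, x, y, 0.3), y) for x, y in data]
    print(accuracy(w, b, data), accuracy(w, b, attacked))
```

Training on the perturbed copies, not merely evaluating against them, is what makes this adversarial training; for large image models the same loop applies, with gradients computed by automatic differentiation instead of by hand.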

The emergence of GPT-4 Vision marks a pivotal moment in the development of AI. While its potential is undeniable, its inherent flaws and ethical implications require careful scrutiny. Moving forward, it is crucial to prioritize research and development that addresses these concerns. By fostering transparency, collaboration, and ethical guidelines, we can harness the power of GPT-4 Vision for the betterment of society while mitigating its potential risks.

Standi Techno News
