OpenAI says it’s building a tool to let content creators opt out of AI training. This move, potentially a first for a major AI developer, could reshape the ethical landscape of AI development and empower creators in a digital world increasingly reliant on AI.

The tool aims to address growing concerns about the use of copyrighted material in AI training datasets. Content creators have expressed anxieties about their work being used without their consent, potentially impacting their intellectual property rights and revenue streams. This opt-out tool represents a significant step towards acknowledging and addressing these concerns.

OpenAI’s New Opt-Out Tool: A Shift in AI Training Practices

OpenAI’s decision to offer content creators an opt-out option for AI training marks a significant shift in the landscape of artificial intelligence development. This move, while seemingly straightforward, has profound implications for both the future of AI and the privacy of content creators.

The Impact on AI Development

OpenAI’s opt-out tool represents a step towards a more ethical and responsible approach to AI training. By giving content creators control over how their work is used, OpenAI acknowledges the importance of respecting intellectual property and data privacy. This move could potentially influence other AI companies to adopt similar practices, setting a new standard for data usage in AI development.

The Impact on Data Privacy

The tool allows content creators to protect their work from being used without their consent in AI training datasets. This is particularly important for creators who may be concerned about the potential misuse of their work or the unintended consequences of their data being used in AI models. For instance, a novelist may not want their work used to train an AI model that generates plagiarized content, or a journalist may not want their reporting used to create AI-generated news articles that spread misinformation.

Benefits and Drawbacks for Content Creators

- Increased Control over Data: The opt-out tool empowers content creators with greater control over their data, allowing them to choose how their work is used in AI development.

- Enhanced Privacy: Content creators can protect their work from being used without their consent, safeguarding their intellectual property and privacy.

- Potential for Increased Revenue: Some creators may choose to opt out of AI training but offer their work for licensing, potentially generating revenue from their creative output.

- Reduced Data for AI Training: Widespread opt-outs could reduce the availability of high-quality data for AI training, which could affect the performance and accuracy of AI models.

- Potential for Legal Challenges: The legality and enforceability of opt-out provisions may be challenged in court, creating uncertainty for both creators and AI developers.

Benefits and Drawbacks for AI Developers

- More Ethical Data Usage: AI developers can demonstrate a commitment to ethical data practices by offering opt-out options to content creators.

- Improved Trust and Transparency: By respecting content creators’ rights, AI developers can build trust and transparency with both users and stakeholders.

- Reduced Legal Risks: AI developers can mitigate potential legal risks associated with data usage by implementing opt-out mechanisms.

- Reduced Availability of Data: The opt-out option could potentially reduce the availability of high-quality data for AI training, which could impact the performance and accuracy of AI models.

- Increased Complexity in Data Management: AI developers may need to implement complex systems to manage opt-out requests and ensure compliance with data privacy regulations.

Understanding the Concerns

Content creators are increasingly voicing concerns about their work being used for AI training without their consent. They worry about the potential for their creations to be exploited, leading to financial loss and diminished creative control. This concern is not unfounded, as AI models are trained on vast datasets that often include copyrighted content without explicit permission.

Ethical and Legal Ramifications of Using Copyrighted Material

The use of copyrighted material for AI training raises complex ethical and legal questions. While AI developers argue that using copyrighted material is necessary for building powerful models, content creators argue that their rights are being violated. The legal landscape is still evolving, but several key concerns emerge:

- Copyright Infringement: Using copyrighted material without permission could constitute copyright infringement, potentially leading to legal action.

- Fair Use Doctrine: The fair use doctrine, which allows limited use of copyrighted material for purposes like criticism, commentary, or parody, is often cited as a justification for using copyrighted content in AI training. However, the application of fair use in this context is highly debated, and there is no clear consensus on whether AI training falls under its scope.

- Moral Rights: Content creators may have moral rights, such as the right to attribution and the right to prevent their work from being used in a way that harms their reputation. These rights can be violated when copyrighted content is used for AI training without permission.

Examples of AI Training Datasets Raising Concerns

Several examples illustrate the concerns surrounding the use of copyrighted content in AI training:

- The LAION-5B dataset, a massive dataset used for training text-to-image models, includes images scraped from the internet without permission. This has led to concerns about copyright infringement and the potential for AI models to generate images that are too similar to copyrighted works.

- The GPT-3 model, developed by OpenAI, was trained on a massive dataset of text and code, including copyrighted works. While OpenAI claims to have taken steps to mitigate copyright concerns, some content creators argue that their work was used without their consent.

- The use of code repositories, such as GitHub, for AI training raises concerns about the potential for AI models to generate code that is too similar to copyrighted code, potentially leading to intellectual property disputes.

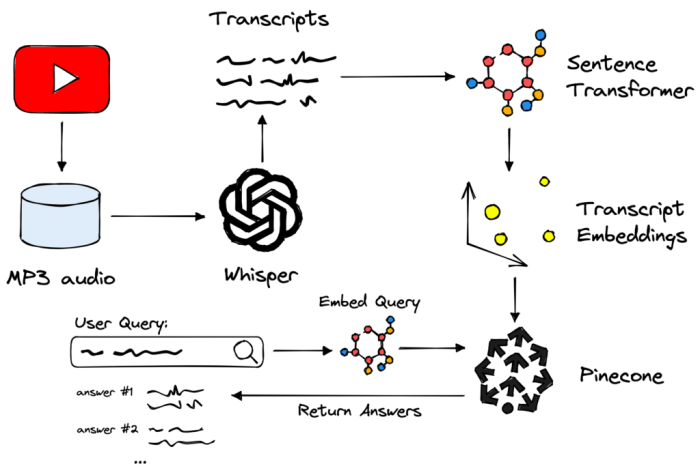

Exploring the Technicalities

OpenAI’s opt-out tool, a significant step towards responsible AI development, allows content creators to control how their work is used for training AI models. Understanding how this tool works is crucial to assessing its effectiveness and the potential impact on the future of AI training.

Technical Mechanisms Behind the Opt-Out Tool

OpenAI’s opt-out tool would likely rely on a combination of technical mechanisms to give content creators control over their work. The process involves identifying and labeling content so that creators can explicitly opt out of its use for AI training. Possible methods include:

- Digital Fingerprinting: This technique assigns unique identifiers to content, allowing OpenAI to identify and track specific pieces of content.

- Metadata Tagging: Content creators can add metadata tags to their work, specifying their opt-out preferences.

- Direct User Interface: OpenAI may provide a dedicated user interface where content creators can explicitly opt out of their content being used for training.
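As a rough illustration of the fingerprinting idea above, the sketch below hashes normalized text and checks it against a registry before a document enters a training corpus. This is not OpenAI’s implementation. The names `fingerprint`, `OptOutRegistry`, and `filter_corpus` are hypothetical, and exact hashing is only one simple way such identifiers could be produced.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest of the text after simple normalization.

    Normalization (collapse whitespace, lowercase) is an assumption made
    for this sketch; a real system would need something far more robust.
    """
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class OptOutRegistry:
    """Hypothetical in-memory registry of fingerprints for opted-out works."""

    def __init__(self) -> None:
        self._opted_out = set()

    def opt_out(self, text: str) -> str:
        """Record a creator's opt-out and return the stored fingerprint."""
        fp = fingerprint(text)
        self._opted_out.add(fp)
        return fp

    def is_opted_out(self, text: str) -> bool:
        """Check whether this exact (normalized) text has been opted out."""
        return fingerprint(text) in self._opted_out

    def filter_corpus(self, documents: list) -> list:
        """Return only the documents that have not been opted out."""
        return [doc for doc in documents if not self.is_opted_out(doc)]


registry = OptOutRegistry()
registry.opt_out("My  Original\nStory")
kept = registry.filter_corpus(["my original story", "Some public text"])
print(kept)
```

Because the hash is exact, this approach only catches copies that match after normalization. Even a one-word edit produces a different fingerprint, which is one reason identification accuracy matters so much for a tool like this.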

Effectiveness of the Opt-Out Tool

The effectiveness of OpenAI’s opt-out tool in preventing unauthorized use of content for AI training depends on several factors:

- Accuracy of Content Identification: The ability to accurately identify and track content is critical for the tool’s effectiveness. If OpenAI cannot reliably identify content, the opt-out process becomes ineffective.

- Compliance and Enforcement: OpenAI needs to enforce the opt-out preferences of content creators. This involves monitoring the use of content and taking action against any violations.

- Scope of the Tool: The effectiveness of the tool depends on the scope of its application. If it only applies to specific types of content or platforms, its impact may be limited.

Limitations and Challenges

Implementing an effective opt-out tool presents several challenges:

- Scalability: The tool needs to be scalable to handle the vast amount of content available online. This requires robust infrastructure and efficient processing capabilities.

- Dynamic Content: The constant flow of new content poses a challenge. The tool needs to adapt to new content and ensure its effectiveness in identifying and tracking it.

- Content Transformation: AI models can learn from content even if it has been transformed or modified. The tool needs to address the challenge of identifying content that has been altered in a way that preserves its original meaning.

- Transparency and Trust: Building trust in the opt-out tool requires transparency from OpenAI. Content creators need to be confident that their preferences are being respected and that the tool is working as intended.
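To make the content transformation challenge more concrete, here is a minimal sketch of near-duplicate detection using word shingles and Jaccard similarity. It is an assumption for illustration only, not a description of how OpenAI’s tool works, and the helper names `shingles` and `jaccard` are hypothetical.

```python
def shingles(text: str, k: int = 3) -> set:
    """Return the set of overlapping k-word sequences in the text."""
    words = text.lower().split()
    if len(words) < k:
        # Short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


original = "the quick brown fox jumps over the lazy dog"
edited = "the quick brown fox leaps over the lazy dog"

# A lightly edited copy still shares many shingles with the original,
# whereas an exact hash of the two strings would not match at all.
print(jaccard(original, edited))
print(jaccard(original, "an entirely unrelated sentence about weather"))
```

A score above some chosen threshold could flag a document as a likely variant of an opted-out work. This only handles surface-level edits, though. A paraphrase that preserves meaning but changes most of the wording would score low, which is why this limitation is hard to solve.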

The Broader Impact

OpenAI’s opt-out tool is more than just a technical feature; it’s a potential game-changer in the ethical and legal landscape of AI development. This tool could set a precedent for other AI companies to follow, shaping the future of AI in profound ways.

Ethical Considerations

This tool directly addresses concerns about data privacy and consent in AI training. By giving content creators the power to opt out, OpenAI acknowledges the importance of respecting individual rights in the context of AI development. This could pave the way for a more ethical approach to AI, where data is used responsibly and with transparency.

Legal Precedents

OpenAI’s move could set a precedent for other AI companies, potentially influencing future legislation and regulations. Governments and regulatory bodies might adopt similar opt-out mechanisms, ensuring that AI development adheres to ethical standards and respects individual rights.

Impact on Training Data

The opt-out tool could significantly impact the availability and quality of AI training data. While some creators might opt out, others might choose to contribute their data, potentially leading to more diverse and representative datasets. This could improve the fairness and accuracy of AI models, reducing bias and promoting inclusivity.

Implications for Content Creators

This opt-out tool marks a significant step towards recognizing the rights of content creators in the age of AI. It empowers them to exert greater control over how their work is used, ensuring their contributions are acknowledged and respected.

Content Creator Rights and Responsibilities

The opt-out tool presents content creators with a powerful mechanism to safeguard their work from unauthorized use in AI training datasets. This aligns with the broader movement advocating for digital rights and the fair treatment of creative output in the digital realm.

- Right to Control Use: The opt-out tool empowers content creators to decide how their work is used. They can choose to allow or prohibit its inclusion in AI training datasets, ensuring their creative vision is not distorted or exploited.

- Right to Attribution: The tool encourages transparency and accountability in AI training. With a say in whether their work is included, content creators are better placed to ask for recognition, rather than having their work used anonymously or without their consent.

- Responsibility for Ethical Use: Content creators also have a responsibility to use the opt-out tool ethically. They should carefully consider the implications of opting out, especially in situations where their work might contribute to the development of beneficial AI applications.

Leveraging the Opt-Out Tool for Protection

Content creators can utilize this tool to protect their work in several ways:

- Active Opt-Out: Proactively opting out of AI training datasets ensures their work is not used without their consent. This can be particularly important for creators whose work is unique or highly recognizable.

- Monitoring and Reporting: Content creators can actively monitor the use of their work in AI training datasets and report any instances of unauthorized use. This can help ensure their rights are respected and their work is not exploited.

- Collaboration and Advocacy: Content creators can collaborate with other creators and organizations to advocate for stronger legal frameworks that protect their rights in the AI era. This includes advocating for legislation that ensures fair compensation for the use of their work in AI training datasets.

Empowering Content Creators in the Digital Landscape

The opt-out tool has the potential to significantly empower content creators in the evolving digital landscape:

- Increased Control: The tool gives creators more control over their work, ensuring it is not used in ways they find objectionable or unethical.

- Enhanced Value: By controlling the use of their work, content creators can enhance its value and potentially negotiate more favorable terms for its use in AI applications.

- Driving Ethical AI Development: The opt-out tool encourages ethical AI development by ensuring that AI models are trained on data that is obtained with the consent of the creators.

The Role of OpenAI

OpenAI’s introduction of an opt-out tool for content creators in AI training signifies a crucial step towards ethical AI development. This initiative is not just about giving creators control over their work, but also about fostering a more responsible and transparent approach to AI training. It demonstrates OpenAI’s commitment to ethical AI development, setting a precedent for other AI companies to follow.

The Significance of OpenAI’s Leadership

OpenAI’s move to offer an opt-out tool for content creators is a significant development in the field of AI ethics. By giving creators control over how their work is used for AI training, OpenAI is acknowledging the importance of consent and fairness in AI development. This sets a positive precedent for other AI companies, encouraging them to adopt similar practices.

The Potential Impact on AI Training

OpenAI’s opt-out tool could significantly affect AI training practices. If the creators who remain in training datasets do so by choice, models could be trained on data obtained with consent, and potentially on more diverse and representative data, although opt-outs could also shrink the pool of available material. The tool could also increase transparency in AI training by giving creators a better understanding of how their work is being used.

The Broader Implications for AI Ethics

OpenAI’s leadership in ethical AI development extends beyond the opt-out tool. The company’s commitment to transparency and responsible AI development is evident in its research and public discourse. OpenAI’s efforts to shape the ethical landscape of AI have a far-reaching impact, influencing the development of AI policies and regulations globally.

OpenAI’s opt-out tool is a testament to the growing awareness of ethical considerations in AI development. It signifies a shift towards a more collaborative and transparent approach to AI training, where creators have a say in how their work is used. While challenges remain, this initiative sets a precedent for other AI companies to follow, potentially paving the way for a future where AI development is both innovative and responsible.

OpenAI’s move to give creators control over their work for AI training is a step in the right direction, especially considering the ongoing debate about copyright and ownership in the digital age.

Ultimately, it’s about finding the balance between innovation and respecting the creators who fuel it.

Standi Techno News
