Opera will now allow users to download and use LLMs locally

Opera, the web browser known for its innovative features, now lets users download and use large language models (LLMs) locally. The move could change how people use AI while browsing the web.

Imagine having access to the power of an AI chatbot right on your device, without relying on internet connectivity. This could mean generating creative content, translating languages, and even writing code, all while keeping your data private and under your control.

Opera’s New Feature: Local LLM Use

Opera now lets users download and run large language models (LLMs) locally. The feature changes where AI processing happens, which brings a range of benefits and also some challenges.

The Significance of Local LLM Use

Opera’s new feature lets users download LLMs and run them directly on their devices. This is a significant departure from the usual cloud-based approach, in which remote servers process each request. Running the model on the device gives users greater control and flexibility.

Benefits of Local LLM Use

The ability to use LLMs locally offers several potential benefits for users.

- Enhanced Privacy: Local LLM use eliminates the need to send sensitive data to remote servers, ensuring greater privacy and control over personal information. By keeping data local, users can be more confident that their information is not being accessed or used by third parties.

- Offline Access: With local LLMs, users can access AI capabilities even without an internet connection. This is particularly valuable in situations where internet access is limited or unreliable, such as during travel or in remote areas.

- Customization: Local LLMs can be customized to suit individual preferences and needs. Users can fine-tune the models to generate specific types of outputs or to better understand their unique language patterns.

Challenges of Local LLM Use

While the benefits of local LLM use are enticing, there are also potential challenges that need to be addressed.

- Computational Power and Storage: LLMs are computationally intensive, requiring significant processing power and storage space. Local use may strain the resources of older or less powerful devices.

- Model Updates: Keeping local LLMs updated with the latest advancements can be a challenge, as new models and improvements are constantly being released. Users will need to ensure they have access to the most recent versions to maintain optimal performance.

- Security: Local LLM use introduces new security considerations. Users need to be cautious about the source of the models they download and ensure they are from trusted providers to mitigate the risk of malware or malicious code.
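
One way to act on the advice about trusted sources is to check a downloaded model file against a checksum published by its provider. The Python sketch below is illustrative only: the function names, the file path, and the assumption that the provider publishes a SHA-256 checksum are hypothetical and are not part of Opera’s feature as described here.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in 1 MiB chunks
    so that multi-gigabyte model files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_trusted_download(path: Path, expected_sha256: str) -> bool:
    """Compare the file's digest with a checksum from the provider.

    `expected_sha256` is assumed to come from a source you trust,
    for example the provider's official download page.
    """
    return sha256_of_file(path) == expected_sha256.strip().lower()
```

A matching checksum only shows that the file is the one the provider published. It does not prove that the provider itself is trustworthy.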

LLM Technology and Opera’s Integration

Opera’s latest update allows users to download and use large language models (LLMs) locally, bringing the power of AI directly to their browsers. This integration represents a significant step forward in the evolution of web browsing, potentially transforming how we interact with the internet.

LLM Technology Explained

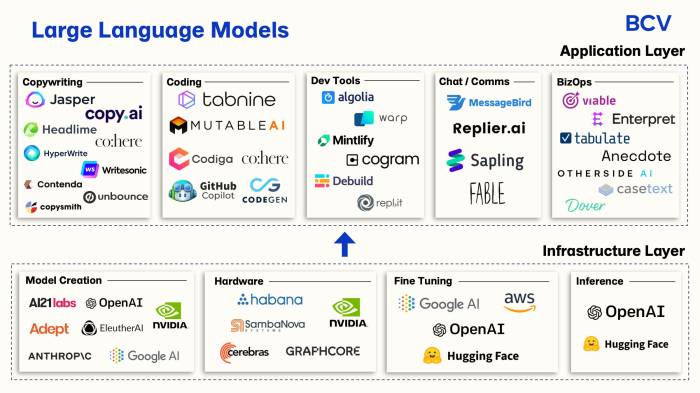

LLMs are a type of artificial intelligence (AI) that excels at understanding and generating human-like text. Trained on massive datasets of text and code, these models can perform a wide range of tasks, including:

- Summarizing large amounts of text.

- Translating languages.

- Generating creative content, such as poems, stories, and scripts.

- Answering questions and providing information.

- Writing different types of text, such as emails, articles, and social media posts.

While LLMs possess impressive capabilities, it’s important to recognize their limitations. These models are not perfect and can sometimes generate incorrect or misleading information. They are also susceptible to biases present in the data they were trained on.

Opera’s Integration of LLMs

Opera’s integration of LLMs allows users to download and run these models directly on their devices, eliminating the need for constant internet connectivity. This approach offers several advantages:

- Privacy: Users can keep their data private, as it is not being sent to a remote server for processing.

- Offline access: Users can access LLM functionality even when they are not connected to the internet.

- Speed: Processing occurs locally, so requests avoid a network round trip. Actual response times depend on the device’s hardware and the size of the model.

- Customization: Users can choose which LLM to download and use, allowing for tailored experiences.

Impact on Web Browsing

Opera’s integration of LLMs could revolutionize web browsing, creating a more personalized, interactive, and intelligent experience. Users can expect to see advancements in:

- Search: LLMs can provide more relevant and comprehensive search results, understanding user intent and context.

- Content creation: Users can leverage LLMs to generate high-quality content, such as blog posts, articles, and social media updates.

- Translation: LLMs can provide real-time translation, breaking down language barriers and facilitating global communication.

- Personalization: LLMs can personalize web browsing experiences, recommending relevant content and adjusting settings based on user preferences.

Privacy and Security Considerations

Running large language models (LLMs) locally presents both opportunities and challenges, particularly when it comes to privacy and security. While the ability to process data without relying on cloud services offers potential benefits, it also introduces new vulnerabilities that need careful consideration.

Potential Risks of Local LLM Use

Storing and using LLMs locally raises several privacy and security concerns. These include the risk of data breaches, misuse of sensitive information, and the potential for malicious actors to exploit vulnerabilities in the LLM itself.

- Data Breaches: If an attacker gains access to a device running a local LLM, they could potentially steal data stored alongside it, such as prompts, conversation history, or documents used for training or inference. This data could include personal information, proprietary business secrets, or other confidential materials.

- Misuse of Sensitive Information: LLMs trained on sensitive data could be used for malicious purposes, such as generating fake news, spreading misinformation, or creating deepfakes.

- LLM Vulnerability Exploitation: Attackers could exploit vulnerabilities in the LLM itself to gain unauthorized access to data, manipulate its behavior, or inject malicious code.

Opera’s Approach to Privacy and Security

Opera is actively addressing these concerns by implementing several measures to enhance the privacy and security of its LLM integration.

- Data Encryption: Opera uses strong encryption to protect data stored and processed locally. This is meant to keep sensitive data unreadable even if an attacker gains access to the device.

- Sandboxing: Opera isolates LLMs within a secure sandbox environment, limiting their access to system resources and preventing them from interacting with other applications or accessing sensitive data outside the sandbox.

- Regular Security Updates: Opera provides regular security updates to address vulnerabilities and improve the overall security posture of its LLM integration.

- Privacy-Focused Design: Opera prioritizes user privacy by implementing features that allow users to control the data used for training and inference. This ensures users have transparency and control over their data.

User Experience and Functionality

Opera’s integration of local LLM use offers a seamless and user-friendly experience, empowering users to harness the power of LLMs directly within their browser. This feature opens up a world of possibilities for content creation, language translation, code generation, and more.

Downloading and Using LLMs

Opera’s intuitive interface simplifies the process of downloading and using LLMs. Users can easily access a curated library of pre-trained LLMs, categorized by their specific functionalities. The download process is streamlined, with clear instructions and progress indicators. Once downloaded, LLMs are readily accessible through a dedicated panel within Opera, allowing users to interact with them effortlessly.

LLM Functionalities and Capabilities

LLMs within Opera offer a wide range of functionalities, empowering users to tackle diverse tasks:

- Content Creation: LLMs can assist in generating creative content, such as writing articles, poems, scripts, and marketing copy. Users can provide prompts and instructions to guide the LLM’s output, tailoring the content to their specific needs.

- Language Translation: LLMs can translate text between multiple languages with high accuracy. This feature is invaluable for communication, research, and global collaboration.

- Code Generation: LLMs can assist in generating code in various programming languages, making it easier for developers to write and debug code. Users can provide code snippets or descriptions of their desired functionality, and the LLM will generate corresponding code.

- Summarization and Analysis: LLMs can summarize lengthy texts, extract key insights, and analyze data to provide concise and actionable information. This functionality is useful for research, decision-making, and information processing.

- Conversation and Chatbots: LLMs can power conversational agents, providing engaging and informative interactions with users. This feature is applicable to customer service, education, and entertainment.

Examples of LLM Use Cases

- Content Creation: A blogger can use an LLM to generate creative ideas for blog posts or to write drafts for articles.

- Language Translation: A traveler can use an LLM to translate menus, signs, and conversations in a foreign language.

- Code Generation: A developer can use an LLM to generate code for a specific task, such as creating a web form or connecting to a database.

- Summarization and Analysis: A researcher can use an LLM to summarize a lengthy research paper or to analyze data for insights.

- Conversation and Chatbots: A company can use an LLM to power a chatbot for customer service, providing 24/7 support and resolving queries.

Potential Applications and Use Cases

Opera’s integration of local LLMs opens up a world of possibilities, empowering users with the ability to leverage the power of AI directly within their browser. This groundbreaking feature has the potential to revolutionize how we interact with the web and access information.

Businesses and individuals can benefit from this technology in numerous ways, ranging from streamlining customer service to enhancing research and education.

Customer Service

Local LLMs can significantly enhance customer service interactions by providing real-time assistance and personalized responses.

- Imagine a customer service chatbot powered by a local LLM that can understand complex queries, provide accurate answers, and even suggest relevant solutions based on the user’s browsing history and preferences. This would allow businesses to offer 24/7 support, reduce wait times, and improve customer satisfaction.

- LLMs can also be used to analyze customer feedback and identify recurring issues, enabling businesses to proactively address concerns and improve their products and services.

Research and Education

Local LLMs can revolutionize the way we conduct research and learn by providing instant access to information and insights.

- Students can use local LLMs to summarize complex texts, generate study guides, and even get personalized explanations of difficult concepts. This can enhance their understanding and improve their learning outcomes.

- Researchers can leverage local LLMs to analyze large datasets, identify trends, and generate hypotheses, accelerating the pace of scientific discovery.

Other Use Cases

- Local LLMs can be used to create personalized content, such as blog posts, articles, and even creative writing. This can empower individuals to express themselves more effectively and reach wider audiences.

- The ability to run LLMs locally can also be used for privacy-sensitive tasks, such as generating personalized recommendations or analyzing personal data without sharing it with external servers.

- Local LLMs can also be used for translation, language learning, and even coding assistance. This can empower users to communicate more effectively across language barriers and improve their coding skills.

Comparison to Other Browsers

Opera’s approach to integrating LLMs stands out in the browser landscape, offering a unique proposition compared to dominant players like Chrome and Firefox. While these browsers are exploring LLM integration, Opera’s approach prioritizes local processing, granting users greater control and potentially enhancing privacy.

The comparison highlights the distinct strategies employed by different browsers in navigating the evolving world of LLMs. This section will explore the advantages and disadvantages of Opera’s approach, its potential impact on the browser market, and the broader implications for the future of web browsing.

User Experience and Functionality

Opera’s approach to local LLM processing offers a distinct user experience compared to Chrome and Firefox. While these browsers rely primarily on cloud-based LLM services, Opera’s approach empowers users with greater control over their data and processing.

- Offline Access: Opera’s local LLM integration enables users to access and utilize LLMs even without an internet connection. This provides a significant advantage in situations with limited or unreliable network access. In contrast, cloud-based LLM services require constant internet connectivity, limiting functionality in offline scenarios.

- Privacy Enhancement: By processing data locally, Opera’s approach potentially minimizes the amount of personal information sent to external servers. This can be particularly valuable for users concerned about data privacy and security. While Chrome and Firefox are implementing privacy-focused features, their reliance on cloud-based LLMs introduces potential vulnerabilities for data breaches or misuse.

- Faster Response Times: Local processing can potentially lead to faster response times compared to cloud-based LLMs, which involve data transfer and processing on remote servers. This can enhance the user experience, especially for tasks requiring quick and efficient responses.
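
Whether local processing is actually faster depends on the device and on the length of the reply. A rough way to reason about it is to add any network delay to the time spent generating text. The Python sketch below uses made-up numbers purely for illustration. They are assumptions, not measurements of Opera or of any cloud service, and the function name is hypothetical.

```python
def response_time_s(tokens: int, tokens_per_second: float,
                    network_round_trip_s: float = 0.0) -> float:
    """Estimate the time to produce a full reply:
    network overhead plus generation time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return network_round_trip_s + tokens / tokens_per_second


# Assumed figures: a laptop generating 40 tokens/s with no network delay,
# and a cloud service generating 100 tokens/s behind a 0.5 s round trip.
short_local = response_time_s(tokens=20, tokens_per_second=40.0)
short_cloud = response_time_s(tokens=20, tokens_per_second=100.0,
                              network_round_trip_s=0.5)
long_local = response_time_s(tokens=400, tokens_per_second=40.0)
long_cloud = response_time_s(tokens=400, tokens_per_second=100.0,
                             network_round_trip_s=0.5)

print(f"short reply: local {short_local:.1f} s, cloud {short_cloud:.1f} s")
print(f"long reply:  local {long_local:.1f} s, cloud {long_cloud:.1f} s")
```

Under these assumed numbers the local model wins on the short reply, where network delay dominates, while the faster cloud hardware wins on the long one. This is why the speed advantage is best described as potential rather than guaranteed.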

Security Considerations

Opera’s approach to local LLM integration presents both advantages and disadvantages in terms of security. While local processing can potentially reduce the risk of data breaches associated with cloud-based services, it also introduces new challenges.

- Vulnerability to Local Attacks: Local LLM processing makes the system susceptible to attacks targeting the user’s device. Malicious actors could exploit vulnerabilities in the local LLM software to gain access to sensitive data or compromise the system. This contrasts with cloud-based LLMs, where security is primarily the responsibility of the service provider.

- Data Leakage: While Opera’s approach aims to enhance privacy, there’s a potential risk of data leakage through vulnerabilities in the local LLM software or the user’s device. This highlights the importance of robust security measures and regular updates to mitigate potential risks.

Potential Impact on the Browser Market

Opera’s innovative approach to LLM integration could have a significant impact on the browser market, potentially challenging the dominance of Chrome and Firefox.

- Attracting Privacy-Conscious Users: Opera’s focus on local processing and data privacy could attract users concerned about the security and transparency of cloud-based LLM services. This could lead to increased market share for Opera, especially among users seeking greater control over their data.

- Driving Innovation: Opera’s approach could stimulate innovation in the browser market, encouraging other developers to explore alternative LLM integration strategies. This could lead to a more diverse and competitive browser landscape, benefiting users with a wider range of choices.

- Increased Adoption of LLMs: Opera’s feature could accelerate the adoption of LLMs by making them more accessible and user-friendly. By offering a seamless and secure integration, Opera could lower the barrier to entry for users unfamiliar with LLMs, driving wider adoption and exploration of their potential.

Future Directions and Development

Opera’s integration of local LLM functionality opens up a world of possibilities for the future of web browsing and online interactions. This feature represents a significant step towards a more personalized and interactive web experience, and its potential for further development is vast.

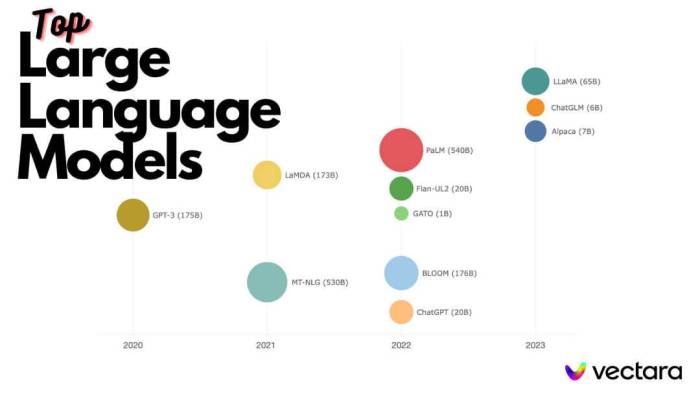

Integration of More Advanced LLM Models

The current implementation of local LLM functionality in Opera allows users to download and use smaller, less resource-intensive models. However, as LLM technology continues to evolve, it is likely that Opera will integrate more advanced and powerful models. This will allow users to access a wider range of capabilities and functionalities, including:

- Enhanced language understanding and generation: Larger and more sophisticated models will be able to comprehend and generate more nuanced and complex text, leading to more accurate and engaging interactions.

- Advanced code generation and debugging: LLMs can be trained to generate and debug code in various programming languages, making it easier for developers to write and maintain software.

- Improved translation and summarization: Larger models will be able to provide more accurate and natural-sounding translations and summaries of complex documents and web content.

- Personalized content creation: LLMs can be used to create personalized content tailored to individual users’ interests and preferences.
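
The trade-off between model size and device resources can be made concrete with a back-of-the-envelope estimate: the weights of a model take roughly the number of parameters multiplied by the bytes stored per parameter. The Python sketch below assumes a hypothetical 7-billion-parameter model and common precisions. It ignores runtime overhead such as the context cache, and it does not describe any specific model offered in Opera.

```python
def estimate_model_size_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in gigabytes (10**9 bytes)."""
    bytes_total = num_parameters * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model at three precisions.
    for bits in (16, 8, 4):
        size = estimate_model_size_gb(7e9, bits)
        print(f"7B parameters at {bits}-bit: about {size:.1f} GB")
```

By this estimate the same model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit precision, which helps explain why smaller or more heavily compressed models are the practical choice on consumer devices.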

Expansion of Functionalities

Opera’s local LLM functionality can be expanded beyond its current capabilities, offering users a more versatile and interactive web experience. This could involve:

- Real-time content generation: LLMs could be used to generate real-time content, such as news articles, blog posts, and social media updates, based on user input or external data sources.

- Interactive web applications: LLMs could be used to create interactive web applications that let users interact with content in more dynamic ways.

- Enhanced search and discovery: LLMs could be used to improve search results by understanding user intent and providing more relevant and personalized recommendations.

- Personalized learning and education: LLMs could be used to create personalized learning experiences that adapt to individual user needs and learning styles.

Impact on Web Browsing and Online Interactions

The integration of local LLM functionality in Opera has the potential to significantly impact the future of web browsing and online interactions. This could lead to:

- A more personalized and interactive web experience: Users will be able to interact with websites and web applications in more natural and intuitive ways, leading to a more personalized and engaging experience.

- Increased accessibility and inclusivity: LLMs can be used to make the web more accessible to people with disabilities, such as by providing text-to-speech and speech-to-text functionalities.

- Enhanced productivity and efficiency: LLMs can be used to automate tasks and streamline workflows, making users more productive and efficient in their online activities.

- New opportunities for creativity and innovation: LLMs can be used to create new forms of online content and experiences, leading to a more vibrant and innovative web.

Opera’s local LLM feature is a game-changer. It empowers users with offline access to powerful AI tools, while also prioritizing privacy and security. This development could significantly impact the future of web browsing, potentially leading to more personalized and efficient online experiences.

Opera’s new feature lets you download and use LLMs locally, giving you more control over your data and privacy. This move comes at a time when data privacy is a hot topic, with the recent ruling by the Court of Justice of the European Union (CJEU) on the IAB’s Transparency and Consent Framework (TCF) highlighting the need for more transparency and user control over data collection. By offering local LLM access, Opera is putting that control back in the hands of users, who can interact with these AI models without relying on cloud-based services.

Standi Techno News
