Posts containing misinformation on X will become ineligible for revenue share, says Musk, a move that has drawn wide attention across social media. The policy, which aims to combat the spread of false information, has ignited a debate about the role of platforms in regulating content and the potential impact on creators who rely on revenue share. It raises crucial questions about the definition of misinformation, the effectiveness of content moderation, and the future of revenue share models in the digital age.

The policy has been met with mixed reactions. Some creators applaud the effort to curb misinformation, arguing that it will create a more trustworthy and reliable platform. Others, however, express concerns about the potential for censorship and the difficulty of objectively defining misinformation. The policy also raises questions about the feasibility of implementing such a system on a large scale, given the vast amount of content generated on X and the challenges of identifying and verifying information.

Elon Musk’s recent announcement that creators who spread misinformation on X (formerly Twitter) will be ineligible for revenue share has sparked a heated debate about the future of content moderation on the platform. While the policy aims to curb the spread of false information, it raises concerns about the potential impact on creators, particularly those who rely on revenue share for their livelihoods.

The policy could have a significant impact on creators who depend on revenue share for their income. Creators who produce educational content or news commentary may be particularly vulnerable, as they could spread misinformation inadvertently, for example by repeating a claim that is later shown to be false.

Examples of Misinformation Handling on Twitter

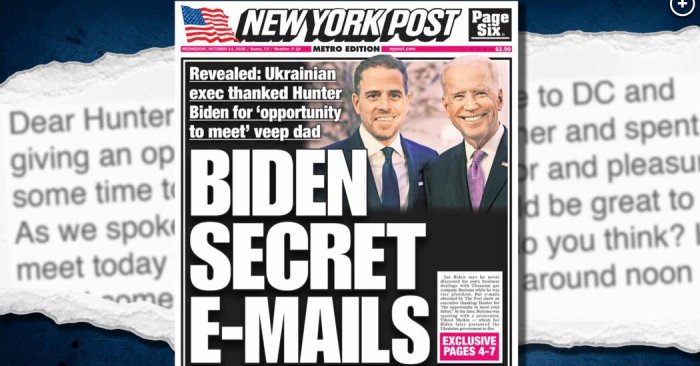

The handling of misinformation on Twitter has been a contentious issue for years. The platform has faced criticism for its slow response to the spread of false information, particularly during high-profile events like elections. For example, during the 2020 US presidential election, Twitter faced scrutiny for its handling of misinformation related to the election process and results.

Comparison to Other Platforms

Musk’s policy aligns with content moderation practices on other social media platforms such as Facebook and YouTube, which have implemented their own policies to limit the spread of false information. However, how the policy will be implemented and how it will affect creators remain to be seen.

The Definition of Misinformation

Defining misinformation can be tricky, as the line between it and other forms of content is often blurred. It covers more than plainly incorrect information; it also includes information that is intentionally misleading or presented in a way that deceives the audience.

This policy’s impact on the spread of information, both accurate and inaccurate, is a complex topic. It could potentially curb the spread of misinformation, but it also raises concerns about censorship and the potential for suppressing legitimate dissent or differing opinions.

Examples of Misinformation

Here are some examples of content that could be considered misinformation under this policy:

- Fabricated news stories: These stories are completely made up and presented as factual news, often with the intention of swaying public opinion or causing harm.

- Manipulated images and videos: Images and videos can be altered to create a false impression, often using sophisticated editing techniques.

- Misleading headlines and captions: Headlines and captions can be used to present information in a way that is misleading or sensationalized, even if the underlying content is accurate.

- Out-of-context information: Information taken out of context can be used to create a false narrative or support a particular viewpoint.

The Role of Content Moderation

Content moderation plays a crucial role in enforcing Musk’s policy on misinformation and revenue share. It involves the identification, review, and potential removal of content that violates the platform’s guidelines. However, this task is complex and presents significant challenges.

Identifying misinformation accurately and consistently is a major hurdle for content moderators. The nature of misinformation is multifaceted, ranging from outright fabrications to misleading interpretations of facts. Additionally, the rapid evolution of information online, including the spread of manipulated media and deepfakes, further complicates the process.

- Subjectivity and Context: Determining what constitutes misinformation can be subjective, as the truthfulness of a claim often depends on context and interpretation. For example, a statement might be factually accurate but presented in a misleading way to create a false narrative.

- Cultural and Linguistic Nuances: Misinformation can be disseminated across diverse cultures and languages, making it challenging for moderators to understand the nuances and potential for misinterpretation.

- Rapid Evolution of Information: The constant flow of new information and the emergence of novel forms of misinformation, such as deepfakes, require continuous adaptation and training for moderators.

Potential Biases in Content Moderation

Content moderation processes can be susceptible to biases, potentially leading to the disproportionate removal of content from certain groups or perspectives.

- Algorithmic Bias: The algorithms used to identify misinformation can be biased based on the training data used to develop them. For example, an algorithm trained on a dataset primarily composed of Western news sources might struggle to accurately identify misinformation in content from other regions or cultures.

- Human Bias: Content moderators, like any human, can be influenced by their own personal beliefs and experiences. This can lead to biased decisions regarding content removal, particularly when dealing with sensitive or controversial topics.

Impact on the Relationship Between Content Creators and Platform Moderators

Musk’s policy could potentially strain the relationship between content creators and platform moderators. Content creators might perceive the policy as an infringement on their freedom of expression, particularly if they disagree with the platform’s definition of misinformation. This could lead to increased friction and mistrust between creators and moderators, potentially impacting the overall platform ecosystem.

Musk’s policy change regarding misinformation and revenue share has significant implications for the future of social media platforms. This move signifies a shift in the way content creators are incentivized, potentially impacting the entire landscape of content creation and platform monetization.

The Impact on Content Creators

This policy creates a direct link between content quality and financial rewards. Content creators who prioritize accuracy and reliability will be more likely to benefit from revenue share, while those who spread misinformation may face financial repercussions. This could lead to a more informed and trustworthy online environment.

Alternative Approaches to Content Incentive

While revenue share tied to accuracy is a novel approach, other strategies can also be employed to encourage high-quality content and discourage misinformation. These include:

- Community-Based Fact-Checking: Empowering users to flag and report misinformation, with verification mechanisms in place to ensure accuracy.

- Content Quality Ratings: Implementing a system where users can rate the quality and reliability of content, with higher ratings leading to greater visibility and potential monetization.

- Algorithmic Prioritization: Prioritizing content from verified sources and those with a track record of accuracy in platform algorithms.

A Hypothetical System for Rewarding Accurate Content

One potential system for rewarding accurate content could involve a combination of user engagement and independent fact-checking. Content creators would receive a base revenue share based on user interactions, such as likes, shares, and comments. However, this base share would be adjusted based on the accuracy of the content, verified by a third-party fact-checking organization. For example, if a post is verified as accurate, the creator might receive a bonus to their base revenue share, while inaccurate content could result in a reduction or even forfeiture of revenue.
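The adjustment described above can be illustrated with a minimal Python sketch. Everything in it is a hypothetical assumption made for illustration: the per-interaction rate, the three verdict labels, the multiplier values, and the names `Post`, `base_share`, and `adjusted_share`. None of it reflects how X actually calculates payouts.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    """Hypothetical outcome of a third-party fact-check."""
    ACCURATE = "accurate"
    UNVERIFIED = "unverified"
    INACCURATE = "inaccurate"


# Assumed values, chosen only to make the example concrete.
RATE_PER_INTERACTION = 0.01  # currency units earned per like, share, or comment
MULTIPLIER = {
    Verdict.ACCURATE: 1.2,    # bonus for content verified as accurate
    Verdict.UNVERIFIED: 1.0,  # no adjustment while unchecked
    Verdict.INACCURATE: 0.0,  # full forfeiture for inaccurate content
}


@dataclass
class Post:
    likes: int
    shares: int
    comments: int
    verdict: Verdict = Verdict.UNVERIFIED


def base_share(post: Post) -> float:
    """Base revenue share derived from user interactions alone."""
    interactions = post.likes + post.shares + post.comments
    return interactions * RATE_PER_INTERACTION


def adjusted_share(post: Post) -> float:
    """Base share scaled by the fact-check verdict."""
    return base_share(post) * MULTIPLIER[post.verdict]


if __name__ == "__main__":
    verified = Post(likes=100, shares=50, comments=50, verdict=Verdict.ACCURATE)
    flagged = Post(likes=100, shares=50, comments=50, verdict=Verdict.INACCURATE)
    print(adjusted_share(verified))
    print(adjusted_share(flagged))
```

In this sketch, two posts with identical engagement earn different amounts purely because of the fact-check verdict. A partial reduction rather than full forfeiture could be modeled by setting the inaccurate multiplier to a value between 0 and 1.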

Public Perception and Trust

Musk’s policy could significantly impact public perception of the platform and its users, potentially fostering a more skeptical environment regarding information shared on the platform. This shift in perception could influence user behavior and engagement, as individuals may become more cautious about interacting with the platform or sharing their own content.

Impact on Public Perception

The policy could lead to a decline in public trust in the platform, particularly among those who rely on it for news and information. This is because the policy might be perceived as an attempt to silence dissenting voices or suppress information that is inconvenient to certain interests. Additionally, the policy could be seen as a step towards censorship, raising concerns about the platform’s commitment to free speech and open discourse.

Musk’s policy on misinformation and revenue share is a significant development that could reshape the social media landscape. It highlights the ongoing struggle to balance freedom of expression with the need to combat harmful content. The success of this policy will depend on its implementation, the clarity of its definition of misinformation, and the public’s acceptance of its consequences. The debate over this policy is likely to continue, raising crucial questions about the role of social media platforms in shaping the flow of information and the future of content creation in the digital age.

Musk’s crackdown on misinformation might be a good thing for the platform, but it also raises questions about the responsibility of tech giants to verify user information. This is especially relevant when considering the potential for harm with VR experiences, like those offered by Meta Quest. With the implementation of Meta Quest age verification, Meta is taking steps to protect younger users, but it’s unclear how this will be enforced and whether it will be enough to prevent harmful content from reaching vulnerable audiences.

The same goes for misinformation: how can we ensure that content on platforms like X is verified and accurate, especially when it comes to sensitive topics?

Standi Techno News
