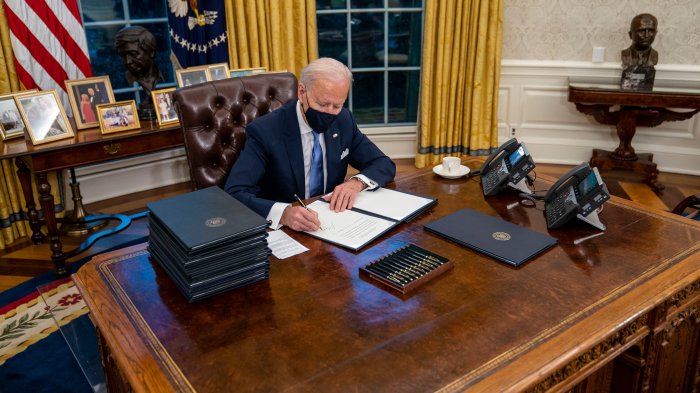

President Biden’s executive order on AI safety and security standards sets the stage for a new era in artificial intelligence. The order, issued in response to growing concerns about the potential risks posed by AI, aims to ensure that this powerful technology is developed and deployed responsibly. It’s not just about robots taking over the world, but about making sure AI benefits everyone, not just a select few.

The executive order focuses on key areas like data privacy, algorithmic bias, and transparency. It calls for the development of standards and guidelines for AI systems, which will be enforced by government agencies across various sectors. Think of it as a set of rules for the AI playground, ensuring fair play and safety for everyone involved.

Executive Order Context

President Biden’s executive order on AI safety and security reflects a growing awareness of the potential risks and benefits of artificial intelligence. The order acknowledges the transformative power of AI while emphasizing the need for responsible development and deployment to ensure its benefits are realized while mitigating potential harms.

The order was prompted by concerns that AI could be used for malicious purposes, such as the development of autonomous weapons systems or the spread of misinformation. It also addresses concerns that AI could exacerbate existing societal inequalities, for example through algorithmic bias and job displacement.

Goals and Objectives of the Executive Order

The executive order sets forth a comprehensive framework for responsible AI development and deployment. It outlines several key goals and objectives, including:

- Promoting AI Innovation: The order encourages the development and deployment of AI technologies that benefit society, while ensuring safety and security. It emphasizes the importance of fostering a robust and competitive AI ecosystem.

- Ensuring AI Safety and Security: The order calls for the development and implementation of standards and guidelines for AI safety and security. It emphasizes the need to mitigate potential risks associated with AI, such as algorithmic bias, data privacy violations, and the potential for misuse.

- Promoting AI Fairness and Equity: The order addresses the potential for AI to exacerbate existing societal inequalities. It calls for the development and deployment of AI systems that are fair, equitable, and accessible to all.

- Strengthening AI Research and Development: The order encourages research and development in areas related to AI safety, security, and ethics. It also emphasizes the need to build a strong and diverse AI workforce.

- Enhancing International Cooperation: The order recognizes the importance of international cooperation in addressing the challenges and opportunities presented by AI. It calls for collaboration with other countries to develop global standards and best practices.

AI Safety and Security Standards

President Biden’s executive order lays the groundwork for a comprehensive approach to AI safety and security, recognizing the transformative potential of AI while addressing the risks associated with its development and deployment. The order sets forth a series of standards and guidelines that aim to foster responsible AI innovation and ensure its benefits are broadly shared while mitigating potential harms.

Data Privacy and Security

Data privacy and security are fundamental to ensuring responsible AI development and use. The executive order emphasizes the importance of protecting sensitive data from unauthorized access, use, or disclosure. This includes:

- Requiring federal agencies to implement robust data privacy and security practices when developing or using AI systems.

- Promoting the development of data privacy standards and best practices for the private sector.

- Encouraging research and development of privacy-enhancing technologies, such as differential privacy and homomorphic encryption, to enable data analysis without compromising individual privacy.

These measures aim to build public trust in AI by ensuring that personal data is handled responsibly and securely, preventing misuse and protecting individual rights.
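To make the idea of a privacy-enhancing technology concrete, here is a minimal sketch of differential privacy using the Laplace mechanism on a simple counting query. It is an illustration only, not something the executive order prescribes: the function names `laplace_noise` and `private_count`, the sample records, and the choice of `epsilon` are all assumptions made for this example.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential samples with mean `scale`
    follows a Laplace distribution centered at 0 with that scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many people in a small dataset are 65 or older?
people = [{"age": a} for a in (25, 40, 67, 71, 80)]
noisy = private_count(people, lambda p: p["age"] >= 65, epsilon=0.5, rng=random.Random())
print(f"Noisy count of people aged 65+: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives a more accurate answer. The analyst sees only the noisy count, so the presence or absence of any single person is hard to infer from the result.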

Algorithmic Bias and Fairness

Algorithmic bias, where AI systems exhibit unfair or discriminatory outcomes, can perpetuate existing societal inequalities and undermine public trust. The executive order addresses this challenge by:

- Directing federal agencies to assess and mitigate bias in their AI systems.

- Promoting the development of tools and techniques for identifying and mitigating algorithmic bias.

- Encouraging the use of diverse datasets and inclusive design principles to ensure AI systems are fair and equitable.

By addressing algorithmic bias, the executive order aims to ensure that AI systems are used in a way that benefits all Americans, regardless of their background or identity.
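One simple way to picture what "assessing bias" can mean in practice is to compare how often a system produces a favorable outcome for different groups. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. It is only one of many possible fairness metrics, and the function names, group labels, and data are hypothetical, not drawn from the executive order.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Return the fraction of favorable outcomes (1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(outcomes, groups):
    """Return the gap between the highest and lowest group selection rates.

    0.0 means every group receives favorable outcomes at the same rate;
    larger values indicate a bigger disparity.
    """
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: 1 = approved, 0 = denied.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
applicant_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(decisions, applicant_groups))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(decisions, applicant_groups))  # 0.5
```

A large gap does not prove discrimination on its own, but it flags where an agency or developer would want to look more closely at the data and the model.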

Transparency and Explainability

Transparency and explainability are crucial for understanding how AI systems work and building trust in their decision-making. The executive order encourages:

- The development of AI systems that are transparent and explainable, allowing users to understand the rationale behind their outputs.

- The creation of mechanisms for auditing and evaluating AI systems to ensure they are operating as intended.

- The development of guidelines for responsible disclosure of information about AI systems, including their capabilities, limitations, and potential risks.

These measures aim to increase accountability and transparency in AI development and deployment, fostering public understanding and trust in AI systems.

Safety and Security

The executive order recognizes the importance of ensuring the safety and security of AI systems, particularly in critical infrastructure and national security contexts. This includes:

- Developing guidelines for the safe and secure development and deployment of AI systems, including standards for testing, validation, and certification.

- Encouraging research and development of techniques for detecting and mitigating vulnerabilities in AI systems.

- Promoting collaboration between government, industry, and academia to address AI safety and security challenges.

These measures aim to ensure that AI systems are robust, resilient, and secure, mitigating the risk of malicious use or unintended consequences.

Examples of Implementation

The executive order’s standards and guidelines will be implemented across different sectors, including:

- Healthcare: AI systems used in healthcare will be subject to rigorous safety and security standards to ensure patient data is protected and AI-powered diagnoses are accurate and reliable.

- Transportation: AI systems used in autonomous vehicles will be required to meet high standards of safety and security to ensure the safe operation of self-driving cars and trucks.

- Finance: AI systems used in financial services will be subject to regulations that address algorithmic bias and ensure fair and transparent lending practices.

- Education: AI systems used in education will be designed to promote equitable access to learning opportunities and prevent algorithmic bias in student assessments.

These examples illustrate how the executive order’s standards and guidelines will be applied across different sectors, fostering responsible AI innovation and ensuring its benefits are broadly shared.

Government Agencies and Roles

The executive order designates specific government agencies as responsible for overseeing the development and implementation of AI safety and security standards. These agencies will play crucial roles in ensuring responsible and ethical AI development and deployment across the country.

Each agency brings its unique expertise and resources to the table, contributing to a comprehensive and collaborative approach to AI governance.

Agency Responsibilities

The following agencies will play key roles in implementing the executive order:

- The National Institute of Standards and Technology (NIST): NIST will be responsible for developing and disseminating voluntary AI risk management frameworks and standards. These frameworks will provide guidance to organizations on best practices for developing, deploying, and managing AI systems in a safe and responsible manner. NIST will also conduct research and analysis to identify emerging AI risks and develop appropriate mitigation strategies.

- The Office of Science and Technology Policy (OSTP): OSTP will coordinate the development and implementation of the executive order across the federal government. This includes ensuring that all relevant agencies are working together effectively and that the standards are consistent with broader national AI policy goals. OSTP will also be responsible for promoting public education and awareness about AI safety and security.

- The Department of Commerce: The Department of Commerce will work with NIST to develop and disseminate the AI risk management frameworks and standards. The department will also play a role in promoting the adoption of these standards by businesses and other organizations.

- The Department of Homeland Security (DHS): DHS will focus on the security of AI systems, particularly in critical infrastructure sectors. The department will work to identify and mitigate potential vulnerabilities in AI systems that could be exploited by malicious actors. DHS will also collaborate with other agencies to develop and implement cybersecurity standards for AI.

- The Department of Defense (DoD): The DoD will be responsible for ensuring the safety and security of AI systems used in defense applications. The department will also conduct research and development to advance AI technologies while mitigating potential risks. The DoD will work with other agencies to develop and implement standards for the responsible use of AI in military applications.

Collaboration and Enforcement

To ensure effective enforcement of the standards, these agencies will work together to:

- Coordinate efforts: Regular communication and coordination between agencies will be crucial for developing consistent and effective standards. This will involve sharing information, best practices, and research findings.

- Develop joint guidance: Agencies will collaborate to develop guidance for organizations on how to comply with the AI safety and security standards. This guidance will provide clear and actionable steps that organizations can take to ensure the responsible development and deployment of AI systems.

- Monitor and evaluate: Agencies will monitor the implementation of the standards and evaluate their effectiveness. This will involve collecting data on AI systems, identifying potential risks, and assessing the impact of the standards on AI development and deployment.

- Enforce compliance: Agencies will have the authority to enforce compliance with the AI safety and security standards. This may involve issuing guidance, conducting investigations, and taking enforcement actions against organizations that violate the standards.

Impact on AI Industry

President Biden’s executive order on AI safety and security is poised to significantly impact the AI industry, prompting both challenges and opportunities for businesses. The order sets standards for AI development and deployment, aiming to ensure responsible and ethical use of this transformative technology.

Impact on AI Research, Development, and Deployment

The executive order will undoubtedly influence AI research, development, and deployment. Here are some potential implications:

- Increased Focus on AI Safety and Security: Businesses will need to prioritize safety and security in their AI systems, incorporating robust testing, risk assessment, and mitigation strategies. This shift will require investment in specialized expertise and resources.

- Compliance with Standards: The executive order introduces new requirements for AI systems, such as transparency, accountability, and fairness. Businesses will need to adapt their processes and technologies to meet these standards.

- Enhanced Data Privacy and Security: The order emphasizes data privacy and security, which will impact how AI systems collect, store, and use data. Businesses will need to implement rigorous data protection measures and ensure compliance with relevant regulations.

- Collaboration and Transparency: The executive order promotes collaboration and transparency in AI development. Businesses will need to be more open about their AI projects and work together to address shared challenges.

- Government Oversight and Regulation: The executive order establishes a framework for government oversight and regulation of AI. This could lead to increased scrutiny of AI systems and potential limitations on certain applications.

International Cooperation

The executive order recognizes that AI safety and security are global concerns, necessitating collaboration among nations to establish effective safeguards. International cooperation is crucial to address the challenges posed by AI, ensuring its development and deployment benefit all humanity while mitigating potential risks.

The executive order promotes international collaboration in several ways. It encourages the US government to engage with other countries in dialogues, joint research initiatives, and the development of shared principles and best practices for AI governance. It also emphasizes the importance of working with international organizations like the United Nations and the Organisation for Economic Co-operation and Development (OECD) to foster global cooperation on AI.

Potential Challenges and Opportunities for Global AI Governance

Global AI governance presents both challenges and opportunities. The main challenges include:

- Different national priorities and values: Nations may have varying perspectives on AI regulation, driven by their unique social, economic, and political contexts. This can lead to conflicting approaches and make it challenging to reach global consensus on AI governance.

- Data sovereignty and privacy concerns: The cross-border flow of data is essential for AI development and deployment, but it raises concerns about data privacy and national security. Balancing the need for data access with data protection is a complex issue that requires careful consideration and international collaboration.

- Unequal access to AI resources: The uneven distribution of AI expertise and resources among nations can exacerbate existing inequalities. Ensuring equitable access to AI technologies and benefits is crucial for promoting inclusive growth and development.

- Coordination and enforcement: Implementing global AI governance requires effective coordination and enforcement mechanisms. This includes establishing clear standards, developing effective monitoring systems, and ensuring compliance with agreed-upon rules.

Despite these challenges, international cooperation on AI presents significant opportunities.

- Sharing best practices and lessons learned: Collaboration allows nations to learn from each other’s experiences, share best practices, and avoid repeating mistakes. This can accelerate the development of effective AI governance frameworks.

- Building trust and confidence: International cooperation can foster trust and confidence in AI systems, promoting wider adoption and innovation. This is particularly important for areas like healthcare, transportation, and finance, where AI applications have the potential to significantly benefit society.

- Promoting global stability and security: Addressing the potential risks of AI, such as misuse for malicious purposes or the development of autonomous weapons systems, requires a collective effort. International cooperation is essential for ensuring that AI technologies are used responsibly and for the benefit of all.

Public Perception and Awareness

Public perception of AI is complex and multifaceted, ranging from excitement about its potential benefits to anxieties about its potential risks. The executive order aims to address these concerns by establishing safety and security standards, which could influence public perception in various ways.

The executive order’s emphasis on AI safety and security is likely to increase public awareness of these issues. This heightened awareness could lead to greater public trust in AI, as people feel more confident that their concerns are being addressed. Conversely, if the implementation of the order is perceived as inadequate or ineffective, it could further fuel public anxieties about AI.

Public Awareness and Education

Promoting public understanding and engagement with AI issues is crucial for fostering a responsible and beneficial development of AI. Public awareness campaigns can play a vital role in educating the public about AI’s capabilities, limitations, and potential impacts.

- Educational initiatives could include workshops, online courses, and public lectures on AI topics, covering its ethical, social, and economic implications.

- Public forums and debates could facilitate open discussions and dialogue between experts, policymakers, and the public on AI-related issues.

- Engaging with the media to ensure accurate and balanced reporting on AI developments is essential to combat misinformation and foster informed public discourse.

Strategies for Promoting Public Understanding

Engaging the public in a meaningful way requires strategies that go beyond traditional information dissemination.

- Interactive exhibits and demonstrations can help people experience AI firsthand and understand its capabilities and limitations.

- Encouraging public participation in AI research projects, through citizen science initiatives, can foster a sense of ownership and responsibility.

- Supporting the development of AI-related educational resources for schools and universities can help equip future generations with the skills and knowledge needed to navigate the AI landscape.

Future of AI Regulation

President Biden’s executive order marks a significant step toward establishing a framework for responsible AI development and deployment. This order serves as a foundation for future AI regulations, setting the stage for a more robust and comprehensive approach to managing the potential risks and harnessing the benefits of this transformative technology.

Long-Term Implications of the Executive Order

The executive order’s long-term implications for AI regulation are multifaceted and far-reaching. It signals a commitment to proactive governance, aiming to prevent potential harms associated with AI while fostering innovation and economic growth. This commitment is expected to drive further developments in AI safety and security standards, shaping the future of AI governance.

Potential Future Developments in AI Safety and Security Standards

The executive order lays the groundwork for the development of more comprehensive and nuanced AI safety and security standards. These standards will likely evolve over time, incorporating emerging technologies, best practices, and lessons learned from real-world applications. Key areas of development include:

- Algorithmic Transparency and Explainability: Future standards may require developers to provide clear explanations of how AI algorithms work, enabling users to understand the reasoning behind decisions and identify potential biases.

- Data Privacy and Security: Regulations may focus on safeguarding sensitive data used to train AI systems, ensuring its responsible use and preventing unauthorized access or misuse.

- Robustness and Reliability: Standards may address the resilience of AI systems against adversarial attacks and ensure their accuracy and reliability in diverse environments.

- Human Oversight and Control: Future regulations might mandate mechanisms for human oversight and control over AI systems, ensuring that humans retain the ability to intervene and correct errors.

- Ethical Considerations: Standards may address ethical implications of AI, such as bias, fairness, and discrimination, promoting responsible development and deployment of AI systems.

Evolving Landscape of AI Governance

The executive order is part of a broader global movement towards responsible AI governance. International collaboration and the sharing of best practices will play a crucial role in shaping the future of AI regulation. Key aspects of this evolving landscape include:

- International Cooperation: Governments and organizations worldwide are increasingly collaborating to develop common standards and guidelines for AI development and deployment. Examples include the OECD’s AI Principles and the EU’s AI Act.

- Industry Self-Regulation: AI companies are increasingly taking steps to develop and implement ethical guidelines and best practices for AI development. This includes initiatives such as the Partnership on AI and the AI for Good Foundation.

- Public Engagement: Public awareness and engagement are essential for shaping responsible AI governance. Public consultations, educational programs, and open discussions will play a vital role in ensuring that AI development aligns with societal values and priorities.

This executive order marks a significant step towards ensuring that AI development and deployment are guided by ethical considerations and safety principles. While it’s not a magic bullet, it lays the groundwork for a future where AI is used for good, benefiting society as a whole. It’s a reminder that we need to be proactive in shaping the future of AI, ensuring that it serves humanity, not the other way around.


Standi Techno News
