Why RAG Won’t Solve Generative AI’s Hallucination Problem

Generative AI, with its ability to create realistic and compelling content, has captivated the world. But these powerful tools aren’t without their flaws. One persistent issue is the phenomenon of AI “hallucinations,” where models confidently generate inaccurate or nonsensical information. While Retrieval-Augmented Generation (RAG) holds promise for enhancing AI accuracy, it’s not a silver bullet for this pervasive problem.

AI hallucinations differ from human errors. They stem from the model’s inherent limitations in understanding and processing information. RAG, which relies on retrieving relevant information from external sources, can improve factual accuracy in some cases. However, its effectiveness is limited by the quality and availability of the data it accesses, and it can’t always prevent the model from generating illogical or nonsensical outputs.

The Nature of Generative AI Hallucinations

Generative AI models, while capable of producing impressive outputs, sometimes exhibit a peculiar phenomenon known as “hallucinations.” These hallucinations are not the same as human errors, which stem from cognitive biases or lack of information. Instead, they are specific to AI systems and arise from the way these models are trained and interact with data: a language model learns to produce statistically plausible continuations of text, not to verify whether those continuations are true.

Generative AI hallucinations are characterized by inconsistencies, factual inaccuracies, and illogical reasoning. These errors are often subtle, but they can have significant consequences, especially when AI models are used in sensitive domains like healthcare or finance.

Examples of AI-Generated Hallucinations

Understanding the specific types of hallucinations is crucial for mitigating their impact. Here are some common examples:

- Fabricating Information: AI models may generate text that contains entirely fabricated information, presenting it as factual. For instance, a model might generate a biography of a fictional person or invent events that never occurred.

- Inconsistent Statements: Within the same output, AI models can make contradictory statements. This can manifest as conflicting facts, opinions, or even conflicting descriptions of the same entity.

- Logical Fallacies: AI models may exhibit illogical reasoning, leading to conclusions that do not follow from the provided information. This can include drawing incorrect inferences or making assumptions that are not supported by evidence.

Limitations of RAG in Addressing Hallucinations

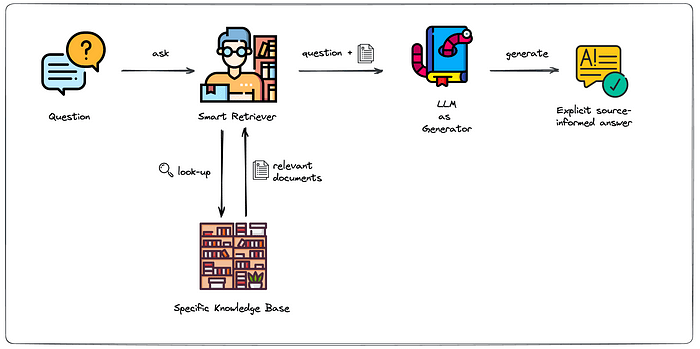

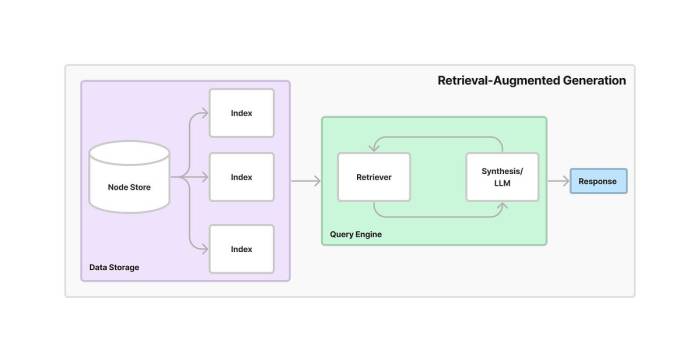

Retrieval-Augmented Generation (RAG) is a powerful technique that combines the strengths of information retrieval and language generation. It works by first retrieving relevant information from a knowledge base, such as a database or a set of documents, and then using that information to generate a response. RAG holds considerable potential for improving AI output, particularly in fact-heavy tasks such as question answering and summarization. However, despite its advantages, RAG has inherent limitations in addressing the problem of hallucinations in generative AI.
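To make the two stages concrete, here is a minimal sketch in Python. It assumes a toy in-memory knowledge base and a simple bag-of-words cosine similarity in place of the embedding models and vector databases that real systems typically use; the document list and function names are hypothetical, and the call to an actual language model is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real system would query a document store.
KNOWLEDGE_BASE = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Mount Everest is the highest mountain above sea level.",
    "The Great Barrier Reef lies off the coast of Queensland, Australia.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Stage 1: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine_similarity(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, passages):
    """Stage 2: ground the generator by placing the retrieved passages in its prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "When was the Eiffel Tower completed?"
    passages = retrieve(question, KNOWLEDGE_BASE, k=1)
    # The resulting prompt would be sent to a language model (not shown here).
    print(build_prompt(question, passages))
```

Note that the generator only sees whatever the retriever hands it, and nothing in this pipeline checks that the final answer actually follows from the context.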

RAG’s effectiveness in preventing hallucinations is limited by several factors:

- Bias in Retrieved Information: RAG relies on the quality and completeness of the knowledge base it accesses. If the knowledge base contains biased or incomplete information, the generated response will reflect those biases, leading to inaccurate or hallucinated content.

- Inaccurate Retrieval: RAG’s ability to prevent hallucinations hinges on its ability to retrieve relevant and accurate information. If the retrieval system fails to identify the correct information or retrieves irrelevant information, the generated response may be inaccurate or contain hallucinations.

- Lack of Contextual Understanding: RAG typically retrieves information piecemeal, as separate text chunks scored individually against the query, so the overall context of the question can be lost. This can lead to misinterpretations and inaccurate responses, especially when dealing with complex or nuanced questions.

- Limited Reasoning Abilities: RAG adds a retrieval step, not a reasoning one. The underlying model still has to interpret the retrieved passages, understand complex relationships between facts, and draw logical inferences, all of which are crucial for preventing hallucinations, and it can get these wrong even when the correct passages are in its context.
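The retrieval failure described above is easy to reproduce in a toy setting. The sketch below redefines the same kind of hypothetical bag-of-words retriever (so the example stands alone) and asks a question the knowledge base cannot answer. Plain top-k retrieval still returns its nearest match, here a passage about the Great Barrier Reef, which a generator could then weave into a confident but wrong answer. The `min_score` cutoff of 0.3 is an arbitrary illustrative value, not a recommended setting.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base: it says nothing about the Sydney Opera House.
KNOWLEDGE_BASE = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Mount Everest is the highest mountain above sea level.",
    "The Great Barrier Reef lies off the coast of Queensland, Australia.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_with_scores(query, docs, k=1):
    """Plain top-k retrieval: always returns k results, however weak the match."""
    q = Counter(tokenize(query))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def retrieve_guarded(query, docs, k=1, min_score=0.3):
    """Top-k retrieval that drops passages scoring below min_score."""
    return [(s, d) for s, d in retrieve_with_scores(query, docs, k) if s >= min_score]


question = "Who designed the Sydney Opera House?"
# Top-1 is the Great Barrier Reef passage, matched only on the word "the".
print(retrieve_with_scores(question, KNOWLEDGE_BASE))
# With the cutoff applied, nothing is returned.
print(retrieve_guarded(question, KNOWLEDGE_BASE))
```

A threshold like this lets the system answer "I don't know" when nothing relevant is found, but it does not help when a retrieved passage scores well and is itself wrong, biased, or outdated.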

Examples of RAG’s Ineffectiveness

RAG may not be effective in preventing or mitigating hallucinations in situations where:

- The query requires reasoning or inference: For example, if a user asks, “What is the best way to prevent climate change?” RAG might retrieve information about different climate change mitigation strategies but might not be able to reason about their effectiveness or prioritize them based on scientific evidence.

- The knowledge base is incomplete or biased: If the knowledge base lacks information on a specific topic or contains biased information, RAG’s response may be inaccurate or reflect those biases. For instance, if a user asks about the history of a particular country, RAG might provide a biased or incomplete account if the knowledge base is lacking or skewed.

- The query is ambiguous or open-ended: RAG may struggle with ambiguous or open-ended queries that require a deeper understanding of context or intent. For example, if a user asks, “What is the meaning of life?” RAG might retrieve various philosophical perspectives but may not be able to synthesize them into a coherent and meaningful response.

Future Directions for Addressing Hallucinations

While RAG offers a promising approach to mitigating hallucinations, it’s not a silver bullet. Addressing the issue of hallucinations in generative AI requires a multi-faceted approach, encompassing advancements in both model architecture and training methodologies.

Research Directions for Mitigating Hallucinations

Developing more robust and reliable AI models that minimize hallucinations necessitates exploring various research avenues. These directions aim to improve the models’ ability to distinguish between real and fabricated information, enhance their reasoning capabilities, and provide mechanisms for detecting and correcting hallucinations.

- Improved Training Data and Techniques: One key area of focus is enhancing the quality and diversity of training data. This involves incorporating more diverse and reliable information sources, including factual datasets, knowledge graphs, and curated content. Furthermore, research into novel training techniques, such as incorporating reinforcement learning or adversarial training, can help models learn to distinguish between real and fabricated information.

- Enhanced Reasoning and Knowledge Representation: Integrating reasoning capabilities into generative AI models is crucial. This involves developing models that can understand and reason about the relationships between different pieces of information, enabling them to identify inconsistencies and contradictions. This can be achieved by incorporating techniques like graph neural networks or symbolic reasoning into model architectures.
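As a much simpler stand-in for the symbolic reasoning mentioned above (this is a rule-based check, not a graph neural network), the sketch below looks for internal contradictions in a set of claims represented as subject-relation-object triples. It assumes the claims have already been extracted from the model's output, a step not shown, and it treats a hypothetical set of relations as functional, meaning each subject may have only one value for them.

```python
from collections import defaultdict

# Hypothetical relations that should have exactly one value per subject.
FUNCTIONAL_RELATIONS = {"born_in_year", "died_in_year"}


def find_contradictions(triples):
    """Return {(subject, relation): values} for functional relations given conflicting values."""
    values = defaultdict(set)
    for subject, relation, obj in triples:
        if relation in FUNCTIONAL_RELATIONS:
            values[(subject, relation)].add(obj)
    return {key: objs for key, objs in values.items() if len(objs) > 1}


# Claims as they might be extracted from a single generated answer (illustrative data).
claims = [
    ("Ada Lovelace", "born_in_year", "1815"),
    ("Ada Lovelace", "occupation", "mathematician"),
    ("Ada Lovelace", "born_in_year", "1816"),
]

# Flags the two conflicting birth years; "occupation" is not checked.
print(find_contradictions(claims))
```

A rule like this catches only contradictions it was told to look for; it cannot tell which of the two years is correct without an external source.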

- Hallucination Detection and Correction: Developing mechanisms for detecting and correcting hallucinations is essential. This could involve incorporating techniques like anomaly detection, probabilistic modeling, or external verification mechanisms. Models could be trained to identify potential hallucinations based on their statistical properties or by comparing their outputs with external knowledge bases.
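One widely discussed way to flag hallucinations from the statistical properties of a model's output is a self-consistency check: ask the model the same question several times with sampling enabled and measure how often the answers agree. The sketch below assumes the sampled answers have already been collected (the model calls are not shown); the normalization rules and the 0.6 threshold are illustrative assumptions.

```python
from collections import Counter


def normalize(answer):
    """Lowercase, trim surrounding whitespace and a trailing period, collapse inner spaces."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def agreement_score(samples):
    """Fraction of sampled answers that match the most common normalized answer."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(s) for s in samples)
    _, top_count = counts.most_common(1)[0]
    return top_count / len(samples)


def flag_possible_hallucination(samples, threshold=0.6):
    """Flag the answer as suspect when the samples agree less often than the threshold."""
    return agreement_score(samples) < threshold


consistent = ["Paris.", "paris", "Paris"]
inconsistent = ["1887", "1889", "1891", "1889", "1885"]
print(agreement_score(consistent), flag_possible_hallucination(consistent))      # 1.0 False
print(agreement_score(inconsistent), flag_possible_hallucination(inconsistent))  # 0.4 True
```

Low agreement is a warning sign rather than proof of a hallucination, and high agreement does not guarantee correctness, since a model can be consistently wrong.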

Ethical and Societal Implications of Hallucinations

The presence of hallucinations in generative AI raises ethical and societal concerns. As these models become more sophisticated and integrated into various applications, the potential for misinformation and harm becomes increasingly significant.

- Misinformation and Bias: Hallucinations can contribute to the spread of misinformation and biased information. Generative AI models trained on biased data may generate outputs that perpetuate existing prejudices or stereotypes.

- Trust and Reliability: The presence of hallucinations can erode trust in AI systems. If users cannot rely on the outputs of generative AI models to be accurate and reliable, it can undermine their acceptance and adoption.

- Ethical Considerations: The potential for AI-generated content to be used for malicious purposes, such as creating fake news or deepfakes, raises ethical concerns. It’s crucial to develop ethical guidelines and safeguards to mitigate these risks.

The quest for more reliable and trustworthy AI models is an ongoing journey. While RAG can be a valuable tool, it’s not a definitive solution for eliminating hallucinations. Addressing this challenge requires a multifaceted approach, including exploring alternative methods like fine-tuning models, incorporating external knowledge sources, and developing new evaluation metrics. As we continue to advance AI, understanding the limitations of current approaches and exploring innovative solutions will be crucial for ensuring the responsible and ethical development of these powerful technologies.

While RAG can help reduce hallucinations in generative AI models by grounding them in real-world information, it’s not a silver bullet. The problem lies in the inherent uncertainty of language and the difficulty of accurately representing complex concepts. But hey, if you’re looking for a space to discuss these challenges with like-minded folks, check out Newsmast, a curated community platform built on Mastodon.

It’s a great place to connect with others interested in AI and explore the nuances of its development, including the ongoing battle against hallucinations.

Standi Techno News