Writers’ latest models can generate text from images including charts and graphs

Writers’ latest models can generate text from images, including charts and graphs, setting the stage for a revolution in content creation and data analysis. Imagine a world where complex data visualizations instantly transform into insightful narratives, or where a photograph can spark a detailed description in seconds. This is the reality emerging with advanced AI models that bridge the gap between visual and textual information.

These models, trained on massive datasets of images and text, can decipher the intricacies of visual content, recognizing objects, understanding scenes, and extracting meaningful data. They then translate this visual information into coherent and informative text, offering a powerful tool for summarizing, describing, and even narrating what’s captured in an image.

The Rise of Visual Text Generation

The realm of text generation has undergone a dramatic transformation, moving beyond traditional methods to embrace the power of visual information. This shift marks a pivotal moment in the evolution of artificial intelligence, paving the way for a future where machines can understand and interpret the world through images.

The emergence of image-based text generation models has bridged the gap between visual and textual information, unlocking a new era of AI capabilities. These models can analyze images and extract meaningful insights, enabling them to generate text that accurately describes the visual content.

The Evolution of Text Generation

The ability of machines to generate text has been a long-standing goal in the field of artificial intelligence. Early text generation models relied on rule-based systems and statistical methods. These approaches were limited in their ability to generate coherent and meaningful text, often producing outputs that lacked nuance and creativity.

The advent of deep learning revolutionized text generation, leading to the development of powerful neural network models. These models, trained on vast amounts of text data, learned to generate human-quality text, exhibiting remarkable fluency and coherence. However, these models primarily focused on text-based input, neglecting the wealth of information available in the visual domain.

The latest text generation models incorporate image analysis, enabling them to understand and interpret visual content. They use computer vision techniques to extract features from images, such as objects, scenes, and the relationships between them. This visual understanding lets them generate text that accurately describes the image content.

Capabilities of Traditional and Image-Based Text Generation Models

| Feature | Traditional Text Generation Models | Image-Based Text Generation Models |
|---|---|---|
| Input | Text | Images |
| Output | Text | Text |
| Understanding of Visual Content | Limited | High |
| Capabilities | Generate text based on textual prompts, translate languages, summarize text, and create different writing styles. | Generate text that describes the content of images, including objects, scenes, and relationships. Can also be used for image captioning, visual question answering, and image-based storytelling. |

Examples of Image-Based Text Generation

Image-based text generation models have already demonstrated their potential in various applications, including:

- Image captioning: Generating descriptive captions for images, enhancing accessibility for visually impaired individuals and enriching the user experience on social media platforms.

- Visual question answering: Answering questions about images, enabling users to interact with visual information in a more natural and intuitive way.

- Image-based storytelling: Creating compelling narratives based on image sequences, providing a new avenue for creative expression and engaging storytelling.

Impact of Image-Based Text Generation

The rise of image-based text generation models has profound implications for various industries, including:

- Content creation: Automating the generation of text descriptions for images, reducing the workload for content creators and enabling the creation of more engaging and informative content.

- Customer service: Providing more accurate and efficient responses to customer inquiries based on images, enhancing customer satisfaction and streamlining support processes.

- Education: Creating interactive learning experiences that leverage visual information, making learning more engaging and accessible for students of all ages.

How Latest Models Analyze Images

These models, built on the foundation of deep learning, possess the ability to “see” and understand images in ways that were previously unimaginable. This “vision” is not simply about recognizing objects; it’s about deciphering the context, extracting meaningful information, and translating it into text.

Image Interpretation Mechanisms

The ability of these models to analyze images stems from a sophisticated interplay of various mechanisms.

- Object Recognition: These models are trained on vast datasets of images, learning to identify and categorize objects based on their visual features. This includes recognizing common objects like cars, trees, and animals, as well as more nuanced distinctions within categories, like different breeds of dogs or types of flowers.

- Scene Understanding: Beyond individual objects, these models can grasp the overall context of an image. They can discern the scene, understand the relationships between objects, and even infer the actions taking place. For instance, they can recognize a scene as a “kitchen” based on the presence of a stove, refrigerator, and cabinets, or identify a “street scene” by recognizing cars, pedestrians, and buildings.

- Data Extraction: These models can extract specific data from images, including text, numbers, and other visual information. This allows them to analyze charts and graphs, extract information from diagrams, and even interpret handwritten notes.
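
As a rough illustration of the scene-understanding idea above, the sketch below guesses a scene from a list of object labels. It is a toy, not how the models described here work internally: real systems learn these associations from data rather than from a hand-written table. The `SCENE_CUES` mapping and the `infer_scene` function are hypothetical names introduced only for this example, and the object labels are assumed to come from some earlier object recognition step.

```python
# Hypothetical lookup table: scene name -> objects that usually appear in it.
# The two entries mirror the "kitchen" and "street scene" examples in the text.
SCENE_CUES = {
    "kitchen": {"stove", "refrigerator", "cabinets"},
    "street scene": {"cars", "pedestrians", "buildings"},
}


def infer_scene(detected_objects):
    """Return the scene whose cue objects overlap most with the detections.

    Returns None when no cue object was detected at all.
    """
    detected = set(detected_objects)
    best_scene, best_overlap = None, 0
    for scene, cues in SCENE_CUES.items():
        overlap = len(detected & cues)
        if overlap > best_overlap:
            best_scene, best_overlap = scene, overlap
    return best_scene


if __name__ == "__main__":
    print(infer_scene(["stove", "refrigerator", "cabinets", "kettle"]))
    print(infer_scene(["cars", "pedestrians"]))
```

A learned model replaces the fixed table with patterns picked up from training images, which is what lets it handle scenes nobody listed in advance.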

Image Types Analyzed

These models are capable of analyzing a wide range of image types, demonstrating their versatility and adaptability.

- Photographs: They can analyze photographs, identifying objects, understanding the scene, and generating descriptions that capture the essence of the image.

- Charts and Graphs: These models can interpret data presented in charts and graphs, extracting key insights and translating them into textual summaries. They can identify trends, patterns, and outliers, providing valuable insights from visual data.

- Diagrams: They can analyze diagrams, understanding the relationships between different elements and generating descriptions that capture the key information conveyed. This includes technical diagrams, flowcharts, and other visual representations of complex processes or concepts.
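
To make the chart-to-text step more concrete, here is a minimal sketch that assumes the numbers have already been read off a chart and only covers the last part: turning a data series into a sentence about its trend and any outliers. The function name `summarize_series`, the `z_threshold` parameter, and the simple standard-deviation rule for outliers are all assumptions made for this example, not a description of any particular model.

```python
from statistics import mean, pstdev


def summarize_series(label, values, z_threshold=2.0):
    """Describe the overall direction of a series and flag unusual values."""
    if len(values) < 2:
        return f"{label}: not enough data points to describe a trend."

    # Compare the first and last points to get a coarse direction.
    change = values[-1] - values[0]
    if change > 0:
        trend = "rose"
    elif change < 0:
        trend = "fell"
    else:
        trend = "was unchanged"
    sentence = f"{label} {trend} from {values[0]} to {values[-1]}."

    # Flag values more than z_threshold standard deviations from the mean.
    avg, spread = mean(values), pstdev(values)
    outliers = [
        v for v in values
        if spread > 0 and abs(v - avg) / spread > z_threshold
    ]
    if outliers:
        sentence += f" Unusual values: {', '.join(str(v) for v in outliers)}."
    return sentence


if __name__ == "__main__":
    print(summarize_series("Sales", [10, 12, 15, 18]))
```

Comparing only the first and last points is deliberately crude. A fuller summary would look at the shape of the whole series, but the sketch shows how extracted numbers can become a readable sentence.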

Translating Visual Information into Text

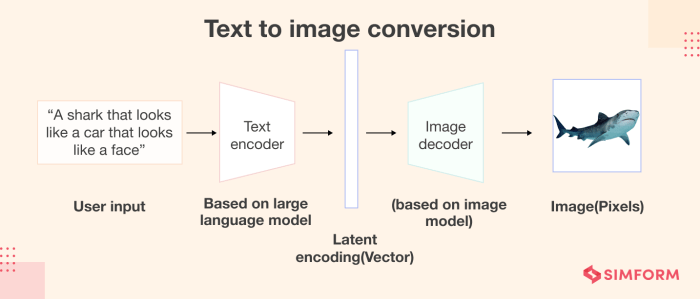

Translating visual information into text is a multi-stage machine learning process:

- Feature Extraction: The model first extracts relevant features from the image, breaking it down into a representation that the algorithm can understand. This involves identifying edges, shapes, colors, and textures.

- Encoding and Decoding: These features are then encoded into a numerical representation, which the model uses to generate a sequence of words that accurately describes the image. This process involves complex mathematical operations and deep neural networks.

- Language Generation: The model uses its understanding of language and grammar to construct coherent and grammatically correct sentences that describe the image. This includes selecting appropriate vocabulary, arranging words in a logical order, and ensuring that the text flows smoothly.
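
The three stages above can be sketched as a toy pipeline. This is only an illustration under strong assumptions: the image is a small grid of grayscale values from 0 to 255, the "features" are just average brightness and a crude edge count, and the language step uses hand-written rules where a real model would use a trained neural decoder. The function names `extract_features`, `encode`, and `decode` are hypothetical.

```python
def extract_features(pixels):
    """Stage 1: compute mean brightness and count sharp horizontal changes."""
    flat = [p for row in pixels for p in row]
    brightness = sum(flat) / len(flat)
    edges = sum(
        1
        for row in pixels
        for left, right in zip(row, row[1:])
        if abs(left - right) > 50  # assumed threshold for a "sharp" change
    )
    return {"brightness": brightness, "edges": edges}


def encode(features):
    """Stage 2: turn the features into a fixed-order numeric vector."""
    return [features["brightness"] / 255.0, float(features["edges"])]


def decode(vector):
    """Stage 3: build a sentence from the vector.

    Hand-written rules stand in for a learned decoder in this sketch.
    """
    brightness, edges = vector
    tone = "bright" if brightness > 0.5 else "dark"
    detail = "sharp edges" if edges > 0 else "no sharp edges"
    return f"a {tone} image with {detail}."


if __name__ == "__main__":
    image = [
        [0, 0, 255],
        [0, 0, 255],
    ]
    print(decode(encode(extract_features(image))))
```

In a real system each stage is learned rather than written by hand, but the flow of data is the same: pixels become features, features become numbers, and numbers become words.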

Applications of Image-Based Text Generation

Image-based text generation allows computers to interpret visual information and turn it into meaningful text. This capability opens the door to applications across many industries and changes how we interact with and analyze visual data.

Content Creation

Image-based text generation empowers content creators to generate descriptions, summaries, and narratives from images, enriching their content and making it more engaging. For example, a travel blogger could use this technology to automatically generate captions for their photos, saving time and effort. Given a picture of a bustling market in Thailand, the model could analyze the image and produce a caption like, “The vibrant colors and aromas of the Thai market are a sensory overload. Fresh produce, exotic spices, and handcrafted goods fill the air with a lively atmosphere.” This technology can also be used to create captions for social media posts, blog articles, and even books.

Data Analysis

Image-based text generation can help analyze large datasets of images, extracting insights that would otherwise be difficult or time-consuming to obtain manually. This is particularly useful in fields like medical imaging, where reviewing scans for abnormalities is a complex process. Imagine a medical professional analyzing a patient’s X-ray. The model could analyze the X-ray and generate a report describing any abnormalities detected. This report could then be used to help the medical professional make a diagnosis.

Accessibility

Image-based text generation can significantly improve accessibility for people with visual impairments. By converting images into text, this technology enables blind and visually impaired individuals to understand and interact with the visual world around them. Imagine a blind person trying to understand a graph depicting the performance of a company. The image-based text generation model could analyze the graph and generate a description of the data, such as “The company’s sales have been steadily increasing over the past year.” This technology can also be used to generate descriptions of images in books, magazines, and websites, making them accessible to visually impaired individuals.

Challenges and Future Directions

The rise of image-based text generation, while promising, faces several challenges that require attention. Understanding these limitations is crucial for the responsible development and application of this technology. Additionally, exploring potential future advancements will guide the evolution of this field, shaping its impact on various industries and aspects of our lives.

Limitations of Current Models

Current image-based text generation models face several limitations that hinder their accuracy, fluency, and overall effectiveness. These limitations stem from the complexity of image understanding and the challenges of translating visual information into coherent and meaningful text.

- Limited Contextual Understanding: Models struggle to grasp the nuances of complex scenes, leading to inaccurate or incomplete descriptions. For example, a model might correctly identify objects in an image, but fail to understand their relationships or the overall context of the scene.

- Difficulty with Abstract Concepts: Visual representations of abstract concepts like emotions, ideas, or metaphors pose significant challenges for models. These concepts lack concrete visual elements, making it difficult for models to generate accurate and meaningful text.

- Bias and Stereotypes: The training data used for these models can reflect societal biases, leading to the generation of text that perpetuates stereotypes or reinforces harmful prejudices. This raises concerns about the ethical implications of using these models in sensitive contexts.

Ethical Implications of Image-Based Text Generation

The use of image-based text generation raises several ethical considerations that require careful examination. Addressing these concerns is crucial for ensuring responsible and ethical development and deployment of this technology.

- Privacy Concerns: The potential for generating text from images raises concerns about privacy, especially when dealing with personal or sensitive images. The use of this technology must adhere to strict privacy regulations and safeguards to protect individuals from unauthorized use of their images.

- Misinformation and Manipulation: The ability to generate text from images opens the door for potential misuse, such as creating misleading or fabricated narratives. It’s crucial to develop mechanisms for detecting and mitigating the spread of misinformation generated through this technology.

- Bias and Discrimination: The potential for bias in training data can lead to the generation of text that reinforces stereotypes or perpetuates discrimination. It’s essential to ensure that models are trained on diverse and representative datasets to minimize bias and promote fairness.

Future Directions in Image-Based Text Generation

Despite the challenges, image-based text generation holds immense potential for various applications. Research and development in this field are actively exploring advancements that could significantly enhance the capabilities and impact of this technology.

- Improved Contextual Understanding: Researchers are developing models that can better understand the context of images, incorporating information about relationships between objects, scene dynamics, and the overall narrative of the visual information. This will enable more accurate and comprehensive text generation.

- Multimodal Integration: Combining image-based text generation with other modalities, such as audio or video, will create richer and more immersive experiences. This integration can enable the generation of more comprehensive and nuanced narratives from multimodal data.

- Ethical Frameworks and Regulations: Developing ethical frameworks and regulations specifically for image-based text generation is crucial for ensuring responsible and ethical use of this technology. These frameworks will address concerns related to privacy, bias, and potential misuse, promoting responsible innovation.

The ability of these models to analyze images and generate text opens up a vast range of possibilities across various industries. From automating content creation and simplifying data analysis to enhancing accessibility and providing new avenues for creative expression, the implications of this technology are far-reaching. While challenges like bias, accuracy, and privacy need careful consideration, the future of image-based text generation is bright, promising to reshape how we interact with and interpret visual information.


Standi Techno News
