EU AI Act Trilogue Crunch: Shaping the Future of AI – the very phrase conjures up images of intense negotiations, clashing ideologies, and the monumental task of shaping the future of artificial intelligence. This crunch point, where the European Parliament, Council, and Commission come together to hammer out the final details of the EU AI Act, represents a pivotal moment in the global landscape of AI regulation.

The EU AI Act, a landmark piece of legislation, seeks to establish a comprehensive framework for the development, deployment, and use of AI systems within the European Union. The Act aims to balance the potential benefits of AI with the need to address ethical concerns, mitigate risks, and protect fundamental rights. The trilogue negotiations, therefore, hold immense significance, as they will determine the final shape of this crucial regulatory framework.

The EU AI Act Trilogue Crunch

The trilogue negotiations for the EU AI Act represent a defining moment in the global landscape of artificial intelligence regulation. This critical phase brings together the European Parliament, the Council of the European Union, and the European Commission to finalize the landmark legislation.

The Significance of the Trilogue Negotiations

The trilogue negotiations are crucial for the EU AI Act’s success because they provide the final platform for reconciling the diverse perspectives of the three institutions involved. This process ensures a balanced and comprehensive legal framework that can effectively address the multifaceted challenges and opportunities presented by AI.

Key Challenges and Areas of Contention

The trilogue negotiations have been marked by several key challenges and areas of contention. These include:

- Risk-Based Approach: The EU AI Act adopts a risk-based approach, categorizing AI systems based on their potential harm. However, there are disagreements on the specific risk levels and the criteria used for classification.

- Prohibition of Unacceptable-Risk AI Systems: The Act proposes prohibiting AI systems deemed to pose an unacceptable risk, such as those used for social scoring or real-time facial recognition in public spaces. High-risk systems, by contrast, are regulated rather than banned. The trilogue negotiations are focusing on defining the scope and application of these prohibitions.

- Transparency and Explainability: Ensuring transparency and explainability of AI systems is a key aspect of the Act. However, there are discussions on the extent of transparency requirements and the methods used to achieve explainability.

- Data Governance: The Act addresses data governance issues related to AI, including data access and sharing. The trilogue negotiations are focusing on balancing data protection with the need for innovation and development.

- Enforcement and Oversight: Establishing effective enforcement mechanisms and oversight bodies is crucial for the Act’s implementation. The trilogue negotiations are discussing the roles and responsibilities of different stakeholders in ensuring compliance.

Potential Impact of the Trilogue Outcome

The outcome of the trilogue negotiations will have a significant impact on the future of AI regulation in Europe. A robust and well-defined EU AI Act could:

- Shape Global AI Standards: The EU AI Act is expected to influence AI regulation globally, setting a precedent for other jurisdictions.

- Promote Ethical and Responsible AI Development: By establishing clear ethical guidelines and risk mitigation measures, the Act aims to promote the development and deployment of AI that benefits society.

- Foster Innovation and Economic Growth: By creating a predictable and transparent regulatory environment, the Act can encourage innovation and investment in AI while ensuring responsible development.

- Protect Fundamental Rights: The Act seeks to safeguard fundamental rights such as privacy, non-discrimination, and freedom of expression in the context of AI development and deployment.

Key Provisions and Their Implications

The EU AI Act is a landmark piece of legislation that aims to regulate the development, deployment, and use of artificial intelligence (AI) systems within the European Union. The Act is currently in the trilogue stage, where the European Parliament, the Council of the European Union, and the European Commission negotiate the final text. This process has been marked by significant disagreements over various provisions, highlighting the complex and evolving nature of AI regulation.

This section delves into the key provisions of the EU AI Act that are being negotiated, examining their potential implications for businesses, researchers, and consumers. It also compares and contrasts these provisions with existing AI regulations in other jurisdictions.

Risk-Based Approach to AI Regulation

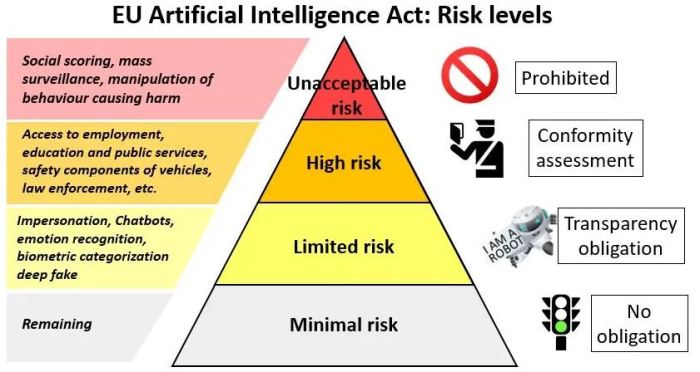

The EU AI Act adopts a risk-based approach to AI regulation, classifying AI systems into four risk categories: unacceptable risk, high risk, limited risk, and minimal risk. This approach aims to strike a balance between promoting innovation and ensuring the safety and ethical use of AI.

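- Unacceptable Risk AI Systems: These systems are deemed to pose an unacceptable threat to fundamental rights and are prohibited. Examples include AI systems used for social scoring, real-time remote biometric identification (such as facial recognition) in public spaces, and systems that manipulate people through subliminal techniques. AI systems developed or used exclusively for military purposes fall outside the Act’s scope rather than being prohibited by it.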

- High-Risk AI Systems: These systems are subject to strict requirements, including conformity assessments, risk management, and transparency obligations. Examples include AI systems used in critical infrastructure, healthcare, education, and law enforcement.

- Limited Risk AI Systems: These systems are subject mainly to transparency obligations, so that people know when they are interacting with an AI system or viewing AI-generated content. Examples include customer service chatbots and deepfakes.

- Minimal Risk AI Systems: These systems are generally not subject to specific regulatory requirements. Examples include AI systems used for simple tasks such as spam filtering or AI-enabled video games.

This risk-based approach allows the EU AI Act to address the diverse range of AI applications while ensuring that the most significant risks are mitigated. It also provides a framework for future-proofing the legislation as AI technology continues to evolve.
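The four tiers described above can be pictured as a lookup from tier to obligations. The following is a minimal sketch for illustration only: the `RiskTier` enum, the `OBLIGATIONS` table, and the `EXAMPLE_USE_CASES` mapping are hypothetical names that paraphrase the categories in this article. It is not a legal classification tool.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the tier descriptions in this article (illustrative).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: ["conformity assessment", "risk management", "transparency"],
    RiskTier.LIMITED: ["transparency"],
    RiskTier.MINIMAL: [],
}

# Hypothetical mapping of example use cases to tiers, taken from the examples above.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "customer service": RiskTier.LIMITED,
    "spam filtering": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations for a known example use case."""
    tier = EXAMPLE_USE_CASES[use_case]
    return OBLIGATIONS[tier]


def is_allowed(use_case: str) -> bool:
    """A use case is allowed unless it falls in the unacceptable tier."""
    return EXAMPLE_USE_CASES[use_case] is not RiskTier.UNACCEPTABLE
```

In practice, classification depends on the final legal text and on the context of use, so a static table like this can only serve as a first orientation.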

Transparency and Explainability

The EU AI Act places a strong emphasis on transparency and explainability, requiring providers of high-risk AI systems to provide users with clear information about the system’s functionality, purpose, and limitations.

- Transparency Requirements: Providers of high-risk AI systems must provide users with clear and concise information about the system’s intended purpose, its limitations, and the data used to train it. This includes disclosing any potential biases or risks associated with the system.

- Explainability Requirements: The Act requires providers to offer explanations for the decisions made by high-risk AI systems, particularly when those decisions have significant consequences for individuals. This requirement aims to enhance user understanding and trust in AI systems.

These transparency and explainability requirements are intended to promote accountability and fairness in the use of AI. They also aim to empower users to make informed decisions about their interactions with AI systems.

Governance and Oversight

The EU AI Act establishes a comprehensive governance framework for AI, including the creation of a European Artificial Intelligence Board (EAIB) and national authorities responsible for enforcing the Act.

- European Artificial Intelligence Board (EAIB): The EAIB is a new body tasked with providing guidance on the implementation of the Act, developing best practices, and coordinating national authorities.

- National Authorities: Each EU member state will designate a national authority responsible for enforcing the Act within its territory. These authorities will be responsible for overseeing the development, deployment, and use of AI systems, including conducting audits and imposing penalties for non-compliance.

This governance framework aims to ensure consistent and effective implementation of the EU AI Act across the EU. It also provides a mechanism for addressing emerging challenges and adapting the legislation to technological advancements.

Implications for Stakeholders

The EU AI Act has significant implications for various stakeholders, including businesses, researchers, and consumers.

Businesses

Businesses developing, deploying, or using AI systems will need to comply with the Act’s requirements, which may involve significant changes to their processes and practices.

- Increased Compliance Costs: Businesses may face increased compliance costs, particularly those developing or deploying high-risk AI systems. They will need to invest in resources to ensure their systems meet the Act’s requirements, including conducting risk assessments, implementing transparency measures, and documenting their AI development processes.

- Potential Market Advantages: The Act could create a level playing field for businesses by setting clear standards for AI development and deployment. This could encourage innovation and create new market opportunities for businesses that comply with the Act’s requirements.

- Risk of Penalties: Businesses that fail to comply with the Act’s provisions may face significant penalties, including fines and even the suspension or prohibition of their AI systems.

Researchers

The EU AI Act could impact research activities by influencing the development and deployment of AI systems.

- Restrictions on Certain Research Areas: The Act’s prohibition of unacceptable risk AI systems could limit research in certain areas, particularly work aimed at building social scoring systems.

- Increased Transparency Requirements: Researchers may need to comply with transparency requirements, such as disclosing their research methodologies and data sources, which could impact the confidentiality of their research.

- Opportunities for Collaboration: The Act could create opportunities for collaboration between researchers and industry, as businesses seek to comply with the Act’s requirements.

Consumers

The EU AI Act is intended to protect consumers from potential harms associated with the use of AI systems.

- Increased Transparency and Control: Consumers will have access to more information about the AI systems they interact with, allowing them to make informed decisions about their use.

- Enhanced Privacy Protection: The Act’s provisions on data protection and privacy could enhance consumer protection by limiting the collection and use of personal data by AI systems.

- Reduced Risk of Bias and Discrimination: The Act’s focus on fairness and non-discrimination could help to mitigate potential biases and discrimination in AI systems.

Comparison with Other Jurisdictions

The EU AI Act is one of the most comprehensive pieces of AI legislation proposed globally. It shares similarities with other jurisdictions’ AI regulations but also has distinctive features.

- United States: The US has a more fragmented approach to AI regulation, with various agencies focusing on specific aspects of AI, such as data privacy, algorithmic fairness, and cybersecurity. While the US lacks a comprehensive AI law, there are initiatives to develop federal guidelines and regulations.

- China: China has implemented a comprehensive AI strategy that emphasizes the development and deployment of AI for economic growth and national security. China’s AI regulations focus on promoting innovation while also addressing concerns about data privacy and security.

- Canada: Canada has adopted a principles-based approach to AI regulation, focusing on ethical considerations such as fairness, transparency, and accountability. Canada’s AI Strategy emphasizes the importance of collaboration between government, industry, and academia.

The EU AI Act’s risk-based approach, strong emphasis on transparency and explainability, and comprehensive governance framework differentiate it from other jurisdictions’ AI regulations. The Act’s provisions are likely to influence the development of AI regulations in other parts of the world.

Impact on High-Risk AI Systems

The EU AI Act’s focus on high-risk AI systems is a key aspect of its regulatory framework. This section delves into the specific provisions related to high-risk AI systems, analyzing their potential impact on the development and deployment of such systems in Europe.

Risk Assessment and Mitigation

The EU AI Act mandates a comprehensive risk assessment for all high-risk AI systems. This assessment requires identifying and evaluating potential risks, including those related to safety, health, fundamental rights, and the environment. The Act further outlines specific requirements for risk mitigation measures, which must be proportionate to the identified risks and ensure that the AI system operates within acceptable safety and ethical boundaries.

The risk assessment should consider the intended purpose of the AI system, the context of its use, and the potential consequences of its deployment.
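One way to picture "proportionate" mitigation is a small risk register. The sketch below assumes a conventional severity-times-likelihood score. The `Risk` class, the 1 to 5 scales, and the thresholds in `mitigation_level` are hypothetical choices for illustration and do not come from the Act.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    category: str    # e.g. "safety", "health", "fundamental rights", "environment"
    severity: int    # 1 (minor) to 5 (severe); the scale is an assumption
    likelihood: int  # 1 (rare) to 5 (frequent); the scale is an assumption

    @property
    def score(self) -> int:
        # Conventional risk-matrix score, not a formula taken from the Act.
        return self.severity * self.likelihood


def mitigation_level(risk: Risk) -> str:
    """Map a risk score to a mitigation level (thresholds are illustrative)."""
    if risk.score >= 15:
        return "redesign or withdraw"
    if risk.score >= 8:
        return "additional safeguards"
    return "monitor"


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical register for a single system.
register = [
    Risk("service outage", "safety", severity=3, likelihood=2),
    Risk("misidentification of a person", "fundamental rights", severity=5, likelihood=3),
]
```

Calling `prioritise(register)` puts the misidentification risk first, and `mitigation_level` then indicates how much effort each entry warrants.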

Transparency and Explainability

The EU AI Act emphasizes transparency and explainability for high-risk AI systems. This includes requirements for providing users with clear and concise information about the system’s capabilities, limitations, and potential risks. Additionally, the Act mandates that developers of high-risk AI systems must be able to explain the decision-making process of the system, enabling users to understand the rationale behind its outputs.

Human Oversight

The EU AI Act recognizes the importance of human oversight in the development and deployment of high-risk AI systems. It mandates that such systems should be subject to human supervision, allowing for intervention and correction in cases where the AI system’s outputs are deemed inappropriate or unsafe. This provision aims to ensure that human control and accountability remain central to the use of high-risk AI systems.
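A common way to implement this kind of supervision is a human-in-the-loop gate. The following sketch assumes the system reports a confidence value and that a reviewer callback can override the output. The `Decision` class, the `decide_with_oversight` function, and the 0.9 threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    outcome: str
    confidence: float
    reviewed_by_human: bool = False


def decide_with_oversight(
    model_outcome: str,
    confidence: float,
    reviewer: Callable[[str, float], Optional[str]],
    threshold: float = 0.9,  # illustrative cut-off, not a regulatory value
) -> Decision:
    """Route low-confidence outputs to a human reviewer who may override them."""
    if confidence >= threshold:
        return Decision(model_outcome, confidence)
    # The reviewer returns a replacement outcome, or None to accept the model's output.
    override = reviewer(model_outcome, confidence)
    final = override if override is not None else model_outcome
    return Decision(final, confidence, reviewed_by_human=True)
```

A confidence threshold is only one possible trigger. A deployment could equally route every decision in a sensitive category to a reviewer, regardless of confidence.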

Key Requirements for Different Types of High-Risk AI Systems

| Type of High-Risk AI System | Key Requirements | Implications |
|---|---|---|
| Biometric identification systems used for law enforcement | | |
| AI systems used in critical infrastructure (e.g., transportation, energy) | | |
| AI systems used in education and employment | | |

The Role of Data Governance

The EU AI Act recognizes the crucial role of data governance in shaping the responsible development and deployment of AI systems. It aims to establish a robust framework that fosters trust, transparency, and accountability in the use of data for AI.

Impact on Data Access, Sharing, and Ownership

The Act addresses the challenges associated with data access, sharing, and ownership, recognizing their impact on the development and deployment of AI systems. The Act aims to balance the need for data access for AI development with the protection of individuals’ privacy and data rights.

- Increased Transparency and Accountability: The Act requires companies to provide clear and concise information about the data used to train their AI systems, promoting transparency and accountability in the data governance process. This includes details about the source, type, and purpose of the data, as well as any potential biases or risks associated with its use.

- Data Access for Research and Innovation: The Act encourages data sharing for research and innovation purposes, particularly in areas of public interest. It aims to facilitate the development of robust and beneficial AI applications by providing researchers and developers with access to relevant data sets. However, it also emphasizes the importance of protecting sensitive data and ensuring responsible data sharing practices.

- Data Ownership and Control: The Act recognizes the importance of data ownership and control for individuals. It aims to empower individuals with greater control over their personal data, enabling them to decide how their data is used for AI development. This includes the right to access, rectify, and delete their personal data, as well as the right to object to its processing.

Key Data Governance Principles

The EU AI Act enshrines several key data governance principles to guide the responsible use of data in AI development and deployment. These principles aim to ensure that AI systems are developed and used ethically, transparently, and with respect for fundamental rights.

| Principle | Description |
|---|---|
| Lawfulness, Fairness, and Transparency | AI systems should be developed and used in a lawful, fair, and transparent manner, ensuring that data is processed in accordance with applicable laws and regulations. This principle emphasizes the need for clear and understandable information about the data used and the purpose of its processing. |
| Purpose Limitation | Data should be collected and processed for specific, explicit, and legitimate purposes. This principle aims to prevent the misuse of data for purposes beyond those for which it was initially collected. |
| Data Minimization | Only the necessary data should be collected and processed for the intended purpose. This principle aims to minimize the risk of data breaches and unauthorized access by limiting the amount of data collected and stored. |
| Accuracy | Data should be accurate and kept up-to-date. This principle emphasizes the importance of ensuring the quality and reliability of data used for AI development. |
| Integrity and Confidentiality | Data should be protected from unauthorized access, use, disclosure, alteration, or destruction. This principle emphasizes the need for robust security measures to safeguard data and prevent breaches. |
| Accountability | Organizations responsible for developing and deploying AI systems should be accountable for their data governance practices. This principle emphasizes the need for transparency, traceability, and mechanisms for redress in case of data breaches or misuse. |
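Purpose limitation and data minimization can be combined in a simple filter that keeps only the fields registered for a declared purpose. This is a rough sketch: the `ALLOWED_FIELDS` registry, its purposes, and its field names are hypothetical examples, not categories defined by the Act.

```python
# Hypothetical registry: which fields each declared purpose is allowed to use.
ALLOWED_FIELDS = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
    "fraud_detection": {"transaction_amount", "merchant", "timestamp"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only the fields registered for the declared purpose."""
    if purpose not in ALLOWED_FIELDS:
        # Purpose limitation: refuse processing for an undeclared purpose.
        raise ValueError(f"No registered purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    # Data minimization: drop every field the purpose does not need.
    return {key: value for key, value in record.items() if key in allowed}
```

For example, passing a record that contains `income`, `existing_debt`, and an unrelated field to `minimise(record, "credit_scoring")` returns only the first two, while an unregistered purpose raises an error instead of silently processing the data.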

Ethical Considerations and Societal Impact

The EU AI Act, while aiming to foster innovation and responsible AI development, raises significant ethical considerations and potential societal implications. This section looks at how the Act addresses concerns surrounding bias, discrimination, and transparency in AI systems, and at its impact on employment, privacy, and democratic values.

Bias and Discrimination in AI Systems

The Act recognizes the inherent risk of bias and discrimination in AI systems, stemming from the data used to train them. This data can reflect existing societal biases, leading to unfair or discriminatory outcomes.

- The Act requires developers to implement risk mitigation measures, including data quality checks, bias detection algorithms, and fairness assessments.

- It also encourages the use of diverse datasets and the development of AI systems that are robust to biases.

- The Act emphasizes the importance of transparency and explainability, allowing users to understand how AI systems make decisions and identify potential biases.

For example, in recruitment, an AI system trained on historical data might perpetuate existing gender or racial biases, favoring certain candidates over others. The Act aims to prevent such scenarios by requiring developers to assess their systems for bias and to mitigate it.
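A fairness assessment of the kind mentioned above often starts by comparing selection rates across groups. The sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The function names and the toy data are assumptions for illustration, and the Act as described here does not prescribe a specific metric.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs; returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Toy recruitment outcomes for two hypothetical groups.
outcomes = [
    ("a", True), ("a", True), ("a", False), ("a", False),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
```

Here group "a" is selected half the time and group "b" a quarter of the time, so the ratio is 0.5. Which value counts as acceptable is a policy choice, and a single ratio cannot capture every form of unfairness.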

Transparency and Explainability

Transparency and explainability are crucial for ensuring accountability and trust in AI systems.

- The Act requires developers to provide clear documentation and explanations about the functioning and intended use of high-risk AI systems.

- This includes outlining the data used, the algorithms employed, and the decision-making processes involved.

- It also emphasizes the need for user-friendly interfaces that enable individuals to understand the reasoning behind AI-driven decisions.

For instance, a loan approval system should be transparent about the factors considered in making a decision, allowing individuals to challenge unfair outcomes or understand why their application was rejected.
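For a simple scoring model, this kind of transparency can be as basic as reporting how much each factor contributed to the result. The sketch below assumes a linear score. The `WEIGHTS`, `THRESHOLD`, and field names are hypothetical and do not describe any real lender's model.

```python
# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS = {"income_k": 0.5, "existing_debt_k": -1.0, "years_employed": 2.0}
THRESHOLD = 20.0  # illustrative approval cut-off


def explain_decision(applicant: dict) -> dict:
    """Score an applicant and list each factor's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    # Sort factors from most negative to most positive contribution,
    # so the main reasons for a rejection appear first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return {"approved": score >= THRESHOLD, "score": score, "factors": ranked}


applicant = {"income_k": 40, "existing_debt_k": 15, "years_employed": 3}
result = explain_decision(applicant)
```

For this applicant the score falls below the threshold, and the first entry in `result["factors"]` shows that existing debt pulled it down the most. More complex models need dedicated explanation techniques, but the goal of giving the applicant something concrete to challenge is the same.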

Impact on Employment

The Act acknowledges the potential impact of AI on the labor market, particularly for jobs that are susceptible to automation.

- It emphasizes the need for reskilling and upskilling programs to prepare workers for the changing job landscape.

- The Act encourages social dialogue and collaboration between stakeholders to address the potential displacement of workers and ensure a just transition.

For example, the Act promotes the development of AI-related skills and training programs to equip workers with the necessary competencies to thrive in the evolving job market.

Impact on Privacy

The Act recognizes the importance of protecting personal data in the context of AI development and deployment.

- It reinforces existing data protection regulations, ensuring that AI systems handle personal data responsibly and ethically.

- The Act emphasizes the need for data anonymization and minimization, limiting the collection and processing of personal data to what is strictly necessary.

- It also requires developers to obtain informed consent from individuals before using their data for AI training or deployment.

For instance, an AI-powered healthcare system should obtain explicit consent from patients before using their medical records for research or treatment purposes, ensuring data privacy and security.

Impact on Democratic Values

The Act acknowledges the potential impact of AI on democratic values, such as freedom of expression and the right to privacy.

- It encourages the development of AI systems that are compatible with democratic principles and respect human rights.

- The Act emphasizes the need for transparency and accountability in the use of AI for public purposes, ensuring that AI systems do not undermine democratic processes.

For example, the Act promotes the development of AI systems that are used in a way that safeguards democratic values, such as preventing the misuse of AI for political manipulation or censorship.

Key Ethical Principles for AI Development

The EU AI Act promotes a set of ethical principles that should guide the development and deployment of AI systems in Europe.

- Human oversight: AI systems should remain under human control and oversight, ensuring that they are used responsibly and ethically.

- Fairness and non-discrimination: AI systems should be designed and developed to be fair and non-discriminatory, avoiding bias and promoting equal opportunities.

- Transparency and explainability: AI systems should be transparent and explainable, allowing users to understand how they work and make decisions.

- Privacy and data protection: AI systems should respect privacy and data protection principles, ensuring that personal data is handled responsibly and ethically.

- Safety and security: AI systems should be safe and secure, minimizing risks to individuals and society.

- Accountability and responsibility: Developers and deployers of AI systems should be accountable for their actions and responsible for the consequences of their use.

These ethical principles are essential for building trust in AI and ensuring that it is used for the benefit of society.

Challenges and Opportunities for the Future

The EU AI Act is a groundbreaking piece of legislation that aims to regulate the development and deployment of artificial intelligence (AI) systems in Europe. While the Act is a significant step towards ensuring the responsible and ethical use of AI, it also presents several challenges and opportunities for the future of AI regulation in Europe.

Challenges in Implementing the EU AI Act

The successful implementation of the EU AI Act hinges on overcoming several key challenges.

- Defining and Classifying High-Risk AI Systems: The Act requires the identification and classification of high-risk AI systems, which can be a complex and subjective process. The definition of “high-risk” needs to be clear and unambiguous to ensure consistent application across different sectors and contexts.

- Ensuring Effective Oversight and Enforcement: The Act relies on a robust enforcement mechanism to ensure compliance with its provisions. This requires sufficient resources and expertise within national authorities to effectively monitor and sanction non-compliant AI systems.

- Balancing Innovation and Regulation: The Act aims to strike a balance between promoting innovation in AI and ensuring its responsible use. It is crucial to avoid overly burdensome regulations that could stifle innovation while still safeguarding against potential risks.

- Adapting to Rapid Technological Advancements: The field of AI is constantly evolving, with new technologies and applications emerging rapidly. The EU AI Act needs to be flexible and adaptable to keep pace with these advancements and ensure its continued relevance.

- International Cooperation and Harmonization: The global nature of AI requires international cooperation and harmonization of regulations to avoid fragmentation and conflicting standards. This is particularly important for ensuring the smooth operation of AI systems across borders.

Opportunities for Innovation and Responsible AI Development

The EU AI Act also presents significant opportunities for innovation and responsible AI development.

- Boosting Trust and Confidence in AI: The Act’s focus on ethical considerations and risk mitigation can help build public trust and confidence in AI systems, encouraging wider adoption and acceptance.

- Creating a Level Playing Field for Responsible AI: The Act can foster a more level playing field for responsible AI development by setting clear standards and guidelines. This can incentivize companies to prioritize ethical and responsible practices.

- Driving Innovation in AI Research and Development: The Act’s focus on transparency, accountability, and human oversight can encourage innovation in AI research and development by creating a more favorable environment for responsible AI solutions.

- Promoting the Development of Robust AI Governance Frameworks: The Act’s implementation can lead to the development of comprehensive and robust AI governance frameworks that address ethical, legal, and societal implications.

- Establishing Europe as a Leader in Responsible AI: The EU AI Act has the potential to position Europe as a global leader in responsible AI development, attracting investment and talent in the field.

Key Challenges and Opportunities for the Future of AI Regulation in Europe

| Challenge | Opportunity |
|---|---|
| Defining and classifying high-risk AI systems | Boosting trust and confidence in AI |
| Ensuring effective oversight and enforcement | Creating a level playing field for responsible AI |
| Balancing innovation and regulation | Driving innovation in AI research and development |
| Adapting to rapid technological advancements | Promoting the development of robust AI governance frameworks |
| International cooperation and harmonization | Establishing Europe as a leader in responsible AI |

The EU AI Act Trilogue Crunch is a defining moment for the future of AI in Europe. The outcome of these negotiations will have far-reaching implications for businesses, researchers, consumers, and society as a whole. As the dust settles, the EU AI Act will undoubtedly shape the global landscape of AI regulation, influencing how AI is developed, deployed, and used across the world. This crunch point is not just about regulating AI; it’s about shaping a future where AI benefits humanity, fosters innovation, and upholds ethical principles.

Standi Techno News
