Hugging Face has released a benchmark for testing generative AI on health tasks, a step toward using AI to improve healthcare outcomes. Developed by the AI community platform, the benchmark aims to standardize how AI models are evaluated and developed for a range of healthcare applications.

The benchmark provides a standardized framework for evaluating the performance of generative AI models on a variety of health-related tasks, including medical image analysis, drug discovery, and patient diagnosis. It encompasses a diverse dataset of real-world healthcare data, ensuring that the models are tested on realistic and challenging scenarios. The benchmark also incorporates a comprehensive set of evaluation metrics that are specifically designed to assess the accuracy, reliability, and ethical implications of AI models in the healthcare context.

Hugging Face’s Health Benchmark

Hugging Face is a popular platform in the AI community that provides tools and resources for building and deploying machine learning models. It is known for its extensive library of pre-trained models, datasets, and tools that enable researchers and developers to easily access and utilize cutting-edge AI technologies.

The significance of this benchmark lies in its ability to objectively evaluate the performance of generative AI models on healthcare tasks. By providing a standardized framework for testing, the benchmark allows researchers to compare different models and identify those that are most effective for specific healthcare applications. This is crucial for ensuring that AI models used in healthcare are reliable and trustworthy.

Impact of the Benchmark

The introduction of this benchmark is expected to have a significant impact on the advancement of AI in healthcare. Here are some potential benefits:

- Improved Model Performance: The benchmark provides a clear target for researchers to strive for, encouraging the development of more accurate and robust generative AI models for healthcare applications.

- Increased Transparency and Trust: By establishing a standardized evaluation framework, the benchmark promotes transparency and trust in the development and deployment of AI models in healthcare.

- Faster Progress in AI Research: The benchmark facilitates collaboration among researchers, allowing them to share best practices and accelerate the pace of AI development in healthcare.

The Structure and Scope of the Benchmark

The Hugging Face Health Benchmark is a comprehensive evaluation suite designed to assess the performance of generative AI models on various healthcare tasks. It offers a standardized framework for researchers and developers to compare the capabilities of different models and identify areas for improvement.

This benchmark covers a wide range of healthcare-related tasks, including text summarization, question answering, and text generation, all tailored to the unique challenges of the medical domain. The data used in the benchmark is carefully curated and encompasses diverse real-world scenarios, ensuring its relevance and applicability to practical healthcare applications.

Tasks Included in the Benchmark

The benchmark encompasses a variety of tasks that are crucial for advancing AI in healthcare. These tasks are carefully chosen to represent real-world challenges and provide a comprehensive evaluation of model capabilities.

- Text Summarization: This task involves generating concise and informative summaries of medical documents, such as patient records or research articles. It is essential for enabling quick access to key information and facilitating efficient decision-making.

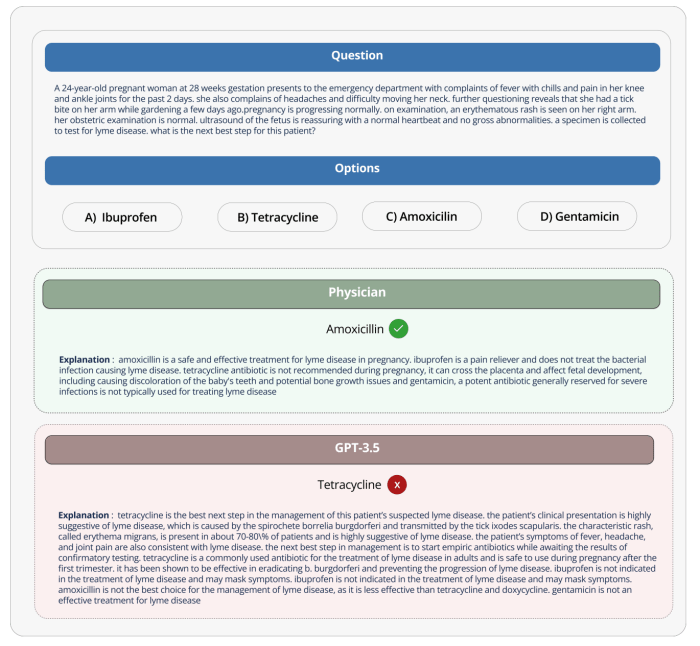

- Question Answering: This task focuses on retrieving accurate and relevant information from medical texts in response to user queries. This is particularly important for providing medical professionals and patients with timely and reliable answers to their questions.

- Text Generation: This task involves generating coherent and medically accurate text, such as patient reports or clinical notes. It can be used to automate documentation tasks and enhance communication between healthcare providers.
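To make the idea of a shared evaluation framework across these tasks concrete, here is a minimal sketch in Python. It is not the benchmark's actual code: the names `Example`, `Task`, and `evaluate` are hypothetical, and the "model" is assumed to be any function that maps a prompt string to an output string. Each task carries its own scoring function, so summarization, question answering, and text generation can all be run through the same loop.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Example:
    """One evaluation item: an input prompt and the expected output."""
    prompt: str
    reference: str


@dataclass(frozen=True)
class Task:
    """A named task with its examples and a per-example scorer (hypothetical)."""
    name: str
    examples: tuple[Example, ...]
    # score(prediction, reference) -> value between 0.0 and 1.0
    score: Callable[[str, str], float]


def evaluate(model: Callable[[str], str], tasks: Iterable[Task]) -> dict[str, float]:
    """Run the model on every example and return the mean score per task."""
    results: dict[str, float] = {}
    for task in tasks:
        scores = [task.score(model(ex.prompt), ex.reference) for ex in task.examples]
        results[task.name] = sum(scores) / len(scores) if scores else 0.0
    return results


def exact_match(prediction: str, reference: str) -> float:
    """Score 1.0 when the answers match, ignoring case and outer whitespace."""
    return float(prediction.strip().lower() == reference.strip().lower())


if __name__ == "__main__":
    # Toy task and toy model, for illustration only.
    qa_task = Task(
        name="question_answering",
        examples=(
            Example("Is insulin a hormone? Answer yes or no.", "yes"),
            Example("Is aspirin an antibiotic? Answer yes or no.", "no"),
        ),
        score=exact_match,
    )
    always_yes = lambda prompt: "Yes"
    print(evaluate(always_yes, [qa_task]))
```

Because every model is scored by the same function on the same examples, results from different models can be compared directly, which is the point of a standardized benchmark.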

Data Used in the Benchmark

The benchmark utilizes a diverse range of data sources to ensure its real-world applicability. This data includes:

- Electronic Health Records (EHRs): These records contain detailed information about patient demographics, medical history, diagnoses, medications, and treatments. They provide a rich source of data for training and evaluating AI models.

- Medical Literature: The benchmark includes data from medical journals, textbooks, and research papers, offering access to a vast repository of medical knowledge.

- Clinical Trial Data: This data encompasses information from clinical trials, including patient characteristics, treatment outcomes, and adverse events. It is valuable for developing and evaluating AI models for drug discovery and clinical decision support.

Evaluation Metrics Employed in the Benchmark

The benchmark utilizes a set of carefully chosen evaluation metrics to assess the performance of AI models on different healthcare tasks. These metrics are designed to capture various aspects of model performance, including accuracy, fluency, and relevance.

- Accuracy: This metric measures the model’s ability to generate correct and factually accurate outputs. For tasks like question answering, it reflects the proportion of questions answered correctly.

- Fluency: This metric evaluates the readability and coherence of the generated text. It assesses whether the model produces grammatically correct and logically consistent outputs.

- Relevance: This metric measures the extent to which the generated text is relevant to the input or task at hand. For example, in text summarization, it evaluates whether the summary captures the most important information from the original document.
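As a rough illustration of how two of these metrics could be computed, the sketch below implements exact-match accuracy for question answering and a simple unigram-recall score as a stand-in for relevance. These are assumptions chosen for clarity, not the benchmark's published formulas, and the function names are hypothetical. Fluency is left out because it usually needs a language model or human raters rather than a short formula.

```python
import re
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Proportion of predictions whose normalized tokens equal the reference's."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    correct = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return correct / len(predictions)


def unigram_recall(summary: str, key_points: str) -> float:
    """Fraction of key-point tokens (counted with multiplicity) found in the summary."""
    ref_counts = Counter(normalize(key_points))
    hyp_counts = Counter(normalize(summary))
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, hyp_counts[token]) for token, count in ref_counts.items())
    return overlap / total


if __name__ == "__main__":
    print(exact_match_accuracy(["Aspirin", "ibuprofen"], ["aspirin", "Paracetamol"]))
    print(unigram_recall("Patient has type 2 diabetes.", "type 2 diabetes"))
```

Token overlap is a crude proxy: a summary can repeat the right words and still be clinically wrong, which is why accuracy and relevance are reported as separate metrics.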

Key Features of the Benchmark

The Hugging Face Health Benchmark is designed to go beyond traditional AI evaluation frameworks by incorporating unique features that address the specific challenges of generative AI in healthcare. This comprehensive approach ensures a more robust and meaningful assessment, enabling researchers and developers to build better AI models for healthcare applications.

These features are crucial for evaluating generative AI models in the context of healthcare, where accuracy, safety, and ethical considerations are paramount.

Focus on Healthcare-Specific Tasks

The benchmark focuses on tasks directly relevant to healthcare, including:

- Medical Question Answering: Evaluating the model’s ability to answer complex medical questions accurately and comprehensively.

- Patient Summarization: Assessing the model’s capacity to summarize patient medical records, highlighting key information for clinical decision-making.

- Medical Text Generation: Testing the model’s ability to generate accurate and coherent medical text, such as discharge summaries or patient education materials.

This task-specific approach ensures that the benchmark evaluates models based on their performance in real-world healthcare scenarios.

Emphasis on Safety and Ethics

The benchmark incorporates evaluation metrics that prioritize safety and ethical considerations, such as:

- Bias Detection: Measuring the model’s potential for generating biased or discriminatory outputs, ensuring fair and equitable outcomes.

- Hallucination Detection: Identifying instances where the model generates inaccurate or fabricated information, promoting responsible AI development.

- Privacy Protection: Evaluating the model’s ability to handle sensitive patient data responsibly, ensuring compliance with privacy regulations.

This focus on safety and ethics ensures that the benchmark assesses models based on their potential impact on patient care and societal well-being.
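Two of the checks above lend themselves to a small sketch. The Python below is an assumption-laden illustration, not the benchmark's method: `accuracy_gap` treats bias as the difference in accuracy between the best-served and worst-served patient group, and `find_pii` flags a few identifier formats in model output using hypothetical regular expressions. Real privacy screening would need far broader coverage than these three patterns.

```python
import re
from collections import defaultdict
from typing import Iterable

# Hypothetical patterns for illustration only; they cover a few US-style formats.
PII_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return the matches for each pattern that appears in the text."""
    found = {name: pattern.findall(text) for name, pattern in PII_PATTERNS.items()}
    return {name: hits for name, hits in found.items() if hits}


def accuracy_by_group(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Compute accuracy per group from (group, is_correct) pairs."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def accuracy_gap(records: Iterable[tuple[str, bool]]) -> float:
    """Difference between the highest and lowest per-group accuracy."""
    per_group = accuracy_by_group(records)
    if not per_group:
        return 0.0
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    output = "Contact jane.doe@example.org or 555-123-4567 for results."
    print(find_pii(output))
    results = [("group_a", True), ("group_a", False), ("group_b", True), ("group_b", True)]
    print(accuracy_gap(results))
```

A gap near zero suggests the model performs similarly across groups on the tested examples; it does not prove the model is unbiased, since the result depends on which groups and examples are included.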

Multi-Modal Evaluation

The benchmark incorporates multi-modal evaluation, assessing the model’s ability to handle different types of data, including:

- Text: Evaluating the model’s understanding and generation of medical text.

- Images: Assessing the model’s ability to interpret and generate medical images, such as X-rays or MRIs.

- Audio: Evaluating the model’s capacity to process and generate audio data, such as patient recordings or medical consultations.

This multi-modal approach reflects the complexity of real-world healthcare data and ensures a more comprehensive evaluation of generative AI models.

Implications for the Future of AI in Healthcare

The Hugging Face Health Benchmark could shape how generative AI is developed and adopted across healthcare domains. Its impact extends beyond technical progress: by making safety and ethics part of the evaluation, it encourages responsible AI development in healthcare.

Accelerating AI-Powered Solutions for Healthcare Challenges

The benchmark provides a standardized framework for evaluating generative AI models’ performance on a range of healthcare tasks. This standardized evaluation paves the way for researchers and developers to compare different models, identify areas for improvement, and prioritize research directions. Consequently, the benchmark accelerates the development of AI-powered solutions for addressing critical healthcare challenges.

- Drug Discovery and Development: Generative AI models can analyze vast datasets of molecular structures and predict the properties of new drug candidates. The benchmark facilitates the development of more accurate and efficient drug discovery processes, leading to the creation of novel and effective therapies. For instance, researchers can use the benchmark to evaluate models’ ability to predict the binding affinity of drug candidates to target proteins, thereby accelerating the identification of promising therapeutic agents.

- Personalized Medicine: Generative AI models can personalize treatment plans by analyzing individual patient data, including medical history, genetic information, and lifestyle factors. The benchmark empowers developers to build more robust and personalized AI-powered healthcare systems that tailor treatment strategies to each patient’s unique needs. For example, the benchmark can be used to evaluate models’ ability to predict the risk of developing specific diseases based on an individual’s genetic profile, enabling proactive interventions and personalized preventive care.

- Medical Imaging Analysis: Generative AI models can analyze medical images, such as X-rays, MRIs, and CT scans, to detect abnormalities and assist in diagnosis. The benchmark provides a robust platform for evaluating the performance of these models, leading to the development of more accurate and reliable diagnostic tools. For instance, the benchmark can be used to assess models’ ability to detect subtle signs of cancer in medical images, enabling early diagnosis and improved treatment outcomes.

The Hugging Face benchmark gives researchers and developers a common basis for building more robust, reliable, and ethical AI models that address critical healthcare challenges. By providing a standardized platform for evaluation, it fosters collaboration and can accelerate the development of AI-powered solutions with the potential to improve patient care.


Standi Techno News
